c6131064c6467e0927cfdb77b0488dc715aef75d,pymc3/distributions/discrete.py,Categorical,logp,#Categorical#Any#,732

Before Change

                   np.ndarray,
                   tt.TensorConstant,
                   tt.sharedvar.SharedVariable)):
    sumto1 = theano.gradient.zero_grad(
        tt.le(abs(tt.sum(p_, axis=-1) - 1), 1e-5))
    p = p_
else:
    p = p_ / tt.sum(p_, axis=-1, keepdims=True)
    sumto1 = True

After Change

p = p_ / tt.sum(p_, axis=-1, keepdims=True)
if p.ndim > 1:
    pattern = (p.ndim - 1,) + tuple(range(p.ndim - 1))
    a = tt.log(p.dimshuffle(pattern)[value_clip])
else:
    a = tt.log(p[value_clip])
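
The effect of the "After Change" fragment can be sketched in NumPy, under some assumptions: `np.transpose` stands in for Theano's `dimshuffle` (the two agree when the pattern is a pure permutation of axes), and the array `p_` and the scalar `value` below are made-up illustration data, not taken from the commit. The sketch shows that the weights are always normalized over the last axis, and that `pattern` moves the category axis to the front so that indexing with a category selects that category's probability for every row.

```python
import numpy as np

# Hypothetical unnormalized weights: 2 rows, 3 categories on the last axis.
p_ = np.array([[1.0, 1.0, 2.0],
               [2.0, 6.0, 2.0]])

# Unconditional normalization over the last axis, as in the "After Change" code.
p = p_ / np.sum(p_, axis=-1, keepdims=True)

# Permutation that moves the last (category) axis to the front.
pattern = (p.ndim - 1,) + tuple(range(p.ndim - 1))

# NumPy stand-in for p.dimshuffle(pattern); shape goes from (2, 3) to (3, 2).
p_front = np.transpose(p, pattern)

# Hypothetical observed category (a scalar, to keep the indexing simple).
value = 2

# Log-probability of category `value` for each row.
log_prob = np.log(p_front[value])
```

With a scalar `value`, `p_front[value]` has one entry per row of `p`. The sketch only covers that case; it makes no claim about how the Theano expression behaves for array-valued `value_clip`.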

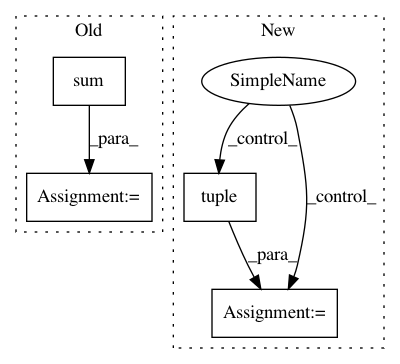

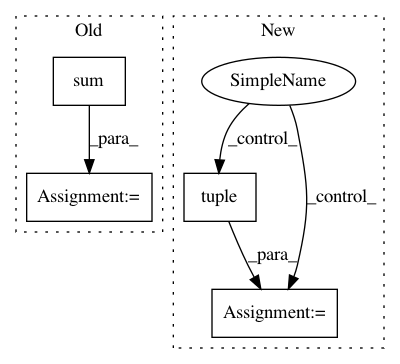

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: pymc-devs/pymc3

Commit Name: c6131064c6467e0927cfdb77b0488dc715aef75d

Time: 2019-02-28

Author: luciano.paz.neuro@gmail.com

File Name: pymc3/distributions/discrete.py

Class Name: Categorical

Method Name: logp

Project Name: cornellius-gp/gpytorch

Commit Name: f76a4dabb4cd38ee58d01a35c5b511e224d060d2

Time: 2018-09-17

Author: gpleiss@gmail.com

File Name: gpytorch/lazy/sum_batch_lazy_tensor.py

Class Name: SumBatchLazyTensor

Method Name: _matmul

Project Name: keras-team/keras

Commit Name: c913b6da92f6ab9a3f4c897caa4085e782a14680

Time: 2018-09-11

Author: rvinas@users.noreply.github.com

File Name: tests/keras/backend/reference_operations.py

Class Name:

Method Name: sum