565f0c88fc5cfbcfd5476da8146e92c793af4834,examples/pytorch/gat/gat.py,GraphAttention,edge_softmax,#GraphAttention#,81

Before Change

# compute the max

self.g.update_all(fn.copy_edge("a", "a"), fn.max("a", "a_max"))

# minus the max and exp

self.g.apply_edges(lambda edges: {"a": torch.exp(edges.data["a"] - edges.dst["a_max"])})

# compute dropout

self.g.apply_edges(lambda edges: {"a_drop": self.attn_drop(edges.data["a"])})

# compute normalizer

self.g.update_all(fn.copy_edge("a", "a"), fn.sum("a", "z"))
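Apart from the dropout step, the snippet above reads as a numerically stable softmax over each destination node's incoming edges: take the per-node max, subtract it and exponentiate, then sum the exponentials into a normalizer. The following is a minimal plain-Python sketch of that computation, not the DGL implementation. The function names `edge_softmax_parts` and `normalize`, and the list-based edge representation, are hypothetical and chosen only for illustration. Dropout is omitted, and the final division by the normalizer is an assumption about how `z` is used later.

```python
import math
from collections import defaultdict


def edge_softmax_parts(dst, scores):
    """Return (per-edge exp values, per-node normalizer).

    dst[i] is the destination node of edge i and scores[i] is its raw
    attention score. Both names are illustrative assumptions.
    """
    # Step 1: max incoming score per destination node (for stability).
    node_max = defaultdict(lambda: -math.inf)
    for d, s in zip(dst, scores):
        node_max[d] = max(node_max[d], s)

    # Step 2: subtract the destination's max, then exponentiate.
    exp_scores = [math.exp(s - node_max[d]) for d, s in zip(dst, scores)]

    # Step 3: sum the exponentials per destination node (the "z" field).
    normalizer = defaultdict(float)
    for d, e in zip(dst, exp_scores):
        normalizer[d] += e

    return exp_scores, dict(normalizer)


def normalize(dst, exp_scores, normalizer):
    """Divide each edge's exp value by its destination's normalizer."""
    return [e / normalizer[d] for d, e in zip(dst, exp_scores)]


# Three edges: two point at node 0, one points at node 1.
dst = [0, 0, 1]
scores = [1.0, 1.0, 5.0]
exp_scores, z = edge_softmax_parts(dst, scores)
attention = normalize(dst, exp_scores, z)
```

Subtracting the per-node max leaves the normalized result unchanged but keeps the exponentials from overflowing when raw scores are large.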

After Change

# Save normalizer

self.g.ndata["z"] = normalizer

# Dropout attention scores and save them

self.g.edata["a_drop"] = self.attn_drop(scores)

class GAT(nn.Module):

    def __init__(self,

                 g,

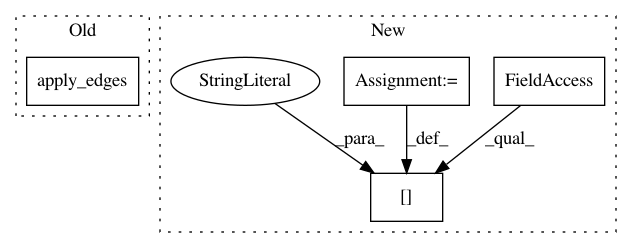

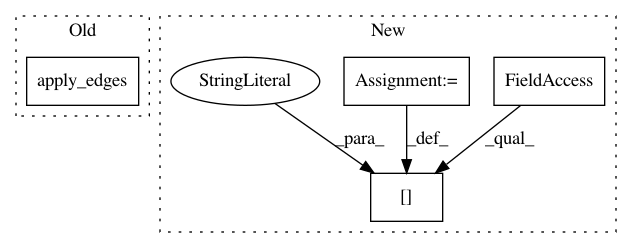

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: dmlc/dgl

Commit Name: 565f0c88fc5cfbcfd5476da8146e92c793af4834

Time: 2019-02-25

Author: minjie.wang@nyu.edu

File Name: examples/pytorch/gat/gat.py

Class Name: GraphAttention

Method Name: edge_softmax

Project Name: dmlc/dgl

Commit Name: dda103d9f6ff7c97b785575a8e753a130886a337

Time: 2021-02-18

Author: coin2028@hotmail.com

File Name: python/dgl/nn/mxnet/conv/edgeconv.py

Class Name: EdgeConv

Method Name: forward

Project Name: dmlc/dgl

Commit Name: dda103d9f6ff7c97b785575a8e753a130886a337

Time: 2021-02-18

Author: coin2028@hotmail.com

File Name: python/dgl/nn/pytorch/conv/edgeconv.py

Class Name: EdgeConv

Method Name: forward