174dd18905f12bfe0b418a4bad3575e6331478a4,python/dgl/nn/pytorch/glob.py,MultiHeadAttention,forward,#MultiHeadAttention#Any#Any#Any#Any#,663

Before Change

```python
e = e / np.sqrt(self.d_head)

# generate mask
mask = th.zeros(batch_size, max_len_x, max_len_mem).to(e.device)
for i in range(batch_size):
    mask[i, :lengths_x[i], :lengths_mem[i]].fill_(1)
mask = mask.unsqueeze(1)
e.masked_fill_(mask == 0, -float("inf"))

# apply softmax
alpha = th.softmax(e, dim=-1)
```
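The pre-change masking can be reproduced as a small NumPy sketch. It assumes NumPy in place of PyTorch, and `loop_mask` and `softmax` are hypothetical helpers written for illustration, not DGL functions. The sketch shows that a padded query position (index `>= lengths_x[i]`) has every key masked. Its score row is therefore all `-inf`, and the softmax returns NaN for that row.

```python
import numpy as np


def loop_mask(lengths_x, lengths_mem):
    """Hypothetical mirror of the pre-change loop: (B, 1, max_len_x, max_len_mem) 0/1 mask."""
    batch_size = len(lengths_x)
    max_len_x, max_len_mem = max(lengths_x), max(lengths_mem)
    mask = np.zeros((batch_size, max_len_x, max_len_mem))
    for i in range(batch_size):
        mask[i, :lengths_x[i], :lengths_mem[i]] = 1
    return mask[:, None]  # same role as mask.unsqueeze(1): add a head axis


def softmax(e, axis=-1):
    """Row-wise softmax; a row that is entirely -inf comes out as NaN."""
    with np.errstate(invalid="ignore"):  # silence -inf - -inf and nan/nan warnings
        w = np.exp(e - e.max(axis=axis, keepdims=True))
        return w / w.sum(axis=axis, keepdims=True)


lengths_x, lengths_mem = [2, 3], [3, 1]
mask = loop_mask(lengths_x, lengths_mem)
rng = np.random.default_rng(0)
e = rng.standard_normal((2, 4, 3, 3))  # (B, num_heads, max_len_x, max_len_mem)
e = np.where(mask == 0, -np.inf, e)    # like e.masked_fill_(mask == 0, -inf)
alpha = softmax(e)

print(np.isnan(alpha[0, :, 2]).all())  # True: padded query row of the first graph
print(np.isnan(alpha[1]).any())        # False: every query row of the second graph has a key
```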

After Change

```python
max_len_x = max(lengths_x)
max_len_mem = max(lengths_mem)
device = x.device
lengths_x = th.tensor(lengths_x, dtype=th.int64, device=device)
lengths_mem = th.tensor(lengths_mem, dtype=th.int64, device=device)

queries = self.proj_q(x).view(-1, self.num_heads, self.d_head)
keys = self.proj_k(mem).view(-1, self.num_heads, self.d_head)
values = self.proj_v(mem).view(-1, self.num_heads, self.d_head)

# padding to (B, max_len_x/mem, num_heads, d_head)
queries = F.pad_packed_tensor(queries, lengths_x, 0)
keys = F.pad_packed_tensor(keys, lengths_mem, 0)
values = F.pad_packed_tensor(values, lengths_mem, 0)

# attention score with shape (B, num_heads, max_len_x, max_len_mem)
e = th.einsum("bxhd,byhd->bhxy", queries, keys)
# normalize
e = e / np.sqrt(self.d_head)

# generate mask
mask = _gen_mask(lengths_x, lengths_mem, max_len_x, max_len_mem)
e = e.masked_fill(mask == 0, -float("inf"))

# apply softmax
alpha = th.softmax(e, dim=-1)
# the following line addresses the NaN issue, see
# https://github.com/dmlc/dgl/issues/2657
alpha = alpha.masked_fill(mask == 0, 0.)

# sum of value weighted by alpha
out = th.einsum("bhxy,byhd->bxhd", alpha, values)

# project to output
```
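The post-change pipeline (pad, score, mask, softmax, zero-fill, weighted sum) can be sketched end to end in NumPy. This is an illustration under assumptions, not DGL's code. `pad_packed` is a hypothetical stand-in that is assumed to behave like `F.pad_packed_tensor` as the padding comment in the excerpt describes. `gen_mask` is a hypothetical broadcast-based mask in the spirit of `_gen_mask`, whose actual body is not shown in this record. The learned projections `proj_q`/`proj_k`/`proj_v` and the output projection are omitted.

```python
import numpy as np


def pad_packed(packed, lengths, value=0.0):
    """Hypothetical stand-in for F.pad_packed_tensor:
    (sum(lengths), H, D) -> (B, max(lengths), H, D), padded with `value`."""
    out = np.full((len(lengths), max(lengths)) + packed.shape[1:], value, dtype=packed.dtype)
    offsets = np.concatenate([[0], np.cumsum(lengths)])
    for i, n in enumerate(lengths):
        out[i, :n] = packed[offsets[i]:offsets[i] + n]
    return out


def gen_mask(lengths_x, lengths_mem, max_len_x, max_len_mem):
    """Hypothetical vectorized mask: True where both query and memory positions are real."""
    x_valid = np.arange(max_len_x)[None, :] < lengths_x[:, None]        # (B, X)
    mem_valid = np.arange(max_len_mem)[None, :] < lengths_mem[:, None]  # (B, Y)
    return (x_valid[:, :, None] & mem_valid[:, None, :])[:, None]       # (B, 1, X, Y)


def masked_attention(q_packed, k_packed, v_packed, lengths_x, lengths_mem):
    lengths_x, lengths_mem = np.asarray(lengths_x), np.asarray(lengths_mem)
    q = pad_packed(q_packed, lengths_x)    # (B, X, H, D)
    k = pad_packed(k_packed, lengths_mem)  # (B, Y, H, D)
    v = pad_packed(v_packed, lengths_mem)  # (B, Y, H, D)
    e = np.einsum("bxhd,byhd->bhxy", q, k) / np.sqrt(q.shape[-1])
    mask = gen_mask(lengths_x, lengths_mem, lengths_x.max(), lengths_mem.max())
    e = np.where(mask, e, -np.inf)
    with np.errstate(invalid="ignore"):
        w = np.exp(e - e.max(axis=-1, keepdims=True))
        alpha = w / w.sum(axis=-1, keepdims=True)  # NaN on fully masked rows
    alpha = np.where(mask, alpha, 0.0)  # counterpart of alpha.masked_fill(mask == 0, 0.)
    return np.einsum("bhxy,byhd->bxhd", alpha, v)  # (B, X, H, D)


rng = np.random.default_rng(0)
lengths_x, lengths_mem = [2, 3], [3, 1]
H, D = 4, 8
q = rng.standard_normal((sum(lengths_x), H, D))
k = rng.standard_normal((sum(lengths_mem), H, D))
v = rng.standard_normal((sum(lengths_mem), H, D))
out = masked_attention(q, k, v, lengths_x, lengths_mem)
print(out.shape)            # (2, 3, 4, 8)
print(np.isnan(out).any())  # False
```

Comparing against `arange` replaces the per-graph Python loop with a fixed number of array operations. The final `np.where` zeroes the weights of fully masked rows, so padded query positions produce zero outputs instead of NaN.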

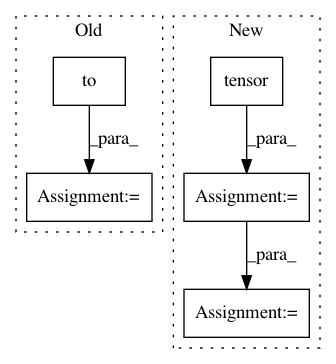

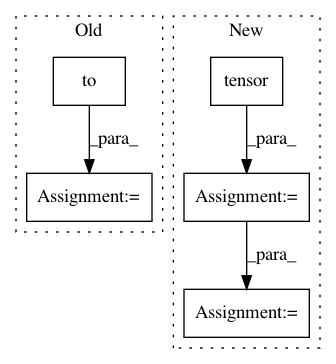

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 5

Instances

| Project | Commit | Date | Author | File | Class | Method |
|---|---|---|---|---|---|---|
| dmlc/dgl | 174dd18905f12bfe0b418a4bad3575e6331478a4 | 2021-02-22 | expye@outlook.com | `python/dgl/nn/pytorch/glob.py` | `MultiHeadAttention` | `forward` |
| allenai/allennlp | 87a61ad92a9e0129e5c81c242f0ea96d77e6b0af | 2020-08-19 | akshita23bhagia@gmail.com | `allennlp/training/metrics/attachment_scores.py` | `AttachmentScores` | `__call__` |
| arraiy/torchgeometry | 5c9356d3dbcd44c3cd7f833651a3b542250c2699 | 2020-11-30 | edgar.riba@gmail.com | `test/enhance/test_core.py` | `TestAddWeighted` | `test_gradcheck` |
| allenai/allennlp | 0a456a7582da2ab4271756d7775bba84a75c8c0d | 2020-08-17 | eladsegal@users.noreply.github.com | `allennlp/training/metrics/categorical_accuracy.py` | `CategoricalAccuracy` | `__call__` |