2d25e783797d0918e30de45ab009c35e847fe20b,parlai/scripts/multiprocessing_train.py,,multiprocess_train,#Any#Any#Any#Any#Any#Any#,29

Before Change

with distributed_utils.override_print(suppress_output, print_prefix):

    # perform distributed setup, ensuring all hosts are ready

    torch.cuda.set_device(opt["gpu"])

    dist.init_process_group(

        backend="nccl",

        init_method="tcp://{}:{}".format(hostname, port),

        world_size=opt["distributed_world_size"],

After Change

with distributed_utils.override_print(suppress_output, print_prefix):

    # perform distributed setup, ensuring all hosts are ready

    if opt["gpu"] != -1:

        torch.cuda.set_device(opt["gpu"])

    dist.init_process_group(

        backend="nccl",

        init_method="tcp://{}:{}".format(hostname, port),

        world_size=opt["distributed_world_size"],
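The change wraps the device selection in a guard so that it is skipped when `opt["gpu"]` is `-1`. The sketch below isolates that guard so it can be run without PyTorch. It assumes that `-1` marks a run with no GPU, which the snippet suggests but does not state. The names `maybe_set_device`, `CPU_ONLY`, and `calls` are hypothetical and do not appear in the original commit; `calls.append` stands in for `torch.cuda.set_device`.

```python
from typing import Callable, Dict, List

# Assumption: -1 is the sentinel for "no GPU", as the guard in the snippet implies.
CPU_ONLY = -1


def maybe_set_device(opt: Dict[str, int], set_device: Callable[[int], None]) -> bool:
    """Call set_device(opt["gpu"]) unless the option holds the sentinel value.

    Returns True when a device was selected and False when the call was skipped.
    """
    if opt["gpu"] != CPU_ONLY:
        set_device(opt["gpu"])
        return True
    return False


# Hypothetical stand-in for torch.cuda.set_device: it only records its argument.
calls: List[int] = []
selected_gpu = maybe_set_device({"gpu": 1}, calls.append)
selected_cpu = maybe_set_device({"gpu": -1}, calls.append)
```

In real training code the second argument would be `torch.cuda.set_device`. Passing the setter in as a parameter is only a convenience for exercising the guard on a machine without CUDA.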

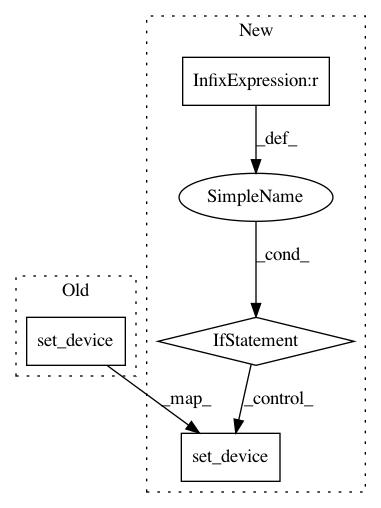

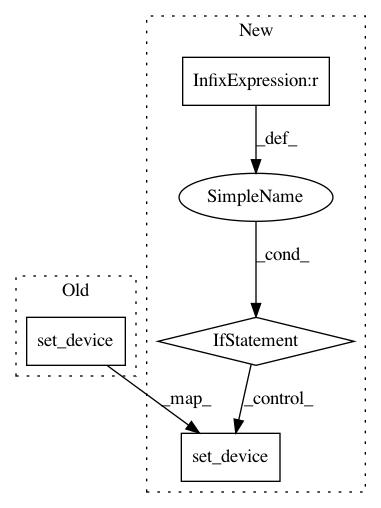

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: facebookresearch/ParlAI

Commit Name: 2d25e783797d0918e30de45ab009c35e847fe20b

Time: 2020-03-23

Author: roller@fb.com

File Name: parlai/scripts/multiprocessing_train.py

Class Name:

Method Name: multiprocess_train

Project Name: dmlc/dgl

Commit Name: 993fd3f94baff137216d2b16dade638f3b6c99c3

Time: 2019-06-08

Author: qh384@nyu.edu

File Name: python/dgl/backend/pytorch/tensor.py

Class Name:

Method Name: copy_to

Project Name: allenai/allennlp

Commit Name: f27475aaeba87052e277df83beaadf002f4fd9da

Time: 2020-05-21

Author: dirkg@allenai.org

File Name: allennlp/commands/train.py

Class Name:

Method Name: _train_worker