aab3902d4a7d55f5a86058854adc36b8a12c873f,catalyst/dl/callbacks/base.py,OptimizerCallback,on_batch_end,#OptimizerCallback#Any#,202

Before Change

scaled_loss = self.fp16_grad_scale * loss.float()
scaled_loss.backward()
master_params = list(optimizer.param_groups[0]["params"])
model_params = list(
    filter(lambda p: p.requires_grad, model.parameters())
)
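The before-change snippet applies static loss scaling by hand. It multiplies the fp32 loss by a fixed `fp16_grad_scale` before `backward()`, then gathers the optimizer's parameters as "master" copies alongside the trainable model parameters. The rest of the method is not shown, so the following is only a minimal sketch of why the scale matters. It assumes the usual follow-up: gradients are copied into the master copies and divided by the scale. Plain Python floats (double precision) stand in for the tensors, fp16 rounding is simulated with `struct`'s `e` format, and the helper names `to_fp16`, `scaled_fp16_grads` and `unscale_to_master` are hypothetical.

```python
import math
import struct
from typing import List, Optional


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value.

    struct's "e" format raises OverflowError for finite values that do not
    fit in fp16; map those to +/-inf, as a real fp16 cast would.
    """
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def scaled_fp16_grads(raw_grads: List[float], loss_scale: float) -> List[float]:
    """Simulate backward() on a scaled loss: by linearity every gradient is
    multiplied by loss_scale, then stored in fp16 like the model's params."""
    return [to_fp16(g * loss_scale) for g in raw_grads]


def unscale_to_master(fp16_grads: List[float], loss_scale: float) -> Optional[List[float]]:
    """Copy fp16 gradients into higher-precision "master" gradients and undo
    the scale. Returns None if any gradient is inf/NaN, meaning the optimizer
    step should be skipped."""
    if not all(math.isfinite(g) for g in fp16_grads):
        return None
    return [g / loss_scale for g in fp16_grads]


raw = [1e-8, 0.5]  # hypothetical true gradients
print(scaled_fp16_grads(raw, 1.0))  # [0.0, 0.5]: 1e-8 underflows in fp16
print(unscale_to_master(scaled_fp16_grads(raw, 1024.0), 1024.0))  # first entry is about 1e-8
print(unscale_to_master(scaled_fp16_grads([0.5], 2.0 ** 17), 2.0 ** 17))  # None: overflow
```

With a scale of 1.0, a gradient of `1e-8` underflows to zero in fp16. With a scale of 1024 it survives and is recovered to roughly `1e-8` after unscaling. A scale that is too large (here `2**17` against a gradient of `0.5`) overflows fp16 instead. This is why dynamic schemes such as apex's `amp` lower the scale and skip the step when they see `inf` or `NaN`.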

After Change

# or expose another c'tor argument.
if hasattr(optimizer, "_amp_stash"):
    from apex import amp
    with amp.scale_loss(loss, optimizer) as scaled_loss:
        scaled_loss.backward()
else:
    loss.backward()
if (self._accumulation_counter + 1) % self.accumulation_steps == 0:
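The after-change snippet drops the hand-written loss scaling. When the optimizer carries an `_amp_stash` attribute (as far as we can tell, apex attaches it to optimizers passed through `amp.initialize`), `backward()` is routed through apex's `amp.scale_loss` context manager. Otherwise it calls `loss.backward()` directly. It then tests `(self._accumulation_counter + 1) % self.accumulation_steps == 0`, the usual gradient-accumulation condition. The body of that `if` is cut off, so the sketch below only assumes the common pattern: gradients from successive batches add up, as `backward()` does into `.grad`, and the optimizer steps and zeroes them on every `accumulation_steps`-th batch. A single scalar parameter and plain SGD stand in for the model and optimizer. `accumulate_and_step` is a hypothetical name.

```python
from typing import List, Tuple


def accumulate_and_step(
    batch_grads: List[float],
    accumulation_steps: int,
    lr: float,
    param: float = 0.0,
) -> Tuple[float, List[int]]:
    """Hypothetical helper: SGD on one scalar parameter with gradient
    accumulation over `accumulation_steps` batches.

    Returns the final parameter value and the zero-based batch indices
    at which an optimizer step was taken.
    """
    if accumulation_steps < 1:
        raise ValueError("accumulation_steps must be >= 1")
    accumulated = 0.0  # plays the role of param.grad
    step_batches: List[int] = []
    for counter, grad in enumerate(batch_grads):
        accumulated += grad  # backward() adds into .grad rather than overwriting it
        # Same condition as the snippet, with `counter` standing in for
        # self._accumulation_counter.
        if (counter + 1) % accumulation_steps == 0:
            param -= lr * accumulated  # optimizer.step()
            accumulated = 0.0  # optimizer.zero_grad()
            step_batches.append(counter)
    # Any gradient still in `accumulated` here has not been applied yet.
    return param, step_batches


final_param, steps = accumulate_and_step([1.0, 2.0, 3.0, 4.0, 5.0], accumulation_steps=2, lr=0.1)
print(steps)  # [1, 3]
print(round(final_param, 6))  # -1.0
```

With gradients `[1, 2, 3, 4, 5]` and `accumulation_steps=2`, steps happen after batches 1 and 3 (zero-based), and the fifth gradient stays pending until the next step. Dividing the loss by `accumulation_steps`, so that the step averages rather than sums the batch gradients, is a separate choice that the snippet does not show.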

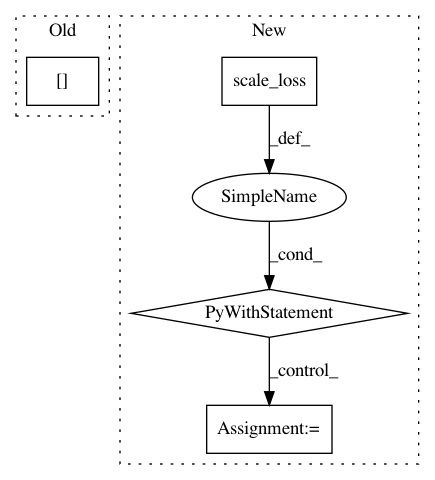

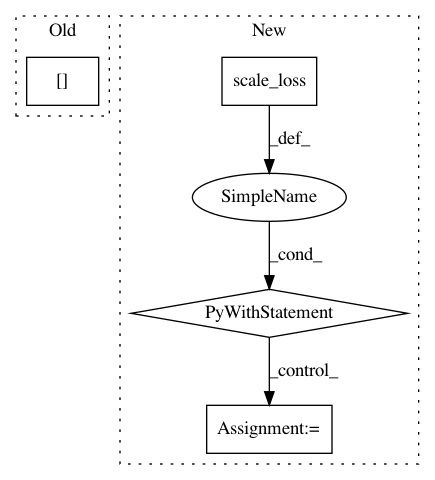

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: Scitator/catalyst

Commit Name: aab3902d4a7d55f5a86058854adc36b8a12c873f

Time: 2019-05-20

Author: ekhvedchenya@gmail.com

File Name: catalyst/dl/callbacks/base.py

Class Name: OptimizerCallback

Method Name: on_batch_end

Project Name: layumi/Person_reID_baseline_pytorch

Commit Name: 4888cb7a5299f7eed9214ddc70871dc05ea0f4d2

Time: 2019-03-20

Author: zdzheng12@gmail.com

File Name: model/ft_ResNet50/train.py

Class Name:

Method Name: train_model

Project Name: layumi/Person_reID_baseline_pytorch

Commit Name: 8d57309574a957dd56e14bd9ed961542d34f1d04

Time: 2019-03-09

Author: zdzheng12@gmail.com

File Name: train.py

Class Name:

Method Name: train_model