733f571ff1c5297f798d2829bb4a6fc6e3f3170b,deeplift/models.py,Model,_set_scoring_mode_for_target_layer,#Model#Any#,125

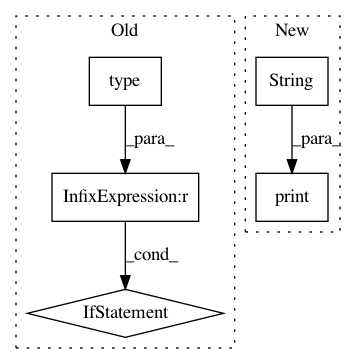

Before Change

```python
        deeplift.util.assert_is_type(final_activation_layer,
                                     layers.Activation,
                                     "final_activation_layer")
        final_activation_type = type(final_activation_layer).__name__

        if (final_activation_type == "Sigmoid"):
            scoring_mode=ScoringMode.OneAndZeros
        elif (final_activation_type == "Softmax"):
            # new_W, new_b =\
            #     deeplift.util.get_mean_normalised_softmax_weights(
            #         target_layer.W, target_layer.b)
            # The weights need to be mean normalised before they are
            # passed in because build_fwd_pass_vars() has already
            # been called before this function is called,
            # because get_output_layers() (used in this function)
            # is updated during the build_fwd_pass_vars()
            # call - that is why I can't simply mean-normalise
            # the weights right here :-( (It is a pain and a
            # recipe for bugs to rebuild the forward pass
            # vars after they have already been built - in
            # particular for a model that branches because where
            # the branches unify you really want them to be
            # using the same symbolic variables - no use having
            # needlessly complicated/redundant graphs and if a node
            # is common to two outputs, so should its symbolic vars be.)
            # TODO: I should put in a "reset_fwd_pass" function and use
            # it to invalidate the _built_fwd_pass_vars cache and recompile
            # if (np.allclose(target_layer.W, new_W)==False):
            #     print("Consider mean-normalising softmax layer")
            # assert np.allclose(target_layer.b, new_b),\
            #     "Please mean-normalise weights and biases of softmax layer"
            scoring_mode=ScoringMode.OneAndZeros
        else:
            raise RuntimeError("Unsupported final_activation_type: "
                               +final_activation_type)
        target_layer.set_scoring_mode(scoring_mode)

    def save_to_yaml_only(self, file_name):
        raise NotImplementedError()
```

After Change

```python
            **kwargs)

    def _set_scoring_mode_for_target_layer(self, target_layer):
        print("TARGET LAYER SET TO "+str(target_layer.get_name()))
        if (deeplift.util.is_type(target_layer,
                                  layers.Activation)):
            raise RuntimeError("You set the target layer to an"
                               +" activation layer, which is unusual so I am"
```

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: kundajelab/deeplift

Commit Name: 733f571ff1c5297f798d2829bb4a6fc6e3f3170b

Time: 2020-01-07

Author: avanti.shrikumar@gmail.com

File Name: deeplift/models.py

Class Name: Model

Method Name: _set_scoring_mode_for_target_layer

Project Name: QUANTAXIS/QUANTAXIS

Commit Name: 3073997a3a8a754a5cc66a5a7e5dfdae00917f00

Time: 2017-11-13

Author: yutiansut@qq.com

File Name: QUANTAXIS/QAFetch/QATdx_adv.py

Class Name: QA_Tdx_Executor

Method Name: get_security_bar_concurrent

Project Name: deepmipt/DeepPavlov

Commit Name: f7062eca7c924ee2a58d1255a4efb06b31b63110

Time: 2017-12-26

Author: ol.gure@gmail.com

File Name: deeppavlov/models/intent_recognition/intent_keras/intent_model.py

Class Name: KerasIntentModel

Method Name: save
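The commented-out Softmax branch in the deeplift "Before Change" snippet refers to mean-normalising the softmax layer's weights and biases. The following is a minimal NumPy sketch of that idea, not deeplift's own `deeplift.util.get_mean_normalised_softmax_weights`: the helper name `mean_normalise_softmax_weights` and the `(n_inputs, n_classes)` weight layout (so that `logits = x @ W + b`) are assumptions for illustration. It shows why the normalisation is safe: subtracting each input unit's mean weight across classes, and the mean bias, shifts every logit in a row by the same amount, so the softmax outputs do not change.

```python
import numpy as np


def mean_normalise_softmax_weights(W, b):
    """Hypothetical helper: centre weights and biases across output classes.

    Assumes W has shape (n_inputs, n_classes) and b has shape (n_classes,),
    i.e. logits = x @ W + b. This layout is an illustrative assumption.
    """
    # Subtract each input unit's mean weight across the classes.
    new_W = W - W.mean(axis=1, keepdims=True)
    # Subtract the mean bias across the classes.
    new_b = b - b.mean()
    return new_W, new_b


def softmax(logits):
    # Subtract the row-wise max for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
W = rng.normal(size=(4, 3))  # 4 inputs, 3 classes
b = rng.normal(size=3)
x = rng.normal(size=(5, 4))  # batch of 5 examples

new_W, new_b = mean_normalise_softmax_weights(W, b)

# Each input's weights, and the biases, now sum to zero across classes.
print(np.allclose(new_W.sum(axis=1), 0.0), np.allclose(new_b.sum(), 0.0))
# Softmax outputs are unchanged: every logit in a row was shifted by the
# same scalar, x @ W.mean(axis=1) + b.mean(), and softmax ignores such shifts.
print(np.allclose(softmax(x @ W + b), softmax(x @ new_W + new_b)))
```

Because predictions are preserved, the normalisation can be applied to the model before conversion, which is what the snippet's messages ("Consider mean-normalising softmax layer", "Please mean-normalise weights and biases of softmax layer") ask the user to do.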