0ac2b33e8c63304a50db7d2b484368299706b58b,slm_lab/agent/net/mlp.py,MLPNet,training_step,#MLPNet#Any#Any#Any#Any#Any#,130

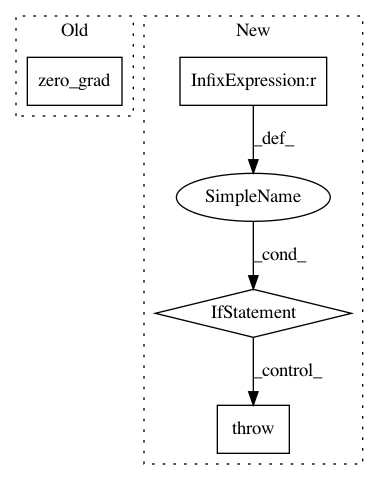

Before Change

For most RL usage, we have custom, often complicated, loss functions. Compute the loss value, put it in a PyTorch tensor, then pass it in as loss

"""

self.train()

self.zero_grad()

self.optim.zero_grad()

if loss is None:

out = self(x)

loss = self.loss_fn(out, y)

After Change

Takes a single training step: one forward and one backwards pass

For most RL usage, we have custom, often complicated, loss functions. Compute the loss value, put it in a PyTorch tensor, then pass it in as loss

"""

if hasattr(self, "model_tails") and x is not None:

raise ValueError("Loss computation from x,y not supported for multitails")

self.lr_scheduler.step(epoch=lr_t)

self.train()

self.optim.zero_grad()

if loss is None:

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances Project Name: kengz/SLM-Lab

Commit Name: 0ac2b33e8c63304a50db7d2b484368299706b58b

Time: 2018-11-14

Author: kengzwl@gmail.com

File Name: slm_lab/agent/net/mlp.py

Class Name: MLPNet

Method Name: training_step

Project Name: rusty1s/pytorch_geometric

Commit Name: 3735f4b48f52f7703944f36284b9e9ee3d1e8e5f

Time: 2020-10-27

Author: matthias.fey@tu-dortmund.de

File Name: examples/tgn.py

Class Name:

Method Name: train

Project Name: kengz/SLM-Lab

Commit Name: 0ac2b33e8c63304a50db7d2b484368299706b58b

Time: 2018-11-14

Author: kengzwl@gmail.com

File Name: slm_lab/agent/net/recurrent.py

Class Name: RecurrentNet

Method Name: training_step
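
For orientation, the shape of the `training_step` change recorded above (guard against multi-tail networks, then a single `self.optim.zero_grad()`, then either compute the loss from `x, y` or use a loss passed in) can be sketched roughly as follows. This is a minimal illustration, not the SLM-Lab implementation: `TinyNet`, its layer sizes, the SGD optimizer, and the `StepLR` schedule are hypothetical. The excerpt is cut off after `if loss is None:`, so the `backward()` and `optim.step()` calls are assumed from the docstring ("one forward and one backwards pass"). The excerpt calls `self.lr_scheduler.step(epoch=lr_t)` before the update; the sketch instead steps the scheduler after `optim.step()`, which is the order recent PyTorch releases expect.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for a network with a training_step method."""

    def __init__(self):
        super().__init__()
        self.model = nn.Linear(4, 2)
        self.loss_fn = nn.MSELoss()
        self.optim = torch.optim.SGD(self.parameters(), lr=0.1)
        # Assumed schedule; the excerpt does not say which scheduler is used.
        self.lr_scheduler = torch.optim.lr_scheduler.StepLR(
            self.optim, step_size=10, gamma=0.9)

    def forward(self, x):
        return self.model(x)

    def training_step(self, x=None, y=None, loss=None):
        # Guard from the "After Change" excerpt: multi-tail nets must pass a loss.
        if hasattr(self, "model_tails") and x is not None:
            raise ValueError(
                "Loss computation from x,y not supported for multitails")
        self.train()
        # One zero_grad call is enough when the optimizer holds all parameters.
        self.optim.zero_grad()
        if loss is None:
            out = self(x)
            loss = self.loss_fn(out, y)
        # Assumed continuation: backward pass and parameter update.
        loss.backward()
        self.optim.step()
        # Scheduler stepped after the optimizer (differs from the excerpt).
        self.lr_scheduler.step()
        return loss


torch.manual_seed(0)
net = TinyNet()
x, y = torch.randn(8, 4), torch.randn(8, 2)

# Supervised-style call: loss computed internally from x and y.
loss_xy = net.training_step(x=x, y=y)

# RL-style call: a custom loss is computed outside and passed in as a tensor.
custom_loss = (net(x) - y).abs().mean()
loss_custom = net.training_step(loss=custom_loss)
```

Dropping `self.zero_grad()` in favor of `self.optim.zero_grad()` alone does not change behavior as long as every trainable parameter of the module is registered with the optimizer, as it is in this sketch.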