c2165224d198450a3b4329ae099a772aa65d51c5,fairseq/models/levenshtein_transformer.py,LevenshteinTransformerModel,forward_decoder,#LevenshteinTransformerModel#Any#Any#Any#Any#,318

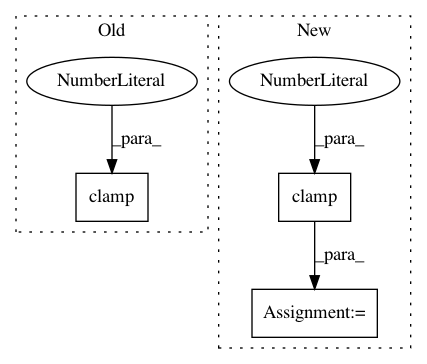

Before Change

    if max_ratio is None:
        max_lens = output_tokens.new(output_tokens.size(0)).fill_(255)
    else:
        max_lens = (
            (~encoder_out["encoder_padding_mask"]).sum(1) * max_ratio
        ).clamp(min=10)

    # delete words
    # do not delete tokens if it is <s> </s>
    can_del_word = output_tokens.ne(self.pad).sum(1) > 2

After Change

        src_lens = encoder_out["encoder_out"].new(bsz).fill_(max_src_len)
    else:
        src_lens = (~encoder_out["encoder_padding_mask"]).sum(1)
    max_lens = (src_lens * max_ratio).clamp(min=10).long()

    # delete words
    # do not delete tokens if it is <s> </s>
    can_del_word = output_tokens.ne(self.pad).sum(1) > 2

In pattern: SUPERPATTERN

Frequency: 5

Non-data size: 3
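
The length arithmetic in the After Change snippet can be followed without tensors. The sketch below is a plain-Python illustration, not the fairseq code: `source_lengths`, `max_target_lengths`, and the `floor` parameter are hypothetical names introduced here, and lists stand in for the batched tensors. It assumes the padding mask is `True` at padded positions, as the `~` in the snippet suggests.

```python
def source_lengths(padding_mask):
    """Count non-padding positions per sentence, like (~mask).sum(1)."""
    # padding_mask[i][j] is True where position j of sentence i is padding.
    return [sum(not is_pad for is_pad in row) for row in padding_mask]


def max_target_lengths(src_lens, max_ratio, floor=10):
    """Scale each source length, apply a lower bound, truncate to an integer.

    Mirrors the order of operations in
    (src_lens * max_ratio).clamp(min=10).long().
    """
    return [int(max(n * max_ratio, floor)) for n in src_lens]


mask = [
    [False, False, False, True],   # 3 real tokens, 1 pad
    [False, False, False, False],  # 4 real tokens
]
src_lens = source_lengths(mask)
print(src_lens)                                    # [3, 4]
print(max_target_lengths(src_lens, max_ratio=3.0)) # [10, 12]
```

The final integer conversion corresponds to the `.long()` call that the change adds: multiplying integer lengths by a fractional ratio produces non-integer values, and the result is used as a length limit.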

Instances

Project Name: elbayadm/attn2d

Commit Name: c2165224d198450a3b4329ae099a772aa65d51c5

Time: 2019-10-08

Author: changhan@fb.com

File Name: fairseq/models/levenshtein_transformer.py

Class Name: LevenshteinTransformerModel

Method Name: forward_decoder

Project Name: facebookresearch/pytext

Commit Name: 78c4f927b3041914694399b5532ac532d8967114

Time: 2019-02-26

Author: mikaell@fb.com

File Name: pytext/loss/loss.py

Class Name: KLDivergenceCELoss

Method Name: __call__

Project Name: cornellius-gp/gpytorch

Commit Name: e5bdc34d07aeabc47f3541a786ae85c74d7b2549

Time: 2018-02-12

Author: gpleiss@gmail.com

File Name: gpytorch/kernels/matern_kernel.py

Class Name: MaternKernel

Method Name: forward

Project Name: ixaxaar/pytorch-dnc

Commit Name: e686d240eef67d6a6595ebff11ba79e6e163adcf

Time: 2017-12-15

Author: root@ixaxaar.in

File Name: dnc/sparse_memory.py

Class Name: SparseMemory

Method Name: read_from_sparse_memory