3b27baf2719698ffe600ff3d33b10c04d2e39f33,solutions/set_expansion/prepare_data.py,,,#,29

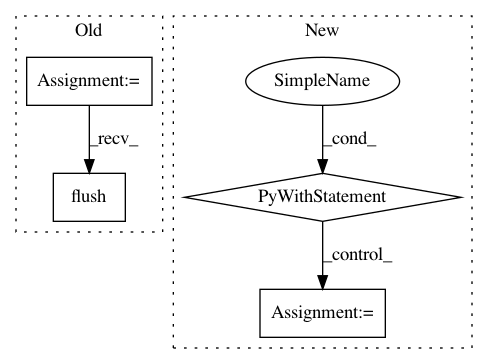

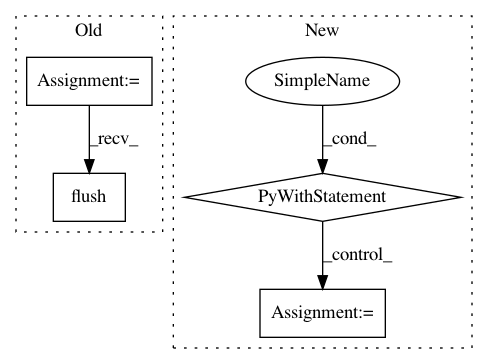

Before Change

else:

corpus_file = open(args.corpus, "r", encoding="utf8")

marked_corpus_file = open(args.marked_corpus, "w", encoding="utf8")

// spacy NP extractor

logger.info("loading spacy")

nlp = spacy.load("en_core_web_sm", disable=["textcat", "parser" "ner"])

logger.info("spacy loaded")

num_lines = sum(1 for line in corpus_file)

corpus_file.seek(0)

logger.info("%i lines in corpus", num_lines)

i = 0

for doc in nlp.pipe(corpus_file):

spans = list()

for p in doc.noun_chunks:

spans.append(p)

i += 1

if len(spans) > 0:

span = spans.pop(0)

else:

span = None

spanWritten = False

for token in doc:

if span is None:

if len(token.text.strip()) > 0:

marked_corpus_file.write(token.text + " ")

else:

if token.idx < span.start_char or token.idx >= span.end_char: // outside a

// span

if len(token.text.strip()) > 0:

marked_corpus_file.write(token.text + " ")

else:

if not spanWritten:

// mark NP"s

if len(span.text) > 1 and span.lemma_ != "-PRON-":

text = span.text.replace(" ", args.mark_char) + args.mark_char

marked_corpus_file.write(text + " ")

else:

marked_corpus_file.write(span.text + " ")

spanWritten = True

if token.idx + len(token.text) == span.end_char:

if len(spans) > 0:

span = spans.pop(0)

else:

span = None

spanWritten = False

marked_corpus_file.write("\n")

if i % 500 == 0:

logger.info("%i of %i lines", i, num_lines)

corpus_file.close()

marked_corpus_file.flush()

marked_corpus_file.close()

After Change

else:

corpus_file = open(args.corpus, "r", encoding="utf8")

with open(args.marked_corpus, "w", encoding="utf8") as marked_corpus_file:

// spacy NP extractor

logger.info("loading spacy")

nlp = spacy.load("en_core_web_sm", disable=["textcat", "parser" "ner"])

logger.info("spacy loaded")

num_lines = sum(1 for line in corpus_file)

corpus_file.seek(0)

logger.info("%i lines in corpus", num_lines)

i = 0

for doc in nlp.pipe(corpus_file):

spans = list()

for p in doc.noun_chunks:

spans.append(p)

i += 1

if len(spans) > 0:

span = spans.pop(0)

else:

span = None

spanWritten = False

for token in doc:

if span is None:

if len(token.text.strip()) > 0:

marked_corpus_file.write(token.text + " ")

else:

if token.idx < span.start_char or token.idx >= span.end_char: // outside a

// span

if len(token.text.strip()) > 0:

marked_corpus_file.write(token.text + " ")

else:

if not spanWritten:

// mark NP"s

if len(span.text) > 1 and span.lemma_ != "-PRON-":

text = span.text.replace(" ", args.mark_char) + args.mark_char

marked_corpus_file.write(text + " ")

else:

marked_corpus_file.write(span.text + " ")

spanWritten = True

if token.idx + len(token.text) == span.end_char:

if len(spans) > 0:

span = spans.pop(0)

else:

span = None

spanWritten = False

marked_corpus_file.write("\n")

if i % 500 == 0:

logger.info("%i of %i lines", i, num_lines)

corpus_file.close()

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: NervanaSystems/nlp-architect

Commit Name: 3b27baf2719698ffe600ff3d33b10c04d2e39f33

Time: 2018-07-16

Author: jonathan.mamou@intel.com

File Name: solutions/set_expansion/prepare_data.py

Class Name:

Method Name:

Project Name: probcomp/bayeslite

Commit Name: 6670ca8b881a7a5f094bc12b7705cb45242c77ab

Time: 2015-06-29

Author: riastradh+probcomp@csail.mit.edu

File Name: tests/test_codebook.py

Class Name:

Method Name: test_codebook_value_map

Project Name: Pinafore/qb

Commit Name: 3ada8bc8fb33a7ee25939328babd40ecb6137cf8

Time: 2017-05-12

Author: sjtufs@gmail.com

File Name: qanta/buzzer/hyper_search.py

Class Name:

Method Name: hyper_search

Project Name: nilearn/nilearn

Commit Name: a0d70d5a13d771ba944b4cf2a1c32226eafa393b

Time: 2015-11-04

Author: alexandre.abadie@inria.fr

File Name: nilearn/tests/test_numpy_conversions.py

Class Name:

Method Name: test_csv_to_array