161ae41bebc73c146627169f761e3c4ddf83e5d4,pixyz/losses/losses.py,Divergence,__init__,#Divergence#Any#Any#Any#,237

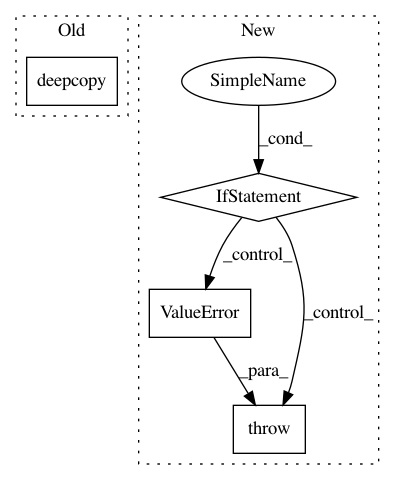

Before Change

if input_var is not None:
    _input_var = deepcopy(input_var)
else:
    _input_var = deepcopy(p.input_var)
    if q is not None:
        _input_var += deepcopy(q.input_var)

After Change

>>> p = Generator()
>>> q = Inference()
>>> prior = Normal(loc=torch.tensor(0.), scale=torch.tensor(1.),
...                var=["z"], features_shape=[64], name="p_{prior}")
...
>>> # Define a loss function (VAE)
>>> reconst = -p.log_prob().expectation(q)
>>> kl = KullbackLeibler(q, prior)
>>> loss_cls = (reconst - kl).mean()

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances Project Name: masa-su/pixyz

Commit Name: 161ae41bebc73c146627169f761e3c4ddf83e5d4

Time: 2020-10-26

Author: kaneko@weblab.t.u-tokyo.ac.jp

File Name: pixyz/losses/losses.py

Class Name: Divergence

Method Name: __init__

Project Name: tensorflow/models

Commit Name: e1799db4a196fc6bf50561c557d39bf1e989bf6a

Time: 2020-02-27

Author: yeqing@google.com

File Name: official/modeling/hyperparams/base_config.py

Class Name: Config

Method Name: __setattr__

Project Name: mynlp/ccg2lambda

Commit Name: e1efd3c7338564009712a8385db123b929be178d

Time: 2017-05-30

Author: pascual@nii.ac.jp

File Name: scripts/ccg2lambda_tools.py

Class Name:

Method Name: assign_semantics_to_ccg