297e14732849f2b8025de6e2bf80ac05cab82b44,src/gluonnlp/data/corpora/google_billion_word.py,GBWStream,__init__,#GBWStream#Any#Any#Any#Any#Any#,133

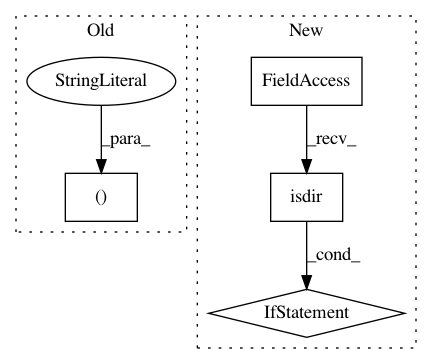

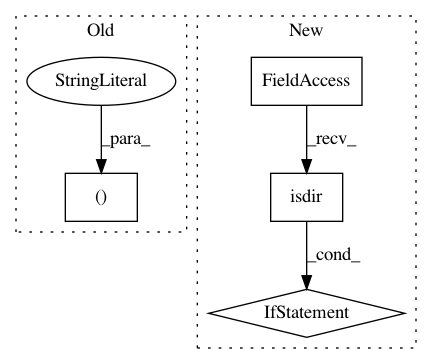

Before Change

    self._data_file = {"train": ("training-monolingual.tokenized.shuffled",
                                 "news.en-00*-of-00100",
                                 "5e0d7050b37a99fd50ce7e07dc52468b2a9cd9e8"),
                       "test": ("heldout-monolingual.tokenized.shuffled",
                                "news.en.heldout-00000-of-00050",
                                "0a8e2b7496ba0b5c05158f282b9b351356875445")}
    self._vocab_file = ("gbw-ebb1a287.vocab",
                        "ebb1a287ca14d8fa6f167c3a779e5e7ed63ac69f")
    super(GBWStream, self).__init__("gbw", segment, bos, eos, skip_empty, root)

After Change

    def __init__(self, segment="train", skip_empty=True, bos=None, eos=EOS_TOKEN,
                 root=os.path.join(get_home_dir(), "datasets", "gbw")):
        root = os.path.expanduser(root)
        if not os.path.isdir(root):
            os.makedirs(root)
        self._root = root
        self._dir = os.path.join(root, "1-billion-word-language-modeling-benchmark-r13output")
        self._namespace = "gluon/dataset/gbw"
        subdir_name, pattern, data_hash = self._data_file[segment]
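The root-directory handling in the "After Change" snippet can be tried in isolation. The following is a minimal sketch, not the gluon-nlp implementation: `DATA_FILE` and `prepare_root` are hypothetical names, and joining the benchmark directory with `subdir_name` is an assumption about how the unpacked values are used later, since the snippet stops at the unpacking step.

```python
import os

# Hypothetical module-level copy of the segment table from the
# "Before Change" snippet: (subdirectory, glob pattern, SHA-1 hash).
DATA_FILE = {
    "train": ("training-monolingual.tokenized.shuffled",
              "news.en-00*-of-00100",
              "5e0d7050b37a99fd50ce7e07dc52468b2a9cd9e8"),
    "test": ("heldout-monolingual.tokenized.shuffled",
             "news.en.heldout-00000-of-00050",
             "0a8e2b7496ba0b5c05158f282b9b351356875445"),
}


def prepare_root(root, segment="train"):
    """Expand and create `root`, then resolve the entry for `segment`.

    Returns (segment_dir, pattern, data_hash). Hypothetical helper; the
    segment_dir layout is an assumption, not confirmed by the snippet.
    """
    root = os.path.expanduser(root)      # resolve a leading "~"
    os.makedirs(root, exist_ok=True)     # same effect as the isdir/makedirs pair
    data_dir = os.path.join(
        root, "1-billion-word-language-modeling-benchmark-r13output")
    subdir_name, pattern, data_hash = DATA_FILE[segment]
    return os.path.join(data_dir, subdir_name), pattern, data_hash
```

Calling `prepare_root("~/datasets/gbw", "test")` would create the root directory if it is missing and return the held-out segment's directory, file pattern, and hash. Using `exist_ok=True` avoids the race between the `isdir` check and `makedirs` when several processes start at once.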

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: dmlc/gluon-nlp

Commit Name: 297e14732849f2b8025de6e2bf80ac05cab82b44

Time: 2019-11-27

Author: lausen@amazon.com

File Name: src/gluonnlp/data/corpora/google_billion_word.py

Class Name: GBWStream

Method Name: __init__

Project Name: dPys/PyNets

Commit Name: 8eaf34c57abdec5e4dffa0838a82ac5f52715275

Time: 2019-10-12

Author: dpisner@utexas.edu

File Name: pynets/plotting/plot_gen.py

Class Name:

Method Name: plot_graph_measure_hists

Project Name: deepinsight/insightface

Commit Name: 8df5470cf8ce9f1a3b7e34fd6bd6b24be42523d8

Time: 2018-01-25

Author: guojia@gmail.com

File Name: src/data/dataset_merge.py

Class Name:

Method Name: main

Project Name: deepinsight/insightface

Commit Name: d92a6bcda0fce7a629563bac912d5fbc05ec2aa5

Time: 2018-01-25

Author: guojia@gmail.com

File Name: src/data/dataset_merge.py

Class Name:

Method Name: main