75eeb9825e331581839ad647acd15a40cd250c35,tensorforce/value_functions/deep_q_network.py,DeepQNetwork,create_training_operations,#DeepQNetwork#,95

Before Change

with tf.name_scope("predict"):
    self.dqn_action = tf.argmax(self.training_network(self.state), dimension=1, name="dqn_action")

with tf.name_scope("target_values"):
    self.target_values = tf.reduce_max(self.target_network(self.next_states), reduction_indices=1, name="target_values")

with tf.name_scope("training"):
    self.q_targets = tf.placeholder("float32", [None], name="batch_q_targets")
    self.batch_actions = tf.placeholder("int64", [None], name="batch_actions")
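Before the change, the two inference ops reduce to a row-wise argmax and a row-wise max over a batch of Q-values. `dqn_action` is the greedy action per state, and `target_values` is the largest target-network Q-value per next state. The following is a minimal pure-Python sketch of that arithmetic. It assumes the Q-values arrive as a list of per-state lists. The helper names `greedy_actions` and `max_q_values` are hypothetical and are not part of tensorforce.

```python
from typing import List


def greedy_actions(q_values: List[List[float]]) -> List[int]:
    """Row-wise argmax: index of the highest Q-value for each state.

    Plays the role of tf.argmax(..., dimension=1) for dqn_action.
    On ties this picks the first maximal index.
    """
    return [max(range(len(row)), key=row.__getitem__) for row in q_values]


def max_q_values(q_values: List[List[float]]) -> List[float]:
    """Row-wise max: highest Q-value for each state.

    Plays the role of tf.reduce_max(..., reduction_indices=1) for target_values.
    """
    return [max(row) for row in q_values]


if __name__ == "__main__":
    batch = [[0.1, 0.7, 0.2], [1.5, -0.3, 0.9]]
    print(greedy_actions(batch))  # [1, 0]
    print(max_q_values(batch))    # [0.7, 1.5]
```

In this version the Q-learning targets were fed in from outside the graph through the `batch_q_targets` placeholder.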

After Change

self.batch_next_states = tf.placeholder(tf.float32, None, name="next_states")
self.batch_actions = tf.placeholder(tf.int64, [None], name="batch_actions")
self.batch_terminals = tf.placeholder(tf.float32, [None], name="batch_terminals")
self.batch_rewards = tf.placeholder(tf.float32, [None], name="batch_rewards")

float_terminals = np.array(self.batch_terminals, dtype=float)

self.target_values = tf.reduce_max(self.target_network(self.batch_next_states), reduction_indices=1,
                                   name="target_values")

q_targets = self.batch_rewards + (1. - float_terminals) * self.gamma * self.target_values

actions_one_hot = tf.one_hot(self.batch_actions, self.actions, 1.0, 0.0)

self.batch_q_values = tf.identity(self.training_network(self.batch_states), name="batch_q_values")
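After the change, the target is computed from the fed rewards, terminal flags, and next states as y = r + (1 - terminal) * gamma * max_a' Q_target(s', a'). `actions_one_hot` is the mask that selects Q(s, a) out of `batch_q_values`. The sketch below mirrors that arithmetic in plain Python with these assumptions:

- Terminal flags are 0.0/1.0 floats, like the `batch_terminals` placeholder.
- The function names are hypothetical.
- The mean-squared-error loss is an illustrative assumption, since the excerpt stops before any loss is defined.

```python
from typing import List


def q_targets(rewards: List[float], terminals: List[float],
              next_q_values: List[List[float]], gamma: float) -> List[float]:
    """y = r + (1 - terminal) * gamma * max_a' Q_target(s', a').

    A terminal flag of 1.0 zeroes the bootstrapped term, leaving only the reward.
    """
    return [
        r + (1.0 - t) * gamma * max(q_next)
        for r, t, q_next in zip(rewards, terminals, next_q_values)
    ]


def one_hot(actions: List[int], num_actions: int) -> List[List[float]]:
    """Same on/off values (1.0 / 0.0) as tf.one_hot(actions, num_actions, 1.0, 0.0)."""
    return [[1.0 if a == i else 0.0 for i in range(num_actions)] for a in actions]


def selected_q_values(q_values: List[List[float]], actions: List[int]) -> List[float]:
    """Q(s, a) for the taken action: sum over actions of Q * one_hot mask."""
    masks = one_hot(actions, len(q_values[0]))
    return [sum(q * m for q, m in zip(row, mask)) for row, mask in zip(q_values, masks)]


def mse_loss(targets: List[float], predictions: List[float]) -> float:
    """Hypothetical loss: mean squared error between targets and selected Q-values."""
    return sum((y - p) ** 2 for y, p in zip(targets, predictions)) / len(targets)


if __name__ == "__main__":
    # Illustrative batch of two transitions; the second one is terminal.
    y = q_targets([1.0, 0.0], [0.0, 1.0], [[0.5, 2.0], [3.0, 1.0]], gamma=0.5)
    q = selected_q_values([[1.0, 1.5], [0.25, -1.0]], actions=[1, 0])
    print(y)               # [2.0, 0.0]
    print(q)               # [1.5, 0.25]
    print(mse_loss(y, q))  # 0.15625
```

The example uses gamma = 0.5 only so the arithmetic stays exact; the record's `self.gamma` value is not shown.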

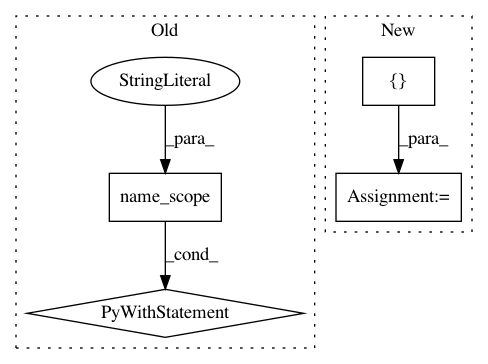

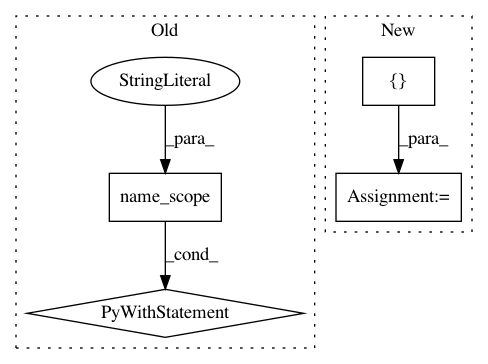

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: reinforceio/tensorforce

Commit Name: 75eeb9825e331581839ad647acd15a40cd250c35

Time: 2016-10-30

Author: mks40@cam.ac.uk

File Name: tensorforce/value_functions/deep_q_network.py

Class Name: DeepQNetwork

Method Name: create_training_operations

Project Name: rlworkgroup/garage

Commit Name: c4020b5d3ab637e1d6e8c2bcd96c955a8f1db45b

Time: 2019-04-17

Author: CatherineSue@users.noreply.github.com

File Name: garage/tf/algos/npo.py

Class Name: NPO

Method Name: _build_policy_loss

Project Name: MorvanZhou/tutorials

Commit Name: 780dcd9fd372afa8524a6515eec6a4c90b1494c9

Time: 2017-03-09

Author: morvanzhou@gmail.com

File Name: Reinforcement_learning_TUT/8_Actor_Critic_Advantage/AC_CartPole.py

Class Name: Actor

Method Name: __init__