0e3dc773bfe1cc74e3c72192c5dc6fbf63864d08,train.py,,,#,132

Before Change

your_images_path = config.DATA.your_images_path
your_annos_path = config.DATA.your_annos_path
your_data = PoseInfo(your_images_path, your_annos_path, False)
your_imgs_file_list = your_data.get_image_list()
your_objs_info_list = your_data.get_joint_list()
your_mask_list = your_data.get_mask()
if len(your_imgs_file_list) != len(your_objs_info_list):
    raise Exception("number of customized images and annotations do not match")
else:
    print("number of customized images {}".format(len(your_imgs_file_list)))

# choose the dataset for training
# 1. only the COCO training set
# imgs_file_list = train_imgs_file_list
# train_targets = list(zip(train_objs_info_list, train_mask_list))
# 2. your customized data from "data/your_data" plus the COCO training set
imgs_file_list = train_imgs_file_list + your_imgs_file_list
train_targets = list(zip(train_objs_info_list + your_objs_info_list, train_mask_list + your_mask_list))

# define data augmentation
def generator():
    """TF Dataset generator."""
    assert len(imgs_file_list) == len(train_targets)
    for _input, _target in zip(imgs_file_list, train_targets):
        # tf.string carries raw bytes, so the path is encoded and the target pickled
        yield _input.encode("utf-8"), cPickle.dumps(_target)

# from_generator is a static factory method, so call it on the class itself
dataset = tf.data.Dataset.from_generator(generator, output_types=(tf.string, tf.string))
dataset = dataset.map(_map_fn, num_parallel_calls=8)
dataset = dataset.shuffle(buffer_size=2046)
dataset = dataset.repeat(n_epoch)
dataset = dataset.batch(batch_size)
dataset = dataset.prefetch(buffer_size=20)
iterator = dataset.make_one_shot_iterator()
one_element = iterator.get_next()
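The generator above pickles each `(annotations, mask)` target into bytes so that `tf.data` can pass it through as a `tf.string`. `_map_fn`, which is not shown here, presumably unpickles it again. Below is a stdlib-only sketch of that round trip. It makes some assumptions: Python 3 `pickle` stands in for `cPickle`, the sample data is invented, and `decode` is a hypothetical helper for the first step a map function would take.

```python
import pickle
from typing import Iterator, List, Tuple

# Hypothetical sample data standing in for the real image paths and
# (joint list, mask) targets built earlier in train.py.
imgs_file_list: List[str] = ["a.jpg", "b.jpg"]
train_targets: List[tuple] = [([[(10, 20)]], ["mask_a"]), ([[(30, 40)]], ["mask_b"])]


def generator() -> Iterator[Tuple[bytes, bytes]]:
    """Yield (path bytes, pickled target bytes), mirroring the generator above."""
    assert len(imgs_file_list) == len(train_targets)
    for _input, _target in zip(imgs_file_list, train_targets):
        # Both fields become bytes because a tf.string tensor holds raw bytes.
        yield _input.encode("utf-8"), pickle.dumps(_target)


def decode(img_bytes: bytes, target_bytes: bytes) -> Tuple[str, tuple]:
    """Hypothetical first step of a map function: recover path and target."""
    return img_bytes.decode("utf-8"), pickle.loads(target_bytes)


# Usage: every decoded pair should equal the original (path, target) pair.
decoded = [decode(img, tgt) for img, tgt in generator()]
print(decoded)
```

Pickling keeps arbitrary Python structures intact through the pipeline. The cost is that the deserialization must happen in Python code inside the map step.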

if config.TRAIN.train_mode == "placeholder":
    ## Training with placeholders makes it easy to check the data.
    ## define model architecture
    x = tf.placeholder(tf.float32, [None, hin, win, 3], "image")
    confs = tf.placeholder(tf.float32, [None, hout, wout, n_pos], "confidence_maps")
    pafs = tf.placeholder(tf.float32, [None, hout, wout, n_pos * 2], "pafs")
    # if a person has no keypoint annotations, ignore that area
    img_mask1 = tf.placeholder(tf.float32, [None, hout, wout, n_pos], "img_mask1")
    img_mask2 = tf.placeholder(tf.float32, [None, hout, wout, n_pos * 2], "img_mask2")
    num_images = np.shape(imgs_file_list)[0]
    cnn, b1_list, b2_list, net = model(x, n_pos, img_mask1, img_mask2, True, False)
    ## define loss
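The `img_mask1`/`img_mask2` placeholders exist so that people without keypoint annotations are ignored. One common way to apply such masks is to multiply the prediction error elementwise by the mask before taking an L2 loss. That choice is an assumption here, not something the diff shows. The 0.5 factor follows the convention of `tf.nn.l2_loss`. Below is a framework-free sketch on flattened values, with a hypothetical `masked_l2_loss` function.

```python
from typing import List


def masked_l2_loss(pred: List[float], target: List[float], mask: List[float]) -> float:
    """Half the sum of squared masked errors (same scaling as tf.nn.l2_loss).

    Assumption: mask is 1.0 where keypoints are annotated and 0.0 where a
    person lacks annotations, so those positions contribute nothing.
    """
    assert len(pred) == len(target) == len(mask)
    # Multiply the error by the mask before squaring: ((pred - target) * mask) ** 2.
    return sum(((p - t) * m) ** 2 for p, t, m in zip(pred, target, mask)) / 2.0


# Usage: the third position is masked out, so its large error is ignored.
loss = masked_l2_loss([0.5, 0.25, 0.75], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0])
print(loss)
```

Masking the error rather than the prediction alone keeps unannotated regions from pushing the network toward either the target or zero.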

After Change

## 2. if you have a folder containing many sub-folders (which is common in industry)
folder_list = tl.files.load_folder_list(path="your_data")
your_imgs_file_list, your_objs_info_list, your_mask_list = [], [], []
for folder in folder_list:
    _imgs_file_list, _objs_info_list, _mask_list, _targets = \
        get_pose_data_list(os.path.join(folder, "images"), os.path.join(folder, "coco.json"))
    print(len(_imgs_file_list))
    # accumulate this folder's images, annotations and masks
    your_imgs_file_list.extend(_imgs_file_list)
    your_objs_info_list.extend(_objs_info_list)
    your_mask_list.extend(_mask_list)
print("number of customized images found:", len(your_imgs_file_list))
exit()  # terminates the script here, after the images have been counted

## choose the dataset for training
## 1. only the COCO training set
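The after-change loop walks every sub-folder of `your_data` and expects an `images` directory and a `coco.json` file in each. It then concatenates the per-folder lists. Below is a stdlib-only sketch of that aggregation pattern. `collect_folders` and the loader argument are hypothetical; the loader stands in for `get_pose_data_list`, whose real return values are not reproduced here.

```python
import os
import tempfile
from typing import Callable, Tuple

# A loader takes (images_dir, annotation_path) and returns (images, annotations, masks).
Loader = Callable[[str, str], Tuple[list, list, list]]


def collect_folders(root: str, load_folder: Loader) -> Tuple[list, list, list]:
    """Aggregate images, annotations and masks from every sub-folder of root."""
    imgs, objs, masks = [], [], []
    # Sorted so that the resulting order is deterministic.
    for name in sorted(os.listdir(root)):
        folder = os.path.join(root, name)
        if not os.path.isdir(folder):
            continue
        f_imgs, f_objs, f_masks = load_folder(os.path.join(folder, "images"),
                                              os.path.join(folder, "coco.json"))
        # Extend with this folder's lists, never with the accumulators themselves.
        imgs.extend(f_imgs)
        objs.extend(f_objs)
        masks.extend(f_masks)
    return imgs, objs, masks


def fake_loader(images_dir: str, anno_path: str) -> Tuple[list, list, list]:
    """Hypothetical loader: one placeholder annotation and mask per image file."""
    paths = [os.path.join(images_dir, n) for n in sorted(os.listdir(images_dir))]
    return paths, [None] * len(paths), [None] * len(paths)


# Usage: two fake sub-folders with 2 and 3 empty image files.
with tempfile.TemporaryDirectory() as root:
    for sub, count in (("cam1", 2), ("cam2", 3)):
        os.makedirs(os.path.join(root, sub, "images"))
        for i in range(count):
            open(os.path.join(root, sub, "images", f"{i}.jpg"), "w").close()
    imgs, objs, masks = collect_folders(root, fake_loader)
    print("number of customized images found:", len(imgs))
```

Passing the loader in as a parameter keeps the folder walk separate from the annotation format. Each folder's three lists then stay aligned by index.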

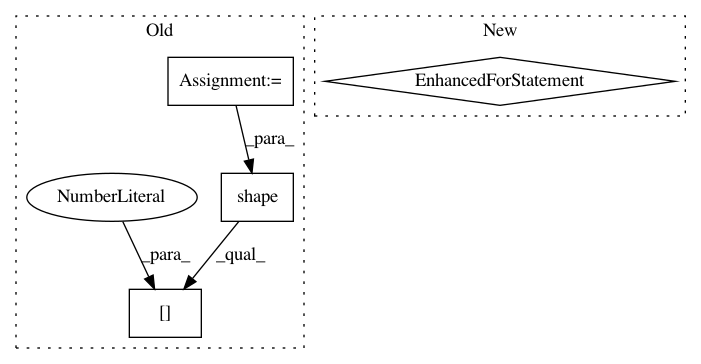

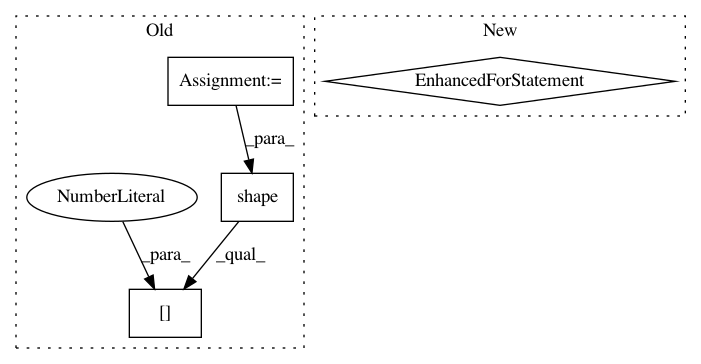

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: tensorlayer/openpose-plus

Commit Name: 0e3dc773bfe1cc74e3c72192c5dc6fbf63864d08

Time: 2018-08-28

Author: dhsig552@163.com

File Name: train.py

Class Name:

Method Name:

Project Name: tensorflow/ranking

Commit Name: 1271e900113a2730b8fec9db1e750b26db4b6af9

Time: 2019-09-18

Author: xuanhui@google.com

File Name: tensorflow_ranking/python/model.py

Class Name: _GroupwiseRankingModel

Method Name: _compute_logits_impl

Project Name: epfl-lts2/pygsp

Commit Name: a3e0d7eeb19be28d721b40746aea962c87e234a0

Time: 2015-01-27

Author: basile.chatillon@epfl.ch

File Name: pygsp/graphs.py

Class Name: NNGraph

Method Name: __init__