b87368e1e7fd832b505db9cc08015ac7af8f95de,VAE/main.py,,train,#Any#,94

Before Change

            loss = loss.data[0]
            optimizer.step()
            if i % 10 == 0:
                print("Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.4f}".format(
                    epoch,
                    i + BATCH_SIZE, training_data.size(0),
                    float(i + BATCH_SIZE) / training_data.size(0) * 100,
                    loss / BATCH_SIZE))

    def test(epoch):
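The arithmetic in the before-change progress line can be read as the pure-Python sketch below. It assumes `i` is the start index of the current batch and that `loss` holds the loss summed over the batch, so `loss / BATCH_SIZE` is a per-sample figure. The function name `progress_line` and the `BATCH_SIZE` value are hypothetical; the fragment does not show either.

```python
# Hypothetical batch size; the original constant's value is not shown.
BATCH_SIZE = 128


def progress_line(epoch, i, n_samples, batch_loss, batch_size=BATCH_SIZE):
    """Rebuild the before-change progress string for a batch starting at index i.

    Assumes batch_loss is summed over the batch, so dividing by batch_size
    yields a per-sample loss.
    """
    # Samples counted as seen after this batch; this can exceed n_samples
    # when the final batch is only partially filled.
    seen = i + batch_size
    pct = float(seen) / n_samples * 100
    return "Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.4f}".format(
        epoch, seen, n_samples, pct, batch_loss / batch_size)


print(progress_line(1, 0, 1000, 64.0, batch_size=128))
```

With these inputs the line reads `Epoch: 1 [128/1000 (13%)]` followed by a tab and `Loss: 0.5000`. Because the count is `i + BATCH_SIZE` rather than the true batch length, a final partial batch can report more than 100%. The `loss.data[0]` call in the fragment is the pre-0.4 PyTorch way of reading a scalar loss; later releases use `loss.item()`.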

After Change

        model.train()
        train_loss = 0
        for batch in train_loader:
            batch = Variable(batch)
            optimizer.zero_grad()
            recon_batch, mu, logvar = model(batch)
            loss = loss_function(recon_batch, batch, mu, logvar)
            loss.backward()
            train_loss += loss
            optimizer.step()

        print("====> Epoch: {} Loss: {:.4f}".format(
            epoch,

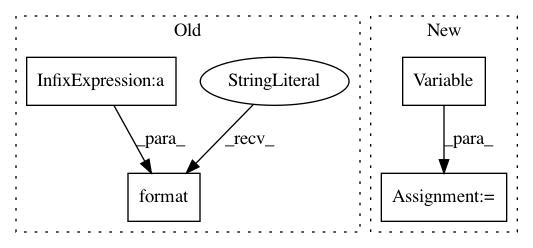

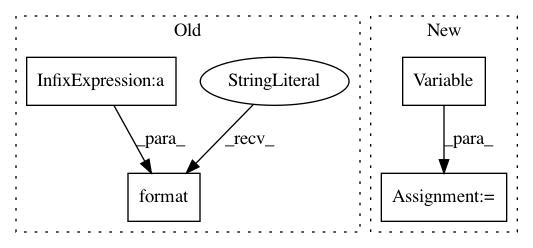
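The change replaces manual index stepping over a tensor with iteration over a loader, and replaces per-batch progress lines with one per-epoch summary. The pure-Python sketch below contrasts the two iteration styles under stated assumptions: `SimpleLoader` is a hypothetical stand-in for `torch.utils.data.DataLoader` (no shuffling, no workers), `batch_loss` is a hypothetical summed loss, and dividing by the number of samples is an assumed per-sample average, since the denominator in the after-change `print` is cut off in the fragment.

```python
from typing import Iterator, List, Sequence


def index_batches(data: Sequence[float], batch_size: int) -> Iterator[List[float]]:
    """Before-style iteration: step an index and slice the data manually."""
    for i in range(0, len(data), batch_size):
        yield list(data[i:i + batch_size])


class SimpleLoader:
    """Hypothetical stand-in for a DataLoader: yields consecutive batches."""

    def __init__(self, data: Sequence[float], batch_size: int) -> None:
        self.data = data
        self.batch_size = batch_size

    def __iter__(self) -> Iterator[List[float]]:
        return index_batches(self.data, self.batch_size)

    def __len__(self) -> int:
        # Number of batches, including a final partial batch.
        return (len(self.data) + self.batch_size - 1) // self.batch_size


def batch_loss(batch: List[float]) -> float:
    """Hypothetical loss summed over the batch (squared distance from zero)."""
    return sum(x * x for x in batch)


def train_epoch(loader: SimpleLoader) -> float:
    """After-style loop: iterate the loader and accumulate a plain float."""
    train_loss = 0.0
    for batch in loader:
        # In PyTorch, adding loss.item() rather than the loss tensor avoids
        # keeping each iteration's autograd history alive.
        train_loss += batch_loss(batch)
    # Assumed per-sample average over the whole epoch.
    return train_loss / len(loader.data)


loader = SimpleLoader([1.0, 2.0, 3.0, 4.0, 5.0], batch_size=2)
print(train_epoch(loader))
```

Here the loader yields `[1.0, 2.0]`, `[3.0, 4.0]` and `[5.0]`, the summed losses are 5, 25 and 25, and the epoch average is 55 / 5 = 11.0. Moving the batching into the loader keeps the training loop free of index arithmetic such as `i + BATCH_SIZE`.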

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: pytorch/examples

Commit Name: b87368e1e7fd832b505db9cc08015ac7af8f95de

Time: 2016-12-23

Author: jvanamersfoort@twitter.com

File Name: VAE/main.py

Class Name:

Method Name: train

Project Name: pyprob/pyprob

Commit Name: a8c9e491db1edde70c36fa8ae4a17aa3a4eaf49a

Time: 2017-10-09

Author: atilimgunes.baydin@gmail.com

File Name: pyprob/util.py

Class Name:

Method Name: