fbec92e2363493126b4927a167039fbd037f17bc,code/deep/DAN/DAN.py,,test,#Any#,90

Before Change

if cuda:
    data, target = data.cuda(), target.cuda()
data, target = Variable(data, volatile=True), Variable(target)
s_output, t_output = model(data, data)
test_loss += F.nll_loss(F.log_softmax(s_output, dim = 1), target, size_average=False).data[0] # sum up batch loss
pred = s_output.data.max(1)[1] # get the index of the max log-probability
correct += pred.eq(target.data.view_as(pred)).cpu().sum()
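
The "sum up batch loss" step can be illustrated without any framework. The snippet asks `F.nll_loss` for the batch sum rather than the batch mean, so the running total can be divided once by the dataset size at the end. The following is a minimal sketch of why that matters when the last batch is smaller than the others; the sample probabilities, labels, and the helper `nll` are hypothetical and are not taken from the commit.

```python
import math


def nll(probs, label):
    """Negative log-likelihood of the true class for one sample."""
    return -math.log(probs[label])


# Hypothetical per-sample class probabilities and labels (5 samples, 2 classes).
samples = [
    ([0.9, 0.1], 0),
    ([0.2, 0.8], 1),
    ([0.6, 0.4], 0),
    ([0.3, 0.7], 0),
    ([0.5, 0.5], 1),
]
batch_size = 2  # 5 samples -> batches of 2, 2 and 1

batches = [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]

# Strategy used in the snippet: sum the loss per batch, divide once by the dataset size.
summed = sum(nll(p, y) for batch in batches for p, y in batch)
mean_from_sums = summed / len(samples)

# Alternative: average inside each batch, then average the batch means.
# The single-sample final batch gets the same weight as a full batch.
batch_means = [sum(nll(p, y) for p, y in b) / len(b) for b in batches]
mean_of_means = sum(batch_means) / len(batch_means)

# Reference value computed over the whole dataset at once.
exact_mean = sum(nll(p, y) for p, y in samples) / len(samples)

print(mean_from_sums, mean_of_means, exact_mean)
```

With these made-up numbers the sum-then-divide result matches the dataset-wide mean, while the mean of batch means drifts because the final batch is short.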

After Change

test_loss = 0

correct = 0

with torch.no_grad():

for tgt_test_data, tgt_test_label in tgt_test_loader:

if cuda:

tgt_test_data, tgt_test_label = tgt_test_data.cuda(), tgt_test_label.cuda()

tgt_test_data, tgt_test_label = Variable(tgt_test_data), Variable(tgt_test_label)

tgt_pred, mmd_loss = model(tgt_test_data, tgt_test_data)

test_loss += F.nll_loss(F.log_softmax(tgt_pred, dim = 1), tgt_test_label, reduction="sum").item() // sum up batch loss

pred = tgt_pred.data.max(1)[1] // get the index of the max log-probability

correct += pred.eq(tgt_test_label.data.view_as(pred)).cpu().sum()

test_loss /= tgt_dataset_len

print("\n{} set: Average loss: {:.4f}, Accuracy: {}/{} ({:.2f}%)\n".format(

tgt_name, test_loss, correct, tgt_dataset_len,

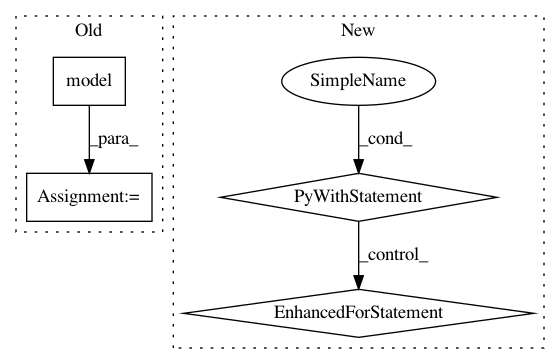

100. * correct / tgt_dataset_len))In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4
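
The change recorded above follows the PyTorch 0.4 migration: `Variable(..., volatile=True)` gives way to a `torch.no_grad()` block, `size_average=False` to `reduction="sum"`, and `.data[0]` on a scalar loss to `.item()`. A minimal, self-contained sketch of the same evaluation loop is shown below. It is not the repository's code: the function `evaluate`, the toy dataset, and the single-input `nn.Linear` stand-in model are assumptions made for illustration (the model in the commit takes two inputs and returns a prediction together with an MMD loss).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device="cpu"):
    """Return (mean per-sample loss, number of correct predictions)."""
    model.eval()
    total_loss, correct = 0.0, 0
    with torch.no_grad():  # takes the place of Variable(..., volatile=True)
        for data, label in loader:
            data, label = data.to(device), label.to(device)
            logits = model(data)
            # reduction="sum" takes the place of size_average=False,
            # and .item() takes the place of .data[0] on the scalar loss.
            total_loss += F.nll_loss(
                F.log_softmax(logits, dim=1), label, reduction="sum"
            ).item()
            pred = logits.argmax(dim=1)  # index of the max log-probability
            correct += (pred == label).sum().item()
    return total_loss / len(loader.dataset), correct


# Toy stand-ins (hypothetical): 10 random samples, 4 features, 3 classes.
torch.manual_seed(0)
toy_data = TensorDataset(torch.randn(10, 4), torch.randint(0, 3, (10,)))
toy_loader = DataLoader(toy_data, batch_size=4)
toy_model = nn.Linear(4, 3)

avg_loss, n_correct = evaluate(toy_model, toy_loader)
print("Average loss: {:.4f}, Accuracy: {}/{}".format(avg_loss, n_correct, len(toy_data)))
```

Calling `.item()` on the correct count as well keeps the accumulator a plain Python integer, so the percentage in a later `format` call is ordinary float arithmetic rather than tensor arithmetic.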

Instances

Project Name: jindongwang/transferlearning

Commit Name: fbec92e2363493126b4927a167039fbd037f17bc

Time: 2019-10-21

Author: 1299192934@qq.com

File Name: code/deep/DAN/DAN.py

Class Name:

Method Name: test

Project Name: tensorflow/tpu

Commit Name: 4be0f638ed7b73dbf0777320df374e91cce79144

Time: 2018-04-14

Author: huangyp@google.com

File Name: models/official/retinanet/retinanet_model.py

Class Name:

Method Name: _model_fn

Project Name: pytorch/tutorials

Commit Name: 0ad33d606682537466f3430fc6d6ac7d47460f1a

Time: 2018-04-24

Author: soumith@gmail.com

File Name: beginner_source/nlp/deep_learning_tutorial.py

Class Name:

Method Name: