d1f352a71ba7056a4dc3adb6698e0e792d78b0b2,models/AttEnsemble.py,AttEnsemble,_forward,#AttEnsemble#Any#Any#Any#Any#,54

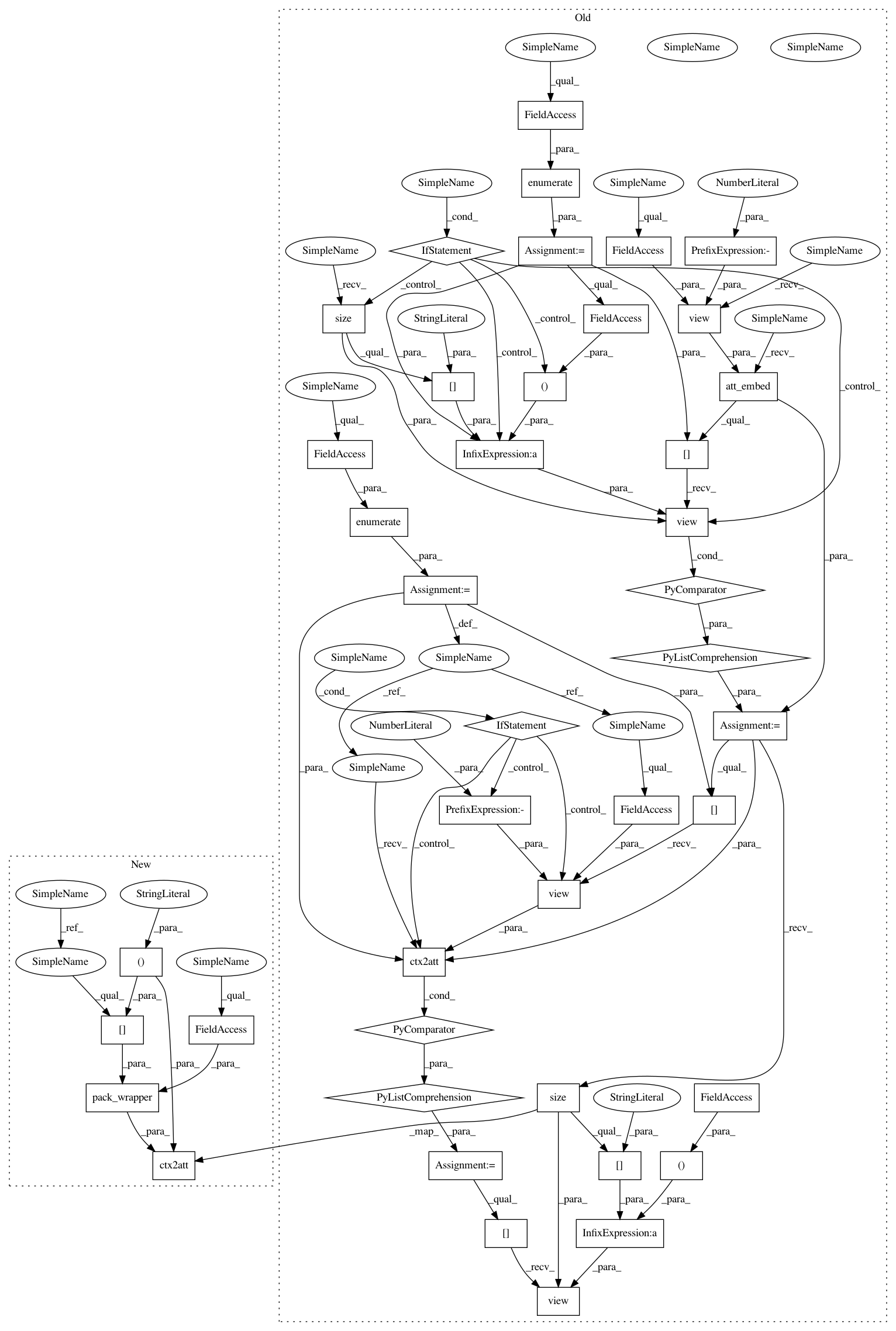

Before Change

// embed fc and att feats

fc_feats = [m.fc_embed(fc_feats) for m in self.models]

_att_feats = [m.att_embed(att_feats.view(-1, m.att_feat_size)) for m in self.models]

att_feats = [_att_feats[i].view(*(att_feats.size()[:-1] + (m.rnn_size,))) for i,m in enumerate(self.models)]

// Project the attention feats first to reduce memory and computation consumption.

p_att_feats = [m.ctx2att(att_feats[i].view(-1, m.rnn_size)) for i, m in enumerate(self.models)]

p_att_feats = [p_att_feats[i].view(*(att_feats[i].size()[:-1] + (m.att_hid_size,))) for i,m in enumerate(self.models)]

for i in range(seq.size(1) - 1):

if self.training and i >= 1 and self.ss_prob > 0.0: // otherwise no need to sample

After Change

// embed fc and att feats

fc_feats = [m.fc_embed(fc_feats) for m in self.models]

att_feats = [pack_wrapper(m.att_embed, att_feats[...,:m.att_feat_size], att_masks) for m in self.models]

// Project the attention feats first to reduce memory and computation consumption.

p_att_feats = [m.ctx2att(att_feats[i]) for i,m in enumerate(self.models)]

for i in range(seq.size(1) - 1):

if self.training and i >= 1 and self.ss_prob > 0.0: // otherwise no need to sample

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 42

Instances

Project Name: ruotianluo/self-critical.pytorch

Commit Name: d1f352a71ba7056a4dc3adb6698e0e792d78b0b2

Time: 2017-11-13

Author: rluo@ttic.edu

File Name: models/AttEnsemble.py

Class Name: AttEnsemble

Method Name: _forward

Project Name: ruotianluo/ImageCaptioning.pytorch

Commit Name: d1f352a71ba7056a4dc3adb6698e0e792d78b0b2

Time: 2017-11-13

Author: rluo@ttic.edu

File Name: models/AttEnsemble.py

Class Name: AttEnsemble

Method Name: _forward

Project Name: ruotianluo/ImageCaptioning.pytorch

Commit Name: d1f352a71ba7056a4dc3adb6698e0e792d78b0b2

Time: 2017-11-13

Author: rluo@ttic.edu

File Name: models/AttEnsemble.py

Class Name: AttEnsemble

Method Name: _sample
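
The difference between the two listings can be illustrated with a small sketch. The Before Change code flattens the attention features to rows with `view(-1, ...)`, applies the embedding, and restores the shape with a second `view`. The After Change code hands the embedding, the features, and `att_masks` to `pack_wrapper`. The body of `pack_wrapper` is not shown in this record, so the sketch below is only an assumption about its intent: apply the module to the positions the mask marks as valid and leave padded positions at zero. It is written in plain Python on nested lists so that it runs without PyTorch; `apply_flat`, `apply_masked`, and `toy_embed` are hypothetical names introduced for illustration.

```python
from typing import Callable, List, Optional

Vector = List[float]
Batch = List[List[Vector]]  # shape: (batch, regions, dim)


def apply_flat(module: Callable[[Vector], Vector], feats: Batch) -> Batch:
    """Before-change pattern: flatten to rows, apply the module, restore the shape."""
    batch = len(feats)
    regions = len(feats[0]) if feats else 0  # assumes every item has the same number of regions
    rows = [row for item in feats for row in item]  # analogous to view(-1, dim)
    out_rows = [module(row) for row in rows]
    # analogous to view(batch, regions, out_dim)
    return [out_rows[b * regions:(b + 1) * regions] for b in range(batch)]


def apply_masked(
    module: Callable[[Vector], Vector],
    feats: Batch,
    masks: Optional[List[List[int]]] = None,
) -> Batch:
    """Hypothetical stand-in for pack_wrapper: skip padded rows, fill them with zeros."""
    if masks is None:
        # Without a mask every position is treated as valid.
        return [[module(row) for row in item] for item in feats]
    out: Batch = []
    for item, mask in zip(feats, masks):
        embedded = [module(row) if keep else None for row, keep in zip(item, mask)]
        # Output width is taken from the first valid row of this item (0 if none is valid).
        width = next((len(v) for v in embedded if v is not None), 0)
        out.append([v if v is not None else [0.0] * width for v in embedded])
    return out


def toy_embed(row: Vector) -> Vector:
    """Toy embedding with a bias term, so padded rows are visibly affected by it."""
    return [sum(row) + 1.0, row[0] - row[1]]


# Two items with two regions each; the last region of the second item is padding.
feats = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [0.0, 0.0]]]
masks = [[1, 1], [1, 0]]

flat_out = apply_flat(toy_embed, feats)
masked_out = apply_masked(toy_embed, feats, masks)
```

With the flat pattern the padded row still passes through the embedding and picks up its bias, whereas the masked variant leaves it at zero. Whether the real `pack_wrapper` zero-fills in exactly this way is not confirmed by the listing.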