79fc09d66d3f4736b9fb4f5756a78725719f3808,train.py,,train,#Any#,27

Before Change

```python
optimizer = optim.Adam(model.parameters(), lr=opt.learning_rate)

# Load the optimizer
if infos.get("state_dict", None):
    optimizer.load_state_dict(infos["state_dict"])

while True:
    if update_lr_flag:
        # Assign the learning rate
        if epoch > opt.learning_rate_decay_start and opt.learning_rate_decay_start >= 0:
            ...  # decay logic not included in this excerpt
```
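The Before guard tests the truthiness of `infos.get("state_dict", None)`. A missing key, a `None` value and an empty dict therefore all skip the restore. An explicit `is not None` check, the style the After Change uses for `start_from`, skips only a missing or `None` value. The helper names and toy dictionaries below are hypothetical and serve only to show that difference:

```python
def truthy_guard(infos: dict) -> bool:
    # Before-style check: any falsy value (missing, None, empty dict) skips the restore.
    return bool(infos.get("state_dict", None))


def explicit_guard(infos: dict) -> bool:
    # "is not None"-style check: only a missing key or an explicit None skips the restore.
    return infos.get("state_dict", None) is not None


for infos in ({}, {"state_dict": None}, {"state_dict": {}}, {"state_dict": {"state": {}}}):
    print(infos, truthy_guard(infos), explicit_guard(infos))
```

The two checks disagree only on the empty-dict case. There, the truthiness check silently skips the restore.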

After Change

```python
optimizer = optim.Adam(model.parameters(), lr=opt.learning_rate)

# Load the optimizer
if vars(opt).get("start_from", None) is not None:
    optimizer.load_state_dict(torch.load(os.path.join(opt.start_from, "optimizer.pth")))

while True:
    if update_lr_flag:
        # Assign the learning rate
        if epoch > opt.learning_rate_decay_start and opt.learning_rate_decay_start >= 0:
            ...  # decay logic not included in this excerpt
```
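After the change, the optimizer state is no longer read from `infos["state_dict"]`. Instead it is loaded from its own `optimizer.pth` file inside the `opt.start_from` directory. The sketch below shows a save/resume round trip for that layout. The `nn.Linear` model, the `build`, `save_checkpoint` and `maybe_restore` helpers, and the temporary checkpoint directory are hypothetical stand-ins, not code from the project:

```python
import os
import tempfile
from argparse import Namespace

import torch
from torch import nn, optim


def build(lr: float):
    # Hypothetical stand-in model; the real training script builds its own model here.
    model = nn.Linear(4, 2)
    optimizer = optim.Adam(model.parameters(), lr=lr)
    return model, optimizer


def save_checkpoint(optimizer, checkpoint_dir: str) -> None:
    # Write the optimizer state to its own file, using the "optimizer.pth" name from the diff.
    torch.save(optimizer.state_dict(), os.path.join(checkpoint_dir, "optimizer.pth"))


def maybe_restore(optimizer, opt: Namespace) -> bool:
    # Same guard as the After Change: restore only when start_from is set.
    if vars(opt).get("start_from", None) is not None:
        optimizer.load_state_dict(torch.load(os.path.join(opt.start_from, "optimizer.pth")))
        return True
    return False


with tempfile.TemporaryDirectory() as ckpt_dir:
    model, optimizer = build(lr=1e-3)
    # One step gives Adam per-parameter state (step count and moment estimates).
    loss = model(torch.randn(8, 4)).pow(2).mean()
    loss.backward()
    optimizer.step()
    save_checkpoint(optimizer, ckpt_dir)

    # A fresh run whose start_from points at the checkpoint directory.
    _, resumed = build(lr=1e-3)
    restored = maybe_restore(resumed, Namespace(start_from=ckpt_dir))
    print(restored, len(resumed.state))  # True 2
```

Keeping the optimizer state in a separate file lets the training-info record stay small. It also makes the restore depend only on whether `start_from` was supplied.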

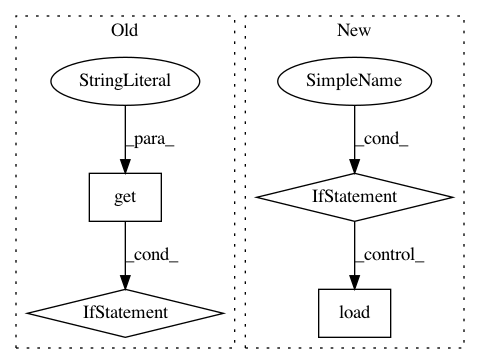

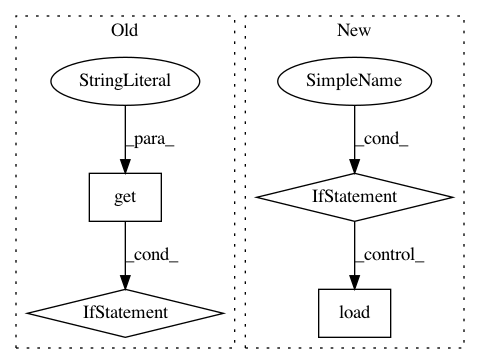

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: ruotianluo/self-critical.pytorch

Commit Name: 79fc09d66d3f4736b9fb4f5756a78725719f3808

Time: 2017-02-10

Author: rluo@ttic.edu

File Name: train.py

Class Name:

Method Name: train

Project Name: arnomoonens/yarll

Commit Name: b2f4c901679bd175a53ab35ffa9de1514584a03f

Time: 2018-03-15

Author: arno.moonens@gmail.com

File Name: environment/registration.py

Class Name:

Method Name: make