4431e04b482238eee5d581eb2c9ca6789ac0ff12,layers/attention.py,AttentionRNNCell,forward,#AttentionRNNCell#Any#Any#Any#Any#Any#Any#Any#,136

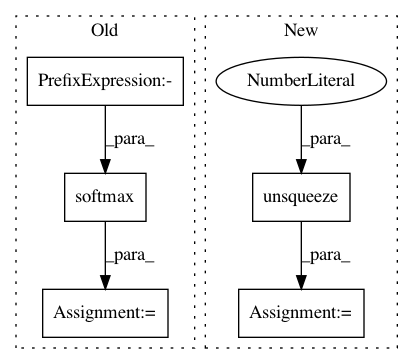

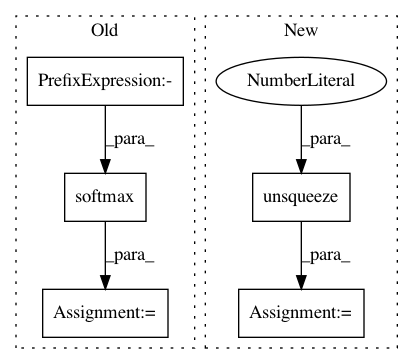

Before Change

```python
# Update the window
self.win_idx = torch.argmax(alignment,1).long()[0].item()
# Normalize context weight
alignment = F.softmax(alignment, dim=-1)
# alignment = 5 * alignment
# alignment = torch.sigmoid(alignment) / torch.sigmoid(alignment).sum(dim=1).unsqueeze(1)
# Attention context vector
# (batch, 1, dim)
```
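The "Before Change" steps can be run in isolation. The sketch below is a minimal standalone version, assuming `alignment` is a `(batch, T_x)` tensor of raw attention scores (the shape is inferred from the `dim=1` / `dim=-1` arguments, not stated in the source). The function name `normalize_softmax` and the sample scores are hypothetical.

```python
from typing import Tuple

import torch
import torch.nn.functional as F


def normalize_softmax(alignment: torch.Tensor) -> Tuple[int, torch.Tensor]:
    """Hypothetical helper mirroring the 'Before Change' lines.

    alignment: (batch, T_x) raw scores.
    Returns the window index of batch element 0 and softmax weights.
    """
    # argmax over the encoder-time axis; [0] keeps only batch element 0
    win_idx = torch.argmax(alignment, 1).long()[0].item()
    # softmax maps raw scores to positive weights that sum to 1 per row
    weights = F.softmax(alignment, dim=-1)
    return win_idx, weights


scores = torch.tensor([[1.0, 3.0, 0.5, 2.0]])  # hypothetical (1, 4) scores
win_idx, weights = normalize_softmax(scores)
print(win_idx)  # 1
print(weights)
```

Because softmax is monotonic, reading the window index from the raw scores (as the original code does) or from the normalized weights gives the same position. Note that `[0]` means only the first batch element determines `self.win_idx`.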

After Change

```python
# Normalize context weight
# alignment = F.softmax(alignment, dim=-1)
# alignment = 5 * alignment
alignment = torch.sigmoid(alignment) / torch.sigmoid(alignment).sum(dim=1).unsqueeze(1)
# Attention context vector
# (batch, 1, dim)
# c_i = \sum_{j=1}^{T_x} \alpha_{ij} h_j
context = torch.bmm(alignment.unsqueeze(1), annots)
```
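To compare the two normalizations, the sketch below applies softmax and the normalized sigmoid from the "After Change" code to the same scores, then forms the context vector $c_i = \sum_j \alpha_{ij} h_j$ with `torch.bmm` as in the last line of that code. It assumes `alignment` is `(batch, T_x)` and `annots` is `(batch, T_x, dim)`, which is consistent with the `(batch, 1, dim)` comment but not stated explicitly; the helper names, scores, and random encoder outputs are hypothetical.

```python
import torch
import torch.nn.functional as F


def sigmoid_normalize(alignment: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: normalized-sigmoid weights as in 'After Change'."""
    # Squash each score into (0, 1) independently, then rescale each row
    # so the weights over encoder time steps sum to 1.
    s = torch.sigmoid(alignment)
    return s / s.sum(dim=1).unsqueeze(1)


def context_vector(alignment: torch.Tensor, annots: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: c_i = sum_j alpha_ij h_j via batched matmul.

    alignment: (batch, T_x); annots: (batch, T_x, dim) -> (batch, 1, dim).
    """
    return torch.bmm(alignment.unsqueeze(1), annots)


scores = torch.tensor([[1.0, 3.0, 0.5, 2.0]])  # hypothetical (1, 4) scores
soft = F.softmax(scores, dim=-1)
sig = sigmoid_normalize(scores)
print(soft)
print(sig)

torch.manual_seed(0)
annots = torch.randn(1, 4, 8)  # hypothetical encoder outputs
ctx = context_vector(sig, annots)
# Explicit weighted sum over encoder time steps, for comparison with bmm
manual = (sig.unsqueeze(-1) * annots).sum(dim=1, keepdim=True)
print(ctx.shape)                    # torch.Size([1, 1, 8])
print(torch.allclose(ctx, manual))  # True
```

For these scores, softmax puts roughly 63% of the weight on position 1, while the normalized sigmoid gives it roughly 30%: each sigmoid is bounded in (0, 1), so large score gaps are compressed before the row is rescaled, yielding a flatter distribution. Both preserve the ordering of the scores, so an argmax-based window index is unaffected by the choice.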

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| mozilla/TTS | 4431e04b482238eee5d581eb2c9ca6789ac0ff12 | 2019-01-16 | egolge@mozilla.com | layers/attention.py | AttentionRNNCell | forward |
| jadore801120/attention-is-all-you-need-pytorch | e2c95f83907f4ee480eef074822471b4844f498c | 2019-11-27 | jadore801120@gmail.com | transformer/Modules.py | ScaledDotProductAttention | forward |
| mozilla/TTS | f2ef1ca36afd4eb74bd1ad36a7a84411f69e5435 | 2018-09-19 | erengolge@gmail.com | layers/attention.py | AttentionRNNCell | forward |