6cd0cd3bd99ec2df47bb571a18e0b70819134504,rllib/agents/impala/impala.py,OverrideDefaultResourceRequest,default_resource_request,#Any#Any#,101

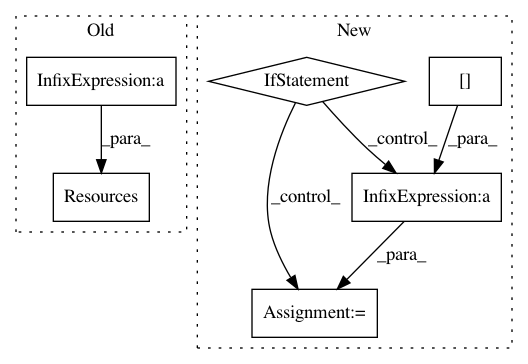

Before Change

def default_resource_request(cls, config):
    cf = dict(cls._default_config, **config)
    Trainer._validate_config(cf)
    return Resources(
        cpu=cf["num_cpus_for_driver"],
        gpu=cf["num_gpus"],
        memory=cf["memory"],
        object_store_memory=cf["object_store_memory"],
        extra_cpu=cf["num_cpus_per_worker"] * cf["num_workers"] +
        cf["num_aggregation_workers"],
        extra_gpu=cf["num_gpus_per_worker"] * cf["num_workers"],
        extra_memory=cf["memory_per_worker"] * cf["num_workers"],
        extra_object_store_memory=cf["object_store_memory_per_worker"] *
        cf["num_workers"])

def make_learner_thread(local_worker, config):
    if config["num_gpus"] > 1 or config["num_data_loader_buffers"] > 1:

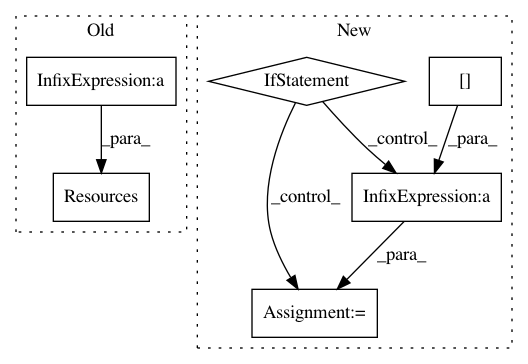

After Change

        "CPU": cf["num_cpus_per_worker"],
        "GPU": cf["num_gpus_per_worker"],
    } for _ in range(cf["num_workers"])
] + ([
    {
        # Evaluation workers (+1 b/c of the additional local
        # worker)
        "CPU": eval_config.get("num_cpus_per_worker",
                               cf["num_cpus_per_worker"]),
        "GPU": eval_config.get("num_gpus_per_worker",
                               cf["num_gpus_per_worker"]),
    } for _ in range(cf["evaluation_num_workers"] + 1)
] if cf["evaluation_interval"] else []),
strategy=config.get("placement_strategy", "PACK"))
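
The After Change fragment replaces aggregate totals (such as `extra_cpu`) with one resource dictionary per worker. A minimal sketch of that list construction in plain Python, with no Ray import, might look like the following. The helper name `build_bundles`, the `example_cf` values, and reading `eval_config` from an `"evaluation_config"` key are assumptions for illustration. The sketch also leaves out whatever precedes the fragment in the original commit, since that part is not shown here.

```python
from typing import Any, Dict, List


def build_bundles(cf: Dict[str, Any]) -> List[Dict[str, float]]:
    """Hypothetical helper: build one resource bundle per worker process."""
    # Assumption: evaluation overrides live under "evaluation_config".
    eval_config = cf.get("evaluation_config", {})

    # One bundle per rollout worker.
    rollout = [{
        "CPU": cf["num_cpus_per_worker"],
        "GPU": cf["num_gpus_per_worker"],
    } for _ in range(cf["num_workers"])]

    # Evaluation workers (+1 for the additional local worker), added only
    # when evaluation is enabled. Per-worker values fall back to the main
    # config when the evaluation config does not override them.
    evaluation = [{
        "CPU": eval_config.get("num_cpus_per_worker",
                               cf["num_cpus_per_worker"]),
        "GPU": eval_config.get("num_gpus_per_worker",
                               cf["num_gpus_per_worker"]),
    } for _ in range(cf["evaluation_num_workers"] + 1)
    ] if cf["evaluation_interval"] else []

    return rollout + evaluation


# Illustrative config values (not taken from the commit).
example_cf = {
    "num_workers": 2,
    "num_cpus_per_worker": 1,
    "num_gpus_per_worker": 0,
    "evaluation_num_workers": 1,
    "evaluation_interval": 5,
    "evaluation_config": {"num_cpus_per_worker": 2},
}
bundles = build_bundles(example_cf)
```

With these illustrative values the list holds two rollout bundles followed by two evaluation bundles. Setting `evaluation_interval` to a falsy value drops the evaluation bundles entirely, which mirrors the conditional at the end of the fragment.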

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: ray-project/ray

Commit Name: 6cd0cd3bd99ec2df47bb571a18e0b70819134504

Time: 2021-02-25

Author: sven@anyscale.io

File Name: rllib/agents/impala/impala.py

Class Name: OverrideDefaultResourceRequest

Method Name: default_resource_request

Project Name: ray-project/ray

Commit Name: ef944bc5f0d7764cd99d50500e470eac005a3d01

Time: 2021-03-04

Author: sven@anyscale.io

File Name: rllib/agents/impala/impala.py

Class Name: OverrideDefaultResourceRequest

Method Name: default_resource_request

Project Name: bokeh/bokeh

Commit Name: 45ebd1c99074327d5aace6552e38b142e8eb477b

Time: 2017-08-09

Author: bryanv@continuum.io

File Name: bokeh/sphinxext/bokeh_plot.py

Class Name:

Method Name: