30efaaa572d798212c926e5b2edbf2b0fe7fa2f1,opennmt/decoders/rnn_decoder.py,AttentionalRNNDecoder,_get_initial_state,#AttentionalRNNDecoder#Any#Any#Any#,117

Before Change

if tf.executing_eagerly():

raise RuntimeError("Attention-based RNN decoder are currently not compatible "

"with eager execution")

initial_cell_state = super(AttentionalRNNDecoder, self)._get_initial_state(

batch_size, dtype, initial_state=initial_state)

attention_mechanism = self.attention_mechanism_class(

self.cell.output_size,

self.memory,

memory_sequence_length=self.memory_sequence_length,

After Change

self.memory,

memory_sequence_length=self.memory_sequence_length)

decoder_state = self.cell.get_initial_state(batch_size=batch_size, dtype=dtype)

if initial_state is not None:

if self.first_layer_attention:

cell_state = list(decoder_state)

cell_state[0] = decoder_state[0].cell_state

cell_state = self.bridge(initial_state, cell_state)

cell_state[0] = decoder_state[0].clone(cell_state=cell_state[0])

decoder_state = tuple(cell_state)

else:

cell_state = self.bridge(initial_state, decoder_state.cell_state)

decoder_state = decoder_state.clone(cell_state=cell_state)

return decoder_state

def step(self,

inputs,

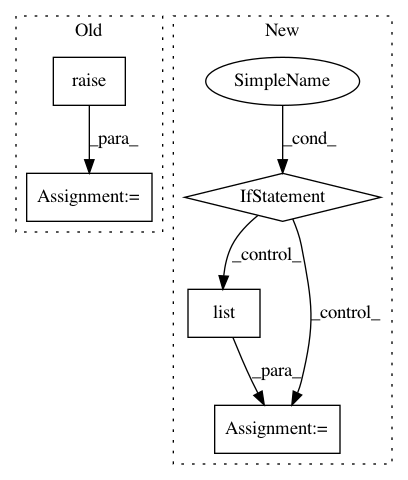

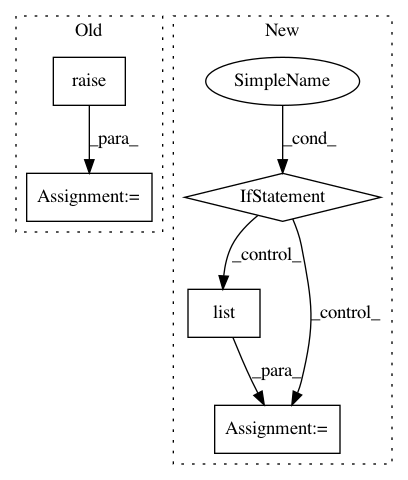

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: OpenNMT/OpenNMT-tf

Commit Name: 30efaaa572d798212c926e5b2edbf2b0fe7fa2f1

Time: 2019-07-15

Author: guillaume.klein@systrangroup.com

File Name: opennmt/decoders/rnn_decoder.py

Class Name: AttentionalRNNDecoder

Method Name: _get_initial_state

Project Name: PetrochukM/PyTorch-NLP

Commit Name: 2a1a6851344172e0134f3c5f4f5c1021975f2812

Time: 2018-03-11

Author: petrochukm@gmail.com

File Name: torchnlp/samplers/bucket_batch_sampler.py

Class Name: BucketBatchSampler

Method Name: __iter__

Project Name: jhfjhfj1/autokeras

Commit Name: a6819ac67444b66143ab8e0cad8a42cb7635730d

Time: 2020-07-17

Author: haifengj@google.com

File Name: autokeras/blocks/basic.py

Class Name: ResNetBlock

Method Name: build