cfeef8cb854a24371f0ef5d7d9c68f23675c97ee,a2_decoder.py,,init,#,91

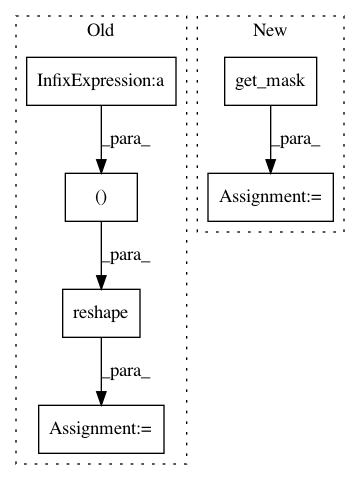

Before Change

Embedding = tf.get_variable("Embedding_d", shape=[vocab_size, embed_size], initializer=initializer)

decoder_input_x = tf.placeholder(tf.int32, [batch_size, decoder_sent_length], name="input_x")  # [4,10]

print("1.decoder_input_x:", decoder_input_x)

decoder_input_x_ = tf.reshape(decoder_input_x, (batch_size * decoder_sent_length,))  # [batch_size*sequence_length]

embedded_words = tf.nn.embedding_lookup(Embedding, decoder_input_x_)  # [batch_size*sequence_length,embed_size]

Q = embedded_words  # [batch_size*sequence_length,embed_size]

K_s = embedded_words  # [batch_size*sequence_length,embed_size]

K_v_encoder = tf.placeholder(tf.float32, [batch_size * sequence_length, d_model], name="input_x")  # sequence_length

print("2.output from encoder:",K_v_encoder)
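
The flatten-then-lookup step in the snippet above can be traced without TensorFlow. The following is a minimal pure-Python sketch, not the repository's code: `flatten_ids`, `embedding_lookup`, and the toy sizes are hypothetical stand-ins for `tf.reshape` and `tf.nn.embedding_lookup`, used only to show how the shapes noted in the comments arise.

```python
# Hypothetical helpers that mimic the shapes in the "Before Change" snippet.

def flatten_ids(batch_ids):
    """Flatten [batch_size, sent_length] ids into a list of length batch_size * sent_length."""
    return [token_id for row in batch_ids for token_id in row]


def embedding_lookup(table, flat_ids):
    """Select one table row per id, giving [len(flat_ids), embed_size]."""
    return [table[token_id] for token_id in flat_ids]


# Toy sizes chosen for illustration only.
vocab_size, embed_size = 5, 3
batch_size, sent_length = 2, 4

# Row v of the table is the embedding of token id v.
table = [[float(v * 10 + e) for e in range(embed_size)] for v in range(vocab_size)]
batch_ids = [[0, 1, 2, 3], [4, 3, 2, 1]]

flat_ids = flatten_ids(batch_ids)            # length batch_size * sent_length
embedded = embedding_lookup(table, flat_ids)  # [batch_size * sent_length, embed_size]
```

In this sketch, `embedded` plays the role of `embedded_words`, which the snippet assigns to both `Q` and `K_s`.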

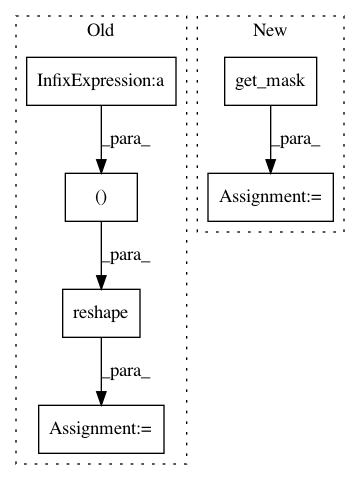

After Change

K_s=decoder_input_embedding

K_v_encoder= tf.get_variable("v_variable",shape=[batch_size,decoder_sent_length, d_model],initializer=initializer)  # tf.float32,

print("2.output from encoder:",K_v_encoder)

mask = get_mask(decoder_sent_length)  # sequence_length

decoder = Decoder(d_model, d_k, d_v, sequence_length, h, batch_size, Q, K_s, K_v_encoder,decoder_sent_length,mask=mask,num_layer=num_layer)

return decoder,Q, K_s
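
The body of `get_mask` is not shown in this change. Decoder self-attention commonly uses an additive look-ahead mask, so the sketch below assumes that form. `causal_mask` and the `-1e9` fill value are illustrative assumptions, not the project's implementation.

```python
# Hypothetical look-ahead mask; the real get_mask may differ.

def causal_mask(length, blocked=-1e9):
    """Return a [length, length] additive mask.

    Entry [query][key] is 0.0 when key <= query (the position may be attended to)
    and `blocked` when key > query (a future position).
    """
    return [
        [0.0 if key <= query else blocked for key in range(length)]
        for query in range(length)
    ]


mask_example = causal_mask(3)
# Row 0 can only see position 0; row 2 can see positions 0, 1, and 2.
```

Adding such a mask to the attention scores before the softmax drives the weights on future positions toward zero, which is the usual reason a decoder takes a `mask` argument.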

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: brightmart/text_classification

Commit Name: cfeef8cb854a24371f0ef5d7d9c68f23675c97ee

Time: 2017-07-06

Author: brightmart@hotmail.com

File Name: a2_decoder.py

Class Name:

Method Name: init

Project Name: brightmart/text_classification

Commit Name: cfeef8cb854a24371f0ef5d7d9c68f23675c97ee

Time: 2017-07-06

Author: brightmart@hotmail.com

File Name: a2_encoder.py

Class Name:

Method Name: init

Project Name: brightmart/text_classification

Commit Name: cfeef8cb854a24371f0ef5d7d9c68f23675c97ee

Time: 2017-07-06

Author: brightmart@hotmail.com

File Name: a2_multi_head_attention.py

Class Name:

Method Name: multi_head_attention_for_sentence_vectorized