19ad2d60a7022bb5125855c029f27d86aaa46d64,rl_coach/filters/reward/reward_normalization_filter.py,RewardNormalizationFilter,filter,#RewardNormalizationFilter#Any#Any#,65

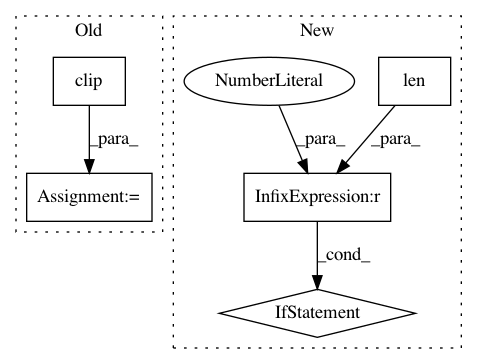

Before Change

        reward = (reward - self.running_rewards_stats.mean) / \
                 (self.running_rewards_stats.std + 1e-15)
        reward = np.clip(reward, self.clip_min, self.clip_max)
        return reward

    def get_filtered_reward_space(self, input_reward_space: RewardSpace) -> RewardSpace:

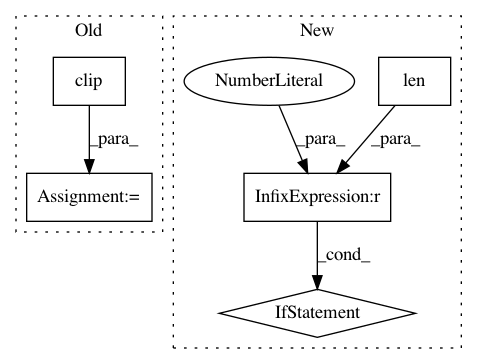

After Change

    def filter(self, reward: RewardType, update_internal_state: bool=True) -> RewardType:
        if update_internal_state:
            if not isinstance(reward, np.ndarray) or len(reward.shape) < 2:
                reward = np.array([[reward]])
            self.running_rewards_stats.push(reward)

        return self.running_rewards_stats.normalize(reward).squeeze()
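The change above moves the inline "subtract mean, divide by std, clip" arithmetic into a `normalize` call on the running-statistics object. The sketch below illustrates that pattern in a self-contained form. It is not the project's implementation: the `RunningStats` class, the Welford-style update in `push`, the default clip bounds, and the assumption that `normalize` performs the clipping are all hypothetical stand-ins, since the snippets only show the calls `push(reward)` and `normalize(reward)`.

```python
import numpy as np


class RunningStats:
    """Hypothetical stand-in for the object behind `running_rewards_stats`."""

    def __init__(self, clip_min=-5.0, clip_max=5.0, epsilon=1e-15):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean
        self.clip_min = clip_min
        self.clip_max = clip_max
        self.epsilon = epsilon

    @property
    def std(self):
        # Population standard deviation of everything pushed so far.
        return np.sqrt(self.m2 / self.count) if self.count > 0 else 0.0

    def push(self, batch):
        # Welford's online update, applied to each value in the batch.
        for x in np.asarray(batch, dtype=float).ravel():
            self.count += 1
            delta = x - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (x - self.mean)

    def normalize(self, batch):
        # Assumption: normalization and clipping both live here, mirroring
        # the arithmetic that the "Before Change" code performed inline.
        batch = np.asarray(batch, dtype=float)
        scaled = (batch - self.mean) / (self.std + self.epsilon)
        return np.clip(scaled, self.clip_min, self.clip_max)


def filter_reward(stats, reward, update_internal_state=True):
    """Free-function version of the "After Change" filter method."""
    if update_internal_state:
        # Promote scalars and 1-D inputs to a 2-D array before pushing.
        if not isinstance(reward, np.ndarray) or len(reward.shape) < 2:
            reward = np.array([[reward]])
        stats.push(reward)
    return stats.normalize(reward).squeeze()


stats = RunningStats()
for r in (1.0, 2.0, 3.0):
    filter_reward(stats, r)

# With update_internal_state=False the statistics stay frozen.
frozen = filter_reward(stats, 2.0, update_internal_state=False)
```

In this sketch, passing `update_internal_state=False` normalizes a reward against the statistics collected so far without changing them, which is the behaviour the new `filter` signature appears to enable.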

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: NervanaSystems/coach

Commit Name: 19ad2d60a7022bb5125855c029f27d86aaa46d64

Time: 2019-07-14

Author: gal.leibovich@intel.com

File Name: rl_coach/filters/reward/reward_normalization_filter.py

Class Name: RewardNormalizationFilter

Method Name: filter

Project Name: IBM/adversarial-robustness-toolbox

Commit Name: fa961f2290c3144f1c5a5c9b8a484610ab835032

Time: 2019-05-14

Author: Maria-Irina.Nicolae@ibm.com

File Name: art/defences/spatial_smoothing.py

Class Name: SpatialSmoothing

Method Name: __call__

Project Name: nipy/dipy

Commit Name: 81f6a059662f2ff2f7c214f36cada3a7cb920da1

Time: 2013-12-10

Author: mrbago@gmail.com

File Name: dipy/reconst/peaks.py

Class Name:

Method Name: peak_directions