4287aef6a3a82436b4e3e156b22ede235eb4e6ba,texar/modules/decoders/transformer_decoders.py,TransformerDecoder,__init__,#TransformerDecoder#Any#Any#Any#,28

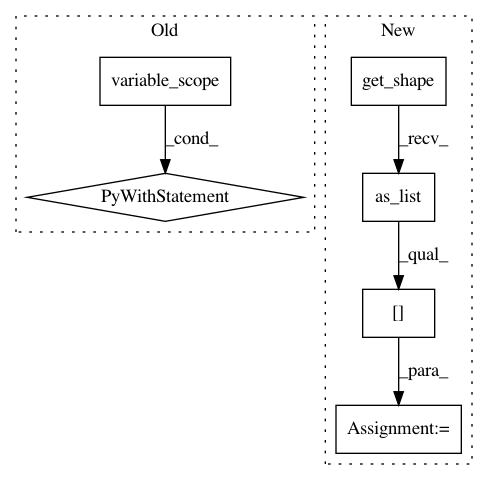

Before Change

```python
        ModuleBase.__init__(self, hparams)

        self._vocab_size = vocab_size
        self._embedding = None
        with tf.variable_scope(self.variable_scope):
            if self._hparams.embedding_enabled:
                if embedding is None and vocab_size is None:
                    raise ValueError('If "embedding" is not provided, '
                                     '"vocab_size" must be specified.')
                if isinstance(embedding, tf.Variable):
                    self._embedding = embedding
                else:
                    self._embedding = layers.get_embedding(
                        self._hparams.embedding, embedding, vocab_size,
                        variable_scope="dec_embed")
                embed_dim = self._embedding.shape.as_list()[-1]
                if self._hparams.zero_pad:
                    self._embedding = tf.concat(
                        (tf.zeros(shape=[1, embed_dim]),
                         self._embedding[1:, :]), 0)
                if self._hparams.embedding.trainable:
                    self._add_trainable_variable(self._embedding)

    @staticmethod
    def default_hparams():
        return {
            "embedding_enabled": True,
```
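The `zero_pad` branch above replaces row 0 of the embedding matrix with zeros and keeps the remaining rows, presumably so that token id 0 (commonly reserved for padding) maps to a zero vector. The sketch below reproduces that row operation with plain Python lists instead of TensorFlow tensors; the helper name `zero_pad_first_row` is hypothetical and not part of texar.

```python
from typing import List

Matrix = List[List[float]]


def zero_pad_first_row(embedding: Matrix) -> Matrix:
    """Return a copy of `embedding` whose row 0 is all zeros.

    Hypothetical helper mirroring
    tf.concat((tf.zeros([1, embed_dim]), embedding[1:, :]), 0):
    the first row is replaced, the other rows are copied unchanged.
    """
    if not embedding:
        raise ValueError("embedding must have at least one row")
    embed_dim = len(embedding[0])  # plays the role of shape.as_list()[-1]
    zero_row = [0.0] * embed_dim
    return [zero_row] + [list(row) for row in embedding[1:]]


table = [[0.5, -0.2], [0.1, 0.3], [0.7, 0.9]]
padded = zero_pad_first_row(table)
print(padded)  # [[0.0, 0.0], [0.1, 0.3], [0.7, 0.9]]
```

The input is not modified; as with `tf.concat`, a new matrix of the same shape is produced.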

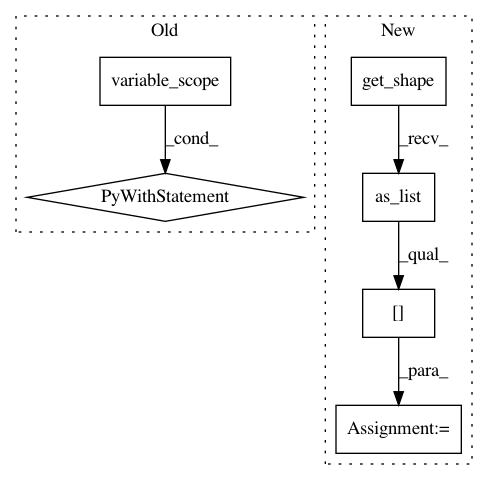

After Change

```python
                if self._hparams.embedding.trainable:
                    self._add_trainable_variable(self._embedding)

                if self._vocab_size is None:
                    self._vocab_size = self._embedding.get_shape().as_list()[0]

    @staticmethod
    def default_hparams():
        return {
```
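The change adds a fallback: when `vocab_size` is not passed, it is read from the first dimension of the embedding matrix (`get_shape().as_list()[0]`). A minimal sketch of the combined logic, using plain nested lists in place of a TensorFlow variable; `resolve_vocab_size` is a hypothetical name used only for illustration.

```python
from typing import List, Optional

Matrix = List[List[float]]


def resolve_vocab_size(embedding: Optional[Matrix],
                       vocab_size: Optional[int]) -> int:
    """Hypothetical helper mirroring the decoder's vocab-size handling."""
    # Already present before the change: one of the two must be given.
    if embedding is None and vocab_size is None:
        raise ValueError('If "embedding" is not provided, '
                         '"vocab_size" must be specified.')
    # Added by the change: infer the size from the number of rows,
    # like self._embedding.get_shape().as_list()[0].
    if vocab_size is None:
        return len(embedding)
    return vocab_size


print(resolve_vocab_size([[0.0, 0.0]] * 5, None))  # 5
print(resolve_vocab_size(None, 100))               # 100
```

As in the "after" code, an explicitly passed `vocab_size` is kept as-is; the sketch does not check it against the embedding's row count.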

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| asyml/texar | 4287aef6a3a82436b4e3e156b22ede235eb4e6ba | 2017-12-11 | shore@pku.edu.cn | texar/modules/decoders/transformer_decoders.py | TransformerDecoder | `__init__` |
| haotianteng/Chiron | a9b67d7ccc6357ffd07cb76600bc651e4bcac14b | 2018-09-01 | havens.teng@gmail.com | chiron/cnn.py | | RNA_model2 |
| asyml/texar | 269e11a506f1dfa80d95f27b3e2d3df845c80ae3 | 2017-12-09 | zhiting.hu@petuum.com | texar/modules/encoders/transformer_encoders.py | TransformerEncoder | `__init__` |