428516056abe41f135133e732a8d44af6ce9a234,rllib/policy/torch_policy.py,TorchPolicy,learn_on_batch,#TorchPolicy#Any#,221

Before Change

train_batch = self._lazy_tensor_dict(postprocessed_batch)

loss_out = self._loss(self, self.model, self.dist_class, train_batch)

self._optimizer.zero_grad()

loss_out.backward()

info = {}

info.update(self.extra_grad_process())

After Change

torch.distributed.all_reduce_coalesced(
    grads, op=torch.distributed.ReduceOp.SUM)

for param_group in opt.param_groups:
    for p in param_group["params"]:
        if p.grad is not None:
            p.grad /= self.distributed_world_size

grad_info["allreduce_latency"] += time.time() - start

# Step the optimizer.
opt.step()
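The "After Change" fragment sums gradients across workers with an all-reduce and then divides each gradient by the world size, which amounts to averaging them before the optimizer step. The following is a minimal sketch of that arithmetic only, under stated assumptions: plain Python lists stand in for gradient tensors, and `all_reduce_sum` and `average_gradients` are hypothetical helpers that simulate the collective in a single process. It does not call `torch.distributed` and is not the RLlib implementation.

```python
import time
from typing import Dict, List


def all_reduce_sum(per_worker_grads: List[List[float]]) -> List[float]:
    """Simulate an all-reduce with SUM: the element-wise sum over all workers."""
    return [sum(values) for values in zip(*per_worker_grads)]


def average_gradients(per_worker_grads: List[List[float]],
                      grad_info: Dict[str, float]) -> List[float]:
    """Sum gradients across workers, divide by the world size, record latency."""
    world_size = len(per_worker_grads)  # stands in for distributed_world_size
    start = time.time()
    summed = all_reduce_sum(per_worker_grads)
    averaged = [g / world_size for g in summed]
    # Accumulate the elapsed time, mirroring the latency bookkeeping above.
    grad_info["allreduce_latency"] += time.time() - start
    return averaged


grad_info = {"allreduce_latency": 0.0}
worker_grads = [[1.0, 2.0], [3.0, 6.0]]  # two simulated workers, two parameters
averaged = average_gradients(worker_grads, grad_info)
```

Dividing after a SUM reduction gives every worker the same mean gradient, so each worker's subsequent `opt.step()` applies an identical update.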

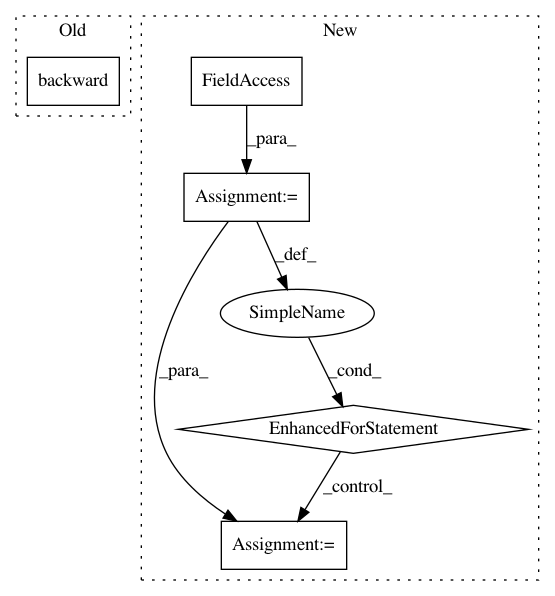

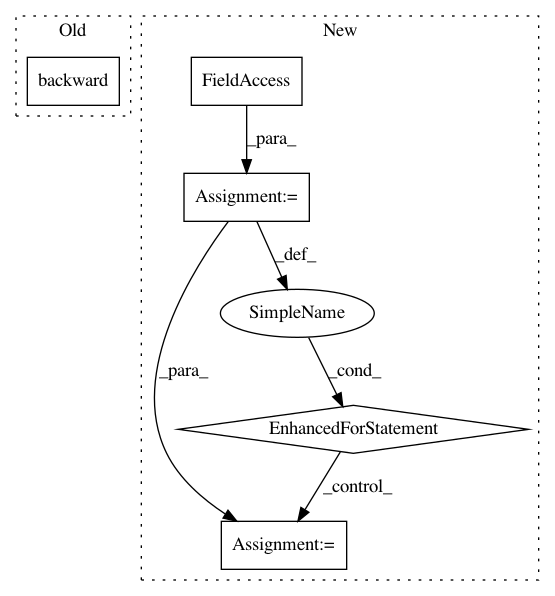

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: ray-project/ray

Commit Name: 428516056abe41f135133e732a8d44af6ce9a234

Time: 2020-04-15

Author: sven@anyscale.io

File Name: rllib/policy/torch_policy.py

Class Name: TorchPolicy

Method Name: learn_on_batch

Project Name: HyperGAN/HyperGAN

Commit Name: 6e7bc01007c8d762096a61ec39cd5334feabe0e5

Time: 2020-02-14

Author: mikkel@255bits.com

File Name: hypergan/trainers/simultaneous_trainer.py

Class Name: SimultaneousTrainer

Method Name: _step

Project Name: ray-project/ray

Commit Name: 428516056abe41f135133e732a8d44af6ce9a234

Time: 2020-04-15

Author: sven@anyscale.io

File Name: rllib/policy/torch_policy.py

Class Name: TorchPolicy

Method Name: compute_gradients