303217b34070dc47a86622b62764098999b0d7f5,gpytorch/lazy/lazy_tensor.py,LazyTensor,_quad_form_derivative,#LazyTensor#Any#Any#,378

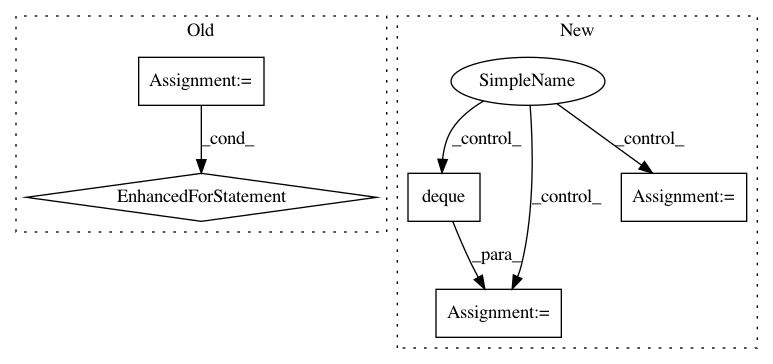

Before Change

```python
toggled = [False] * len(args)
for i, arg in enumerate(args):
    if not arg.requires_grad:
        arg.requires_grad = True
        toggled[i] = True

loss = (left_vecs * self._matmul(right_vecs)).sum()
loss.requires_grad_(True)
grads = torch.autograd.grad(loss, args, allow_unused=True)
```
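A minimal sketch of why grad mode matters for this excerpt. The record does not state the motivation for the change, so this shows only one plausible failure mode. Assumptions: a dense 3×3 tensor `A` stands in for the LazyTensor's arguments, `A @ v` stands in for `self._matmul`, and `old_style_grads` / `new_style_grads` are hypothetical names. When the caller has disabled grad mode, the Before Change code records no graph. `loss.requires_grad_(True)` then only silences the "does not require grad" error, and `allow_unused=True` turns the missing dependency into a `None` gradient.

```python
import torch

# Hypothetical stand-ins: A plays the role of the LazyTensor's args,
# and A @ v plays the role of self._matmul(v)
torch.manual_seed(0)
A = torch.randn(3, 3, requires_grad=True)
left_vecs = torch.randn(3, 2)
right_vecs = torch.randn(3, 2)


def old_style_grads():
    # Mirrors the Before Change excerpt: no explicit grad-mode handling
    loss = (left_vecs * (A @ right_vecs)).sum()
    loss.requires_grad_(True)  # makes loss a grad-requiring leaf, unconnected to A
    return torch.autograd.grad(loss, (A,), allow_unused=True)


def new_style_grads():
    # Mirrors the After Change excerpt: force grad mode on locally
    with torch.autograd.enable_grad():
        loss = (left_vecs * (A @ right_vecs)).sum()
        loss.requires_grad_(True)
        return torch.autograd.grad(loss, (A,), allow_unused=True)


with torch.no_grad():  # the caller has disabled grad mode
    old = old_style_grads()
    new = new_style_grads()

print(old[0] is None)  # no graph was recorded, so A's gradient is missing
print(torch.allclose(new[0], left_vecs @ right_vecs.T))
```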

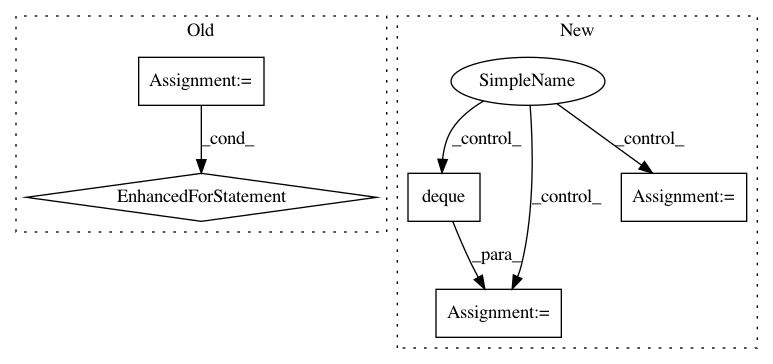

After Change

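```python
from collections import deque

import torch

# Excerpt from LazyTensor._quad_form_derivative: `args`, `left_vecs` and
# `right_vecs` are defined earlier in the method (not shown in the diff).
args_with_grads = tuple(arg for arg in args if arg.requires_grad)

# Record a graph even if the caller has disabled grad mode
with torch.autograd.enable_grad():
    loss = (left_vecs * self._matmul(right_vecs)).sum()
    loss.requires_grad_(True)
    actual_grads = deque(torch.autograd.grad(loss, args_with_grads, allow_unused=True))

# Now make sure that the object we return has one entry for every item in args
grads = []
for arg in args:
    if arg.requires_grad:
        grads.append(actual_grads.popleft())
    else:
        grads.append(None)

return grads

# (the diff excerpt then continues into the next method, `def _preconditioner(self):`)
```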
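The After Change pattern can be sketched as a standalone function: differentiate only the arguments that require grad, then re-expand the result so it has one entry per argument, with `None` where no gradient was requested. This is a sketch under stated assumptions, not gpytorch's implementation. The function name `quad_form_derivative`, its `matmul` callable parameter, and the dense matrices `A` and `B` are hypothetical stand-ins for `LazyTensor` and `self._matmul`. Unlike the Before Change code, it never mutates `requires_grad` on the caller's tensors.

```python
from collections import deque

import torch


def quad_form_derivative(args, matmul, left_vecs, right_vecs):
    """Gradients of sum(left_vecs * matmul(right_vecs)) w.r.t. each tensor in args.

    Hypothetical helper: returns a list aligned with args, with None wherever
    the corresponding arg has requires_grad=False.
    """
    args_with_grads = tuple(arg for arg in args if arg.requires_grad)
    if not args_with_grads:
        # Nothing to differentiate: skip autograd entirely
        return [None] * len(args)

    # Record a graph even if the caller runs under torch.no_grad()
    with torch.autograd.enable_grad():
        loss = (left_vecs * matmul(right_vecs)).sum()
        actual_grads = deque(torch.autograd.grad(loss, args_with_grads, allow_unused=True))

    # Re-expand so the result has one entry per item in args, in order
    return [actual_grads.popleft() if arg.requires_grad else None for arg in args]


# Usage: A is trainable, B is a constant; the quadratic form is tr(L^T (A + B) R)
torch.manual_seed(0)
A = torch.randn(3, 3, requires_grad=True)
B = torch.randn(3, 3)
L = torch.randn(3, 2)
R = torch.randn(3, 2)

with torch.no_grad():
    grads = quad_form_derivative((A, B), lambda v: (A + B) @ v, L, R)

print(grads[1] is None)  # B does not require grad
print(torch.allclose(grads[0], L @ R.T))  # d/dA tr(L^T A R) = L R^T
```

Using a `deque` keeps the re-expansion linear: each computed gradient is consumed exactly once, in the same order as `args_with_grads`.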

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: cornellius-gp/gpytorch

Commit Name: 303217b34070dc47a86622b62764098999b0d7f5

Time: 2018-12-12

Author: gpleiss@gmail.com

File Name: gpytorch/lazy/lazy_tensor.py

Class Name: LazyTensor

Method Name: _quad_form_derivative

Project Name: deepgram/kur

Commit Name: b2d8ee42f12c7d033bdeb929c9f38b3c90e48582

Time: 2017-03-23

Author: ajsyp@syptech.net

File Name: kur/kurfile.py

Class Name: Kurfile

Method Name: _parse_model

Project Name: GoogleCloudPlatform/PerfKitBenchmarker

Commit Name: c4289c6035f95f6e3964273ebf43dd1788a25a9a

Time: 2016-04-20

Author: skschneider@users.noreply.github.com

File Name: perfkitbenchmarker/requirements.py

Class Name:

Method Name: _CheckRequirements