6cb84f4b742861e823015cac18535efa468b0d81,autokeras/layer_transformer.py,,conv_dense_to_wider_layer,#Any#Any#Any#,128

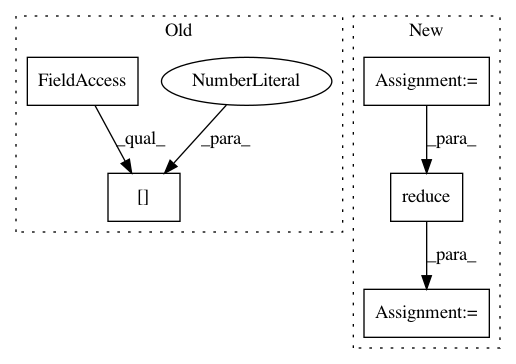

Before Change

pre_filter_shape = pre_layer.kernel_size

conv_func = get_conv_layer_func(len(pre_filter_shape))

n_pre_filters = pre_layer.filters

n_units = next_layer.get_weights().shape[1]

teacher_w1 = pre_layer.get_weights()[0]

teacher_w2 = next_layer.get_weights()[0]

n_total_weights = reduce(mul, teacher_w2.shape)
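For context on the `n_units` line: in Keras, `get_weights()` returns a Python list of NumPy arrays (for a `Dense` layer, the kernel followed by the bias), so calling `.shape` on the result raises `AttributeError`. The sketch below imitates that return value with plain NumPy arrays rather than a real layer; the `weights` list and its sizes are made up for illustration.

```python
import numpy as np

# Hypothetical stand-in for what a Dense layer's get_weights() returns:
# a list [kernel, bias], with kernel shaped (input_dim, units).
units = 4
weights = [np.zeros((6, units)), np.zeros(units)]

try:
    weights.shape  # mirrors next_layer.get_weights().shape
    failed = False
except AttributeError:
    # A list has no .shape attribute, so the lookup fails here.
    failed = True

# Indexing the kernel first recovers the unit count.
kernel_units = weights[0].shape[1]
```

Reading the unit count from the layer configuration avoids depending on the weight layout at all.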

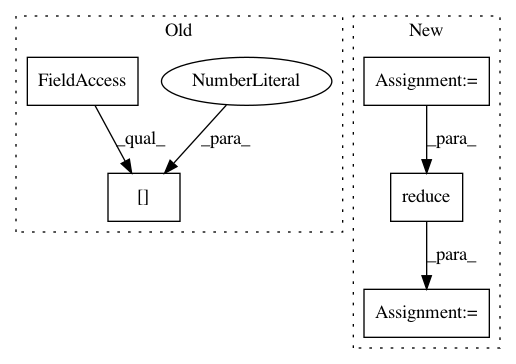

After Change

pre_filter_shape = pre_layer.kernel_size

conv_func = get_conv_layer_func(len(pre_filter_shape))

n_pre_filters = pre_layer.filters

n_units = next_layer.units

teacher_w1 = pre_layer.get_weights()[0]

teacher_w2 = next_layer.get_weights()[0]

n_total_weights = int(reduce(mul, teacher_w2.shape))

teacher_w2 = teacher_w2.reshape(int(n_total_weights / n_pre_filters / n_units), n_pre_filters, n_units)

teacher_b1 = pre_layer.get_weights()[1]

teacher_b2 = next_layer.get_weights()[1]

rand = np.random.randint(n_pre_filters, size=n_add_filters)

replication_factor = np.bincount(rand)

student_w1 = teacher_w1.copy()

student_w2 = teacher_w2.copy()

student_b1 = teacher_b1.copy()

# target layer update (i)

for i in range(len(rand)):

    teacher_index = rand[i]

    new_weight = teacher_w1[:, :, :, teacher_index]

    new_weight = new_weight[:, :, :, np.newaxis]

    student_w1 = np.concatenate((student_w1, new_weight), axis=3)

    student_b1 = np.append(student_b1, teacher_b1[teacher_index])

# next layer update (i+1)

for i in range(len(rand)):

    teacher_index = rand[i]

    factor = replication_factor[teacher_index] + 1

    new_weight = teacher_w2[:, teacher_index, :] * (1. / factor)

    new_weight_re = new_weight[:, np.newaxis, :]

    student_w2 = np.concatenate((student_w2, new_weight_re), axis=1)

    student_w2[:, teacher_index, :] = new_weight

new_pre_layer = conv_func(n_pre_filters + n_add_filters,

                          kernel_size=pre_filter_shape,

                          activation="relu",

                          padding="same",

                          input_shape=pre_layer.input_shape[1:])

new_pre_layer.build((None,) * (len(pre_filter_shape) + 1) + (pre_layer.input_shape[-1],))

new_pre_layer.set_weights((student_w1, student_b1))

new_next_layer = Dense(n_units, activation="relu")

n_new_total_weights = int(reduce(mul, student_w2.shape))

input_dim = int(n_new_total_weights / n_units)

new_next_layer.build((None, input_dim))

new_next_layer.set_weights((student_w2.reshape(input_dim, n_units), teacher_b2))

return new_pre_layer, new_next_layer
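The next-layer update can be checked in isolation. The following is a minimal NumPy sketch, not the project's code: `widen_next_weights`, the toy sizes, and the random activations are assumptions for illustration, and `minlength` is passed to `np.bincount` only so the count array covers every filter. It compares the dense layer's pre-activation output before and after widening, using a random array in place of the conv layer's activations.

```python
import numpy as np


def widen_next_weights(teacher_w2, rand):
    """Rescale and extend next-layer weights for replicated filters.

    teacher_w2 has shape (positions, n_pre_filters, n_units); rand lists the
    teacher filter index copied into each new filter slot.
    """
    replication_factor = np.bincount(rand, minlength=teacher_w2.shape[1])
    student_w2 = teacher_w2.copy()
    for teacher_index in rand:
        # A filter copied k times ends up with k + 1 columns sharing its weight.
        factor = replication_factor[teacher_index] + 1
        new_weight = teacher_w2[:, teacher_index, :] / factor
        student_w2 = np.concatenate(
            (student_w2, new_weight[:, np.newaxis, :]), axis=1)
        student_w2[:, teacher_index, :] = new_weight
    return student_w2


# Toy sizes, chosen only for this example.
rng = np.random.default_rng(0)
positions, n_pre_filters, n_units, n_add_filters = 4, 3, 2, 5
teacher_w2 = rng.normal(size=(positions, n_pre_filters, n_units))
rand = rng.integers(n_pre_filters, size=n_add_filters)

# Stand-in for the conv layer's activations, one column per filter.
teacher_act = rng.normal(size=(positions, n_pre_filters))
# Copying a filter's kernel and bias duplicates its activation column.
student_act = np.concatenate((teacher_act, teacher_act[:, rand]), axis=1)

student_w2 = widen_next_weights(teacher_w2, rand)
teacher_out = np.einsum("pf,pfu->u", teacher_act, teacher_w2)
student_out = np.einsum("pf,pfu->u", student_act, student_w2)
```

Under these assumptions `np.allclose(teacher_out, student_out)` holds, since each replicated filter's contribution is split evenly across its copies.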

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 5

Instances

Project Name: keras-team/autokeras

Commit Name: 6cb84f4b742861e823015cac18535efa468b0d81

Time: 2017-12-15

Author: jhfjhfj1@gmail.com

File Name: autokeras/layer_transformer.py

Class Name:

Method Name: conv_dense_to_wider_layer

Project Name: HyperGAN/HyperGAN

Commit Name: 9950f411a0ec5ae5c9b98959a84f25fd10f1c9ea

Time: 2017-06-10

Author: mikkel@255bits.com

File Name: hypergan/multi_component.py

Class Name: MultiComponent

Method Name: combine

Project Name: asyml/texar

Commit Name: e9d94ff87f957b37a7dfe37b0013beedea6acb3d

Time: 2017-11-10

Author: junxianh2@gmail.com

File Name: txtgen/modules/connectors/connectors.py

Class Name:

Method Name: _mlp_transform