e9aea97df1dc7878827ac193ba75cbea0b3ee351,ludwig/models/modules/sequence_decoders.py,SequenceGeneratorDecoder,__init__,#SequenceGeneratorDecoder#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,32

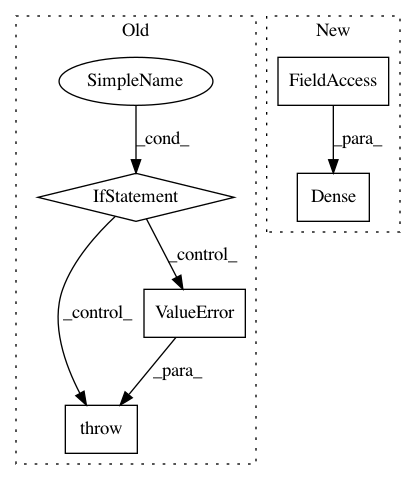

Before Change

```python
# Module-level imports this fragment relies on (assumed; not shown in the diff)
import tensorflow_addons as tfa
from tensorflow.keras.layers import LSTMCell

# Excerpt from SequenceGeneratorDecoder.__init__
self.decoder_cell = LSTMCell(state_size)

if attention_mechanism:
    if attention_mechanism == "bahdanau":
        pass
    elif attention_mechanism == "luong":
        self.attention_mechanism = tfa.seq2seq.LuongAttention(
            state_size,
            None,  # todo tf2: confirm on need
            memory_sequence_length=max_sequence_length  # todo tf2: confirm inputs or output seq length
        )
    else:
        raise ValueError(
            'Attention specification "{}" is invalid. Valid values are '
            '"bahdanau" or "luong".'.format(self.attention_mechanism))

    self.decoder_cell = tfa.seq2seq.AttentionWrapper(
        self.decoder_cell,
        self.attention_mechanism
    )
```
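The Before Change code delegates scoring to `tfa.seq2seq.LuongAttention`. The dependency-free sketch below roughly illustrates what Luong's multiplicative ("general") attention computes at a single decoder step. Each encoder state is projected by a weight matrix and scored against the decoder query with a dot product. Positions at or beyond `memory_sequence_length` are masked out, and a softmax over the scores weights the encoder states into a context vector. The helper names, the matrix `w`, and the toy inputs are hypothetical. This is not the TensorFlow Addons implementation, and it omits options such as `scale`.

```python
import math
from typing import List, Optional, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    # Subtract the max for numerical stability; -inf scores get weight 0.0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def luong_attention_step(query: Vector, memory: List[Vector], w: Matrix,
                         memory_sequence_length: Optional[int] = None
                         ) -> Tuple[Vector, Vector]:
    """Hypothetical sketch of one step of Luong 'general' attention.

    score_s = query . (W h_s); weights = softmax(scores);
    context = sum_s weights_s * h_s.
    """
    keys = [matvec(w, h) for h in memory]  # project each encoder state
    scores = [dot(query, k) for k in keys]
    if memory_sequence_length is not None:
        # Mask padded encoder positions so they receive zero attention weight.
        scores = [s if i < memory_sequence_length else -math.inf
                  for i, s in enumerate(scores)]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the unprojected encoder states.
    dim = len(memory[0])
    context = [sum(a * h[d] for a, h in zip(weights, memory)) for d in range(dim)]
    return weights, context


# Toy example: three encoder states, the last one is padding.
memory = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
w = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for readability
weights, context = luong_attention_step([2.0, 0.0], memory, w,
                                        memory_sequence_length=2)
```

Here the padded third state receives exactly zero weight even though its raw score would have been the largest. This masking is the role that `memory_sequence_length` plays in the original call.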

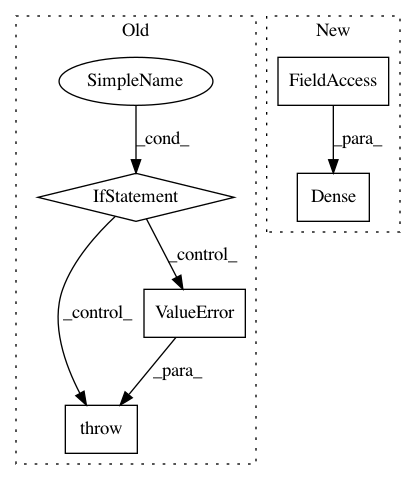

After Change

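```python
# Module-level imports this fragment relies on (assumed; not shown in the diff)
import tensorflow as tf
import tensorflow_addons as tfa

# Excerpt from SequenceGeneratorDecoder.__init__
self.decoder_embedding = tf.keras.layers.Embedding(
    input_dim=output_vocab_size,
    output_dim=embedding_dims)

self.dense_layer = tf.keras.layers.Dense(output_vocab_size)

self.decoder_rnncell = tf.keras.layers.LSTMCell(rnn_units)

# Sampler
self.sampler = tfa.seq2seq.sampler.TrainingSampler()
```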
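The After Change code creates a `tfa.seq2seq.sampler.TrainingSampler`. During training, it reads the decoder's next input from the supplied ground-truth sequence rather than from the model's own predictions (teacher forcing). The pure-Python sketch below contrasts that behavior with greedy feedback on a toy model. `run_decoder` and `toy_model` are hypothetical stand-ins, not part of TensorFlow Addons, and real decoders operate on embedded token tensors rather than integer IDs.

```python
from typing import Callable, List, Tuple


def run_decoder(predict_next: Callable[[int], int], targets: List[int],
                start_token: int, teacher_forcing: bool = True
                ) -> Tuple[List[int], List[int]]:
    """Unroll a toy decoder for len(targets) steps.

    With teacher_forcing=True, the input at step t is the ground-truth token
    from step t-1 (conceptually what a training sampler feeds); otherwise the
    model's own previous prediction is fed back (greedy decoding).
    """
    fed_inputs: List[int] = []
    predictions: List[int] = []
    prev = start_token
    for t in range(len(targets)):
        fed_inputs.append(prev)
        pred = predict_next(prev)
        predictions.append(pred)
        prev = targets[t] if teacher_forcing else pred
    return fed_inputs, predictions


def toy_model(token: int) -> int:
    # Hypothetical "model": always predicts (previous token + 1) mod 5.
    return (token + 1) % 5


targets = [3, 1, 4]
forced_inputs, forced_preds = run_decoder(toy_model, targets, start_token=0)
greedy_inputs, greedy_preds = run_decoder(toy_model, targets, start_token=0,
                                          teacher_forcing=False)
```

With teacher forcing, the decoder's inputs are the targets shifted right by one with the start token prepended (`[0, 3, 1]` here), whatever the model predicts. In greedy mode they are `[0, 1, 2]`, because each prediction is fed back, so an early mistake would propagate to later steps.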

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| uber/ludwig | e9aea97df1dc7878827ac193ba75cbea0b3ee351 | 2020-05-05 | jimthompson5802@gmail.com | `ludwig/models/modules/sequence_decoders.py` | `SequenceGeneratorDecoder` | `__init__` |
| NVIDIA/OpenSeq2Seq | f0cecdb5ecce2d1bc555f01df98f61ebd80e4472 | 2019-01-15 | boris.ginsburg@gmail.com | `open_seq2seq/parts/transformer/attention_layer.py` | `Attention` | `__init__` |
| tensorflow/privacy | 751eaead545d45bcc47bff7d82656b08c474b434 | 2019-06-10 | choquette.christopher@gmail.com | `privacy/bolton/model.py` | `Bolton` | `compile` |