700abc65fd2172a2c6809dd9b72cf50fc2407772,allennlp/models/encoder_decoders/composed_seq2seq.py,ComposedSeq2Seq,__init__,#ComposedSeq2Seq#Any#Any#Any#Any#Any#Any#Any#,48

Before Change

self._encoder = encoder

self._decoder = decoder

if self._encoder.get_output_dim() != self._decoder.get_output_dim():

raise ConfigurationError(

f"Encoder output dimension {self._encoder.get_output_dim()} should be"

f" equal to decoder dimension {self._decoder.get_output_dim()}."

)

if tied_source_embedder_key:

# A bit of an ugly hack to tie embeddings.

# Works only for `BasicTextFieldEmbedder`, and since

# it can have multiple embedders, and `SeqDecoder` contains only a single embedder, we need

# the key to select the source embedder to replace it with the target embedder from the decoder.

if not isinstance(self._source_text_embedder, BasicTextFieldEmbedder):

raise ConfigurationError(

"Unable to tie embeddings,"

"Source text embedder is not an instance of `BasicTextFieldEmbedder`."

)

source_embedder = self._source_text_embedder._token_embedders[tied_source_embedder_key]

if not isinstance(source_embedder, Embedding):

raise ConfigurationError(
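
The comments in the snippet above describe tying embeddings by using a key to pick one source embedder and replacing it with the decoder's target embedder. The following is a minimal sketch of that idea in plain Python, not the AllenNLP implementation: `ToyEmbedding` and `tie_source_embedder` are hypothetical stand-ins, and the dimension check is an assumption, since the original snippet is truncated before that point.

```python
from typing import Dict, Optional


class ToyEmbedding:
    """Hypothetical stand-in for an embedding layer (vocab_size x dim weights)."""

    def __init__(self, vocab_size: int, dim: int) -> None:
        self.weight = [[0.0] * dim for _ in range(vocab_size)]

    def get_output_dim(self) -> int:
        return len(self.weight[0])


def tie_source_embedder(
    token_embedders: Dict[str, ToyEmbedding],
    target_embedder: ToyEmbedding,
    key: Optional[str],
) -> None:
    """Replace the source embedder stored under `key` with the target embedder."""
    if not key:
        return  # No key given, so nothing is tied.
    if key not in token_embedders:
        raise KeyError(f"No source embedder registered under '{key}'.")
    source = token_embedders[key]
    # Assumed check: tying only makes sense when both embedders share a dimension.
    if source.get_output_dim() != target_embedder.get_output_dim():
        raise ValueError("Source and target embedder dimensions differ.")
    # Both sides now hold the same object, so they share one weight table.
    token_embedders[key] = target_embedder


# The source side may hold several embedders; the key selects which one to tie.
source_embedders = {"tokens": ToyEmbedding(10, 4), "chars": ToyEmbedding(5, 4)}
target = ToyEmbedding(10, 4)
tie_source_embedder(source_embedders, target, "tokens")

# An update made through the target is visible through the tied source entry.
target.weight[0][0] = 1.0
```

Because tying here means sharing one object rather than copying weights, only the embedder selected by the key is affected; the other source embedders keep their own parameters.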

After Change

encoder: Seq2SeqEncoder,

decoder: SeqDecoder,

tied_source_embedder_key: Optional[str] = None,

initializer: InitializerApplicator = InitializerApplicator(),

**kwargs,

) -> None:

super().__init__(vocab, **kwargs)

self._source_text_embedder = source_text_embedder

self._encoder = encoder

self._decoder = decoder

if self._encoder.get_output_dim() != self._decoder.get_output_dim():

raise ConfigurationError(

f"Encoder output dimension {self._encoder.get_output_dim()} should be"

f" equal to decoder dimension {self._decoder.get_output_dim()}."

)

if tied_source_embedder_key:

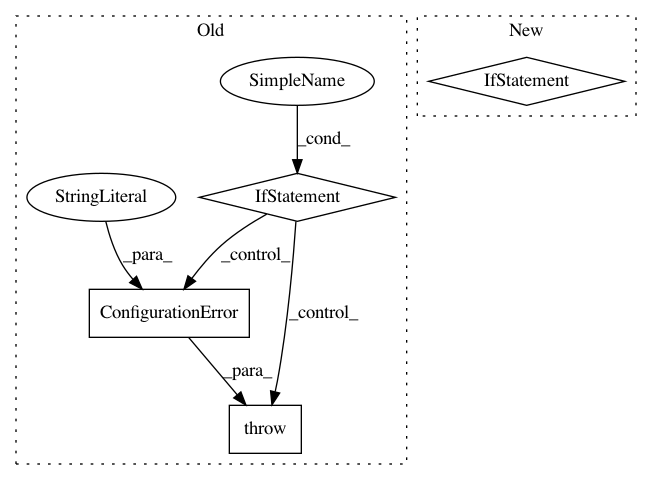

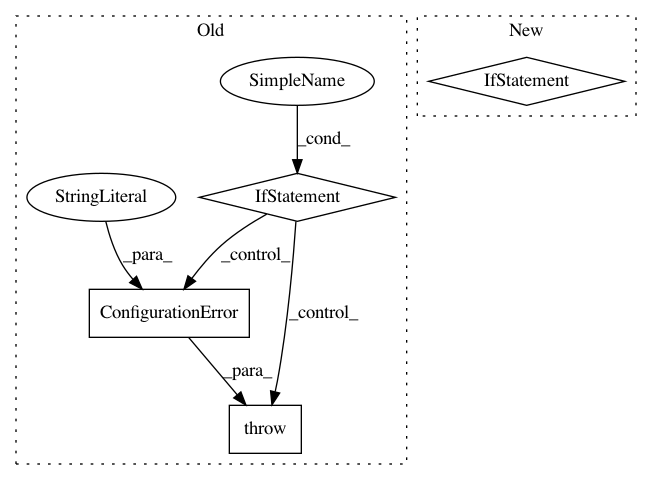

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: allenai/allennlp

Commit Name: 700abc65fd2172a2c6809dd9b72cf50fc2407772

Time: 2020-02-03

Author: mattg@allenai.org

File Name: allennlp/models/encoder_decoders/composed_seq2seq.py

Class Name: ComposedSeq2Seq

Method Name: __init__

Project Name: allenai/allennlp

Commit Name: ce060badd12d3047e3af81cf97d0b62805e397e5

Time: 2018-12-20

Author: brendanr@allenai.org

File Name: allennlp/models/bidirectional_lm.py

Class Name: BidirectionalLanguageModel

Method Name: forward

Project Name: allenai/allennlp

Commit Name: a58eb2cc4ab506f1611c06a308cb6a2ae710707b

Time: 2017-09-26

Author: joelgrus@gmail.com

File Name: allennlp/training/trainer.py

Class Name: Trainer

Method Name: _restore_checkpoint