0ad33d606682537466f3430fc6d6ac7d47460f1a,beginner_source/examples_autograd/two_layer_net_custom_function.py,,,#,50

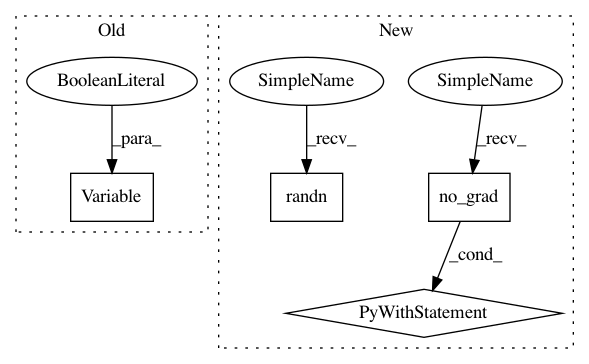

Before Change

# Create random Tensors to hold inputs and outputs, and wrap them in Variables.

x = Variable(torch.randn(N, D_in).type(dtype), requires_grad=False)

y = Variable(torch.randn(N, D_out).type(dtype), requires_grad=False)

# Create random Tensors for weights, and wrap them in Variables.

w1 = Variable(torch.randn(D_in, H).type(dtype), requires_grad=True)

w2 = Variable(torch.randn(H, D_out).type(dtype), requires_grad=True)

After Change

# Create random Tensors for weights.

w1 = torch.randn(D_in, H, device=device, dtype=dtype, requires_grad=True)

w2 = torch.randn(H, D_out, device=device, dtype=dtype, requires_grad=True)

learning_rate = 1e-6

for t in range(500):

    # To apply our Function, we use the Function.apply method. We alias this as "relu".

    relu = MyReLU.apply

    # Forward pass: compute predicted y using operations; we compute

    # ReLU using our custom autograd operation.

    y_pred = relu(x.mm(w1)).mm(w2)

    # Compute and print loss

    loss = (y_pred - y).pow(2).sum()

    print(t, loss.item())

    # Use autograd to compute the backward pass.

    loss.backward()

    # Update weights using gradient descent

    with torch.no_grad():

        w1 -= learning_rate * w1.grad

        w2 -= learning_rate * w2.grad

        # Manually zero the gradients after updating weights

        w1.grad.zero_()

        w2.grad.zero_()
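The changed lines call `MyReLU.apply` and use `x`, `y`, `device`, `dtype` and the layer sizes without showing where they come from. The following is a minimal, self-contained sketch of how the pieces could fit together. It assumes `MyReLU` follows the usual `torch.autograd.Function` pattern with static `forward` and `backward` methods. The layer sizes, the CPU device, the seed and the `train` helper are illustrative choices, not taken from the commit.

```python
import torch


class MyReLU(torch.autograd.Function):
    """ReLU as a custom autograd Function (assumed shape of the class)."""

    @staticmethod
    def forward(ctx, input):
        # Save the input so backward can tell where it was negative.
        ctx.save_for_backward(input)
        return input.clamp(min=0)

    @staticmethod
    def backward(ctx, grad_output):
        (input,) = ctx.saved_tensors
        # Pass the incoming gradient through, zeroing it where input < 0.
        grad_input = grad_output.clone()
        grad_input[input < 0] = 0
        return grad_input


def train(steps=500, seed=0):
    """Hypothetical helper: run the 'After Change' loop, return the losses."""
    torch.manual_seed(seed)
    dtype = torch.float
    device = torch.device("cpu")  # assumption: CPU only
    N, D_in, H, D_out = 64, 1000, 100, 10  # assumed sizes

    # Plain tensors replace the Variable wrappers; requires_grad defaults
    # to False for the data and is set explicitly for the weights.
    x = torch.randn(N, D_in, device=device, dtype=dtype)
    y = torch.randn(N, D_out, device=device, dtype=dtype)
    w1 = torch.randn(D_in, H, device=device, dtype=dtype, requires_grad=True)
    w2 = torch.randn(H, D_out, device=device, dtype=dtype, requires_grad=True)

    learning_rate = 1e-6
    losses = []
    for t in range(steps):
        y_pred = MyReLU.apply(x.mm(w1)).mm(w2)
        loss = (y_pred - y).pow(2).sum()
        losses.append(loss.item())
        loss.backward()
        # Update outside of autograd tracking, then reset the gradients.
        with torch.no_grad():
            w1 -= learning_rate * w1.grad
            w2 -= learning_rate * w2.grad
            w1.grad.zero_()
            w2.grad.zero_()
    return losses


if __name__ == "__main__":
    history = train()
    print(history[0], history[-1])
```

Collecting the losses in a list instead of printing each step is only a convenience for inspecting the run afterwards; the update rule itself is the same as in the changed lines.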

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: pytorch/tutorials

Commit Name: 0ad33d606682537466f3430fc6d6ac7d47460f1a

Time: 2018-04-24

Author: soumith@gmail.com

File Name: beginner_source/examples_autograd/two_layer_net_custom_function.py

Class Name:

Method Name:

Project Name: pytorch/tutorials

Commit Name: 0ad33d606682537466f3430fc6d6ac7d47460f1a

Time: 2018-04-24

Author: soumith@gmail.com

File Name: beginner_source/former_torchies/autograd_tutorial.py

Class Name:

Method Name:

Project Name: pytorch/tutorials

Commit Name: 0ad33d606682537466f3430fc6d6ac7d47460f1a

Time: 2018-04-24

Author: soumith@gmail.com

File Name: beginner_source/examples_autograd/two_layer_net_custom_function.py

Class Name:

Method Name:

Project Name: pytorch/tutorials

Commit Name: 0ad33d606682537466f3430fc6d6ac7d47460f1a

Time: 2018-04-24

Author: soumith@gmail.com

File Name: beginner_source/blitz/autograd_tutorial.py

Class Name:

Method Name: