1a693073cd01fffa7cb3f018b7221459703119f9,ch09/01_cartpole_dqn.py,,,#,48

Before Change

optimizer = optim.Adam(net.parameters(), lr=LEARNING_RATE)

mse_loss = nn.MSELoss()

batch_states, batch_actions, batch_targets = [], [], []

total_rewards = []

for step_idx, exp in enumerate(exp_source):

    selector.epsilon = max(EPSILON_STOP, EPSILON_START - step_idx / EPSILON_STEPS)
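
The epsilon update in the loop above is a linear decay clamped at a floor. A minimal sketch of that schedule in isolation follows. The three constant values are assumptions chosen for illustration (the snippet does not show the values used in the commit), and `epsilon_at` is a hypothetical helper name.

```python
# Assumed values for illustration only; the snippet does not show the real ones.
EPSILON_START = 1.0
EPSILON_STOP = 0.02
EPSILON_STEPS = 5000


def epsilon_at(step_idx: int) -> float:
    """Decay epsilon linearly from EPSILON_START and clamp it at EPSILON_STOP."""
    return max(EPSILON_STOP, EPSILON_START - step_idx / EPSILON_STEPS)


if __name__ == "__main__":
    # Print the schedule at the start, midway, and long after the decay ends.
    for step in (0, 2500, 100000):
        print(step, epsilon_at(step))
```

With these assumed constants, epsilon reaches its floor after 4900 steps and stays there for the rest of training.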

After Change

continue

# sample batch

batch = replay_buffer.sample(BATCH_SIZE)

batch_states = [exp.state for exp in batch]

batch_actions = [exp.action for exp in batch]

batch_targets = [calc_target(net, exp.reward, exp.last_state)
                 for exp in batch]

# train

optimizer.zero_grad()

states_v = Variable(torch.from_numpy(np.array(batch_states, dtype=np.float32)))

net_q_v = net(states_v)
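
The change above replaces incrementally accumulated batch lists with a batch sampled from a replay buffer, with targets computed per sampled experience. The sketch below illustrates that pattern in plain Python, under stated assumptions: `Experience`, `ReplayBuffer`, `GAMMA`, `toy_q`, and this version of `calc_target` are hypothetical stand-ins, not the commit's actual definitions, and `toy_q` replaces the neural network so the example runs without PyTorch.

```python
import random
from collections import deque, namedtuple

# Hypothetical stand-ins; the commit's own definitions are not shown in the snippet.
Experience = namedtuple("Experience", ["state", "action", "reward", "last_state"])
GAMMA = 0.99  # assumed discount factor


class ReplayBuffer:
    """Fixed-capacity buffer that discards the oldest experience when full."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def __len__(self):
        return len(self.buffer)

    def add(self, exp):
        self.buffer.append(exp)

    def sample(self, batch_size):
        # Uniform sampling without replacement.
        return random.sample(list(self.buffer), batch_size)


def calc_target(q_fn, reward, last_state):
    """One-step target: reward plus discounted best Q-value of the next state."""
    if last_state is None:  # episode ended, nothing to bootstrap from
        return reward
    return reward + GAMMA * max(q_fn(last_state))


def toy_q(state):
    """Toy Q-function returning one value per action (stands in for the network)."""
    return [sum(state), -sum(state)]


replay_buffer = ReplayBuffer(capacity=100)
replay_buffer.add(Experience([0.0, 1.0], 0, 1.0, [1.0, 1.0]))
replay_buffer.add(Experience([1.0, 1.0], 1, 1.0, None))

batch = replay_buffer.sample(2)
batch_states = [exp.state for exp in batch]
batch_actions = [exp.action for exp in batch]
batch_targets = [calc_target(toy_q, exp.reward, exp.last_state) for exp in batch]
```

Sampling from the buffer decorrelates consecutive transitions, which is the usual motivation for this kind of change in DQN-style training loops.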

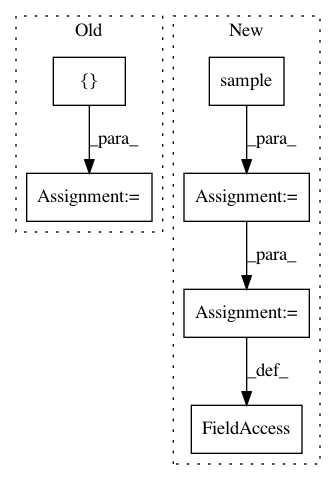

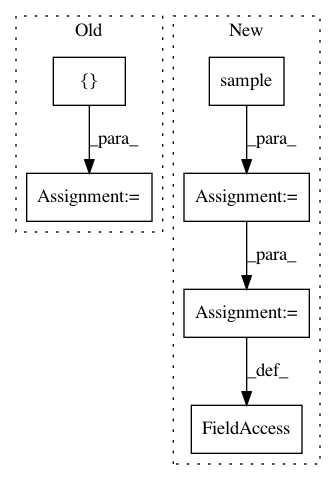

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 1a693073cd01fffa7cb3f018b7221459703119f9

Time: 2017-11-30

Author: max.lapan@gmail.com

File Name: ch09/01_cartpole_dqn.py

Class Name:

Method Name:

Project Name: chainer/chainerrl

Commit Name: 9801f28774b8d4aeed7d65025bf2451814c1db6d

Time: 2019-03-26

Author: prabhat.nagarajan@gmail.com

File Name: chainerrl/agents/ddpg.py

Class Name: DDPG

Method Name: batch_act

Project Name: HyperGAN/HyperGAN

Commit Name: 314f925d0a089d5a44331c95eb2bfefcae1b0bc7

Time: 2020-02-21

Author: mikkel@255bits.com

File Name: hypergan/samplers/batch_walk_sampler.py

Class Name: BatchWalkSampler

Method Name: __init__