ee235aff686c5ec1048138d799107801df20b819,sac/algos/sac.py,SAC,_init_actor_update,#SAC#,224

Before Change

self._vf_params = self._vf.get_params_internal()

log_target_t = self._qf.get_output_for(

self._obs_pl, tf.tanh(policy_dist.x_t), reuse=True) // N

corr = self._squash_correction(policy_dist.x_t)

if self._reparameterize:

After Change

self._vf_t = self._vf.get_output_for(self._observations_ph, reuse=True) // N

self._vf_params = self._vf.get_params_internal()

if self._regularize_actions:

D_s = actions.shape.as_list()[-1]

policy_prior = tf.contrib.distributions.MultivariateNormalDiag(

loc=tf.zeros(D_s), scale_diag=tf.ones(D_s))

policy_prior_log_probs = policy_prior.log_prob(actions)

else:

policy_prior_log_probs = 0.0

log_target = self._qf.get_output_for(

self._observations_ph, actions, reuse=True) // N

policy_kl_loss = tf.reduce_mean(log_pi * tf.stop_gradient(

log_pi - log_target + self._vf_t - policy_prior_log_probs))

if self._reparameterize:

kl_loss_t = tf.reduce_mean(log_pi_t - log_target_t - corr)

else:

kl_loss_t = tf.reduce_mean(log_pi_t * tf.stop_gradient(

log_pi_t - log_target_t - corr + self._vf_t))

policy_loss = (policy_kl_loss

+ policy_regularization_loss)

self._vf_loss_t = 0.5 * tf.reduce_mean((

self._vf_t

- tf.stop_gradient(log_target - log_pi + policy_prior_log_probs)

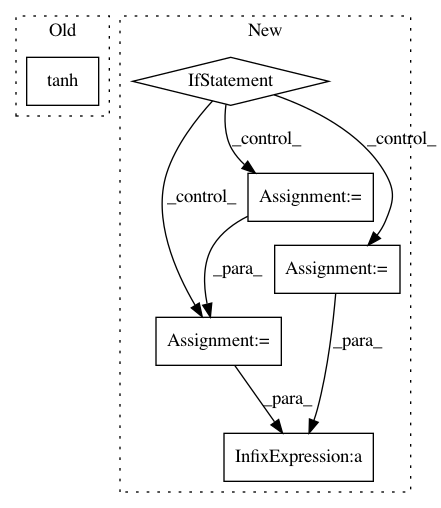

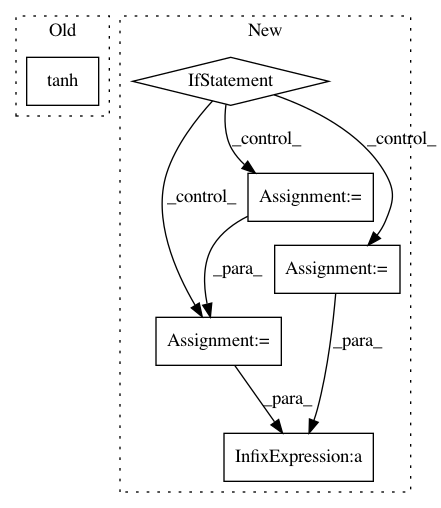

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: rail-berkeley/softlearning

Commit Name: ee235aff686c5ec1048138d799107801df20b819

Time: 2018-05-22

Author: kristian.hartikainen@gmail.com

File Name: sac/algos/sac.py

Class Name: SAC

Method Name: _init_actor_update

Project Name: NifTK/NiftyNet

Commit Name: 30dfa316d689a232c2e6deaa813827be52aba961

Time: 2017-09-12

Author: wenqi.li@ucl.ac.uk

File Name: niftynet/network/simulator_gan.py

Class Name: ImageGenerator

Method Name: layer_op

Project Name: rail-berkeley/softlearning

Commit Name: 613574cdf27ee13c670f2bb4239d42f69e10cfc5

Time: 2018-05-22

Author: kristian.hartikainen@gmail.com

File Name: sac/policies/real_nvp.py

Class Name: RealNVPPolicy

Method Name: build