70888e622cf22e66188a74a0c29cf8d5f51ad9f9,basic/model.py,Model,_build_loss,#Model#,263

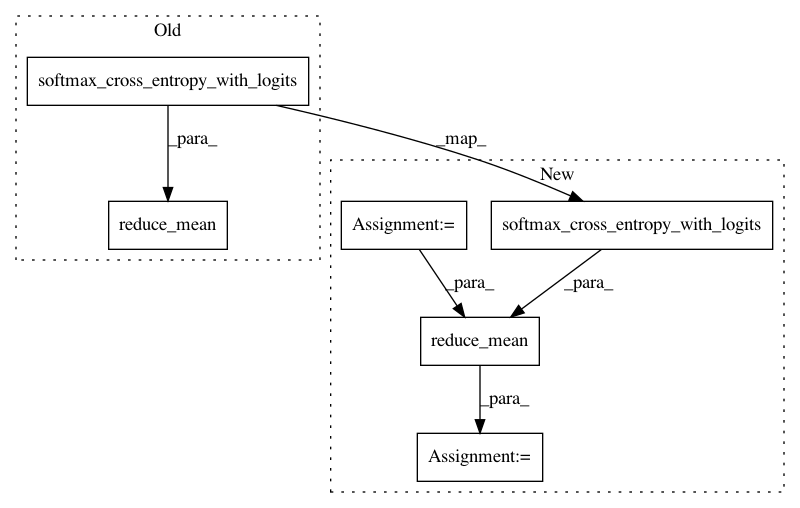

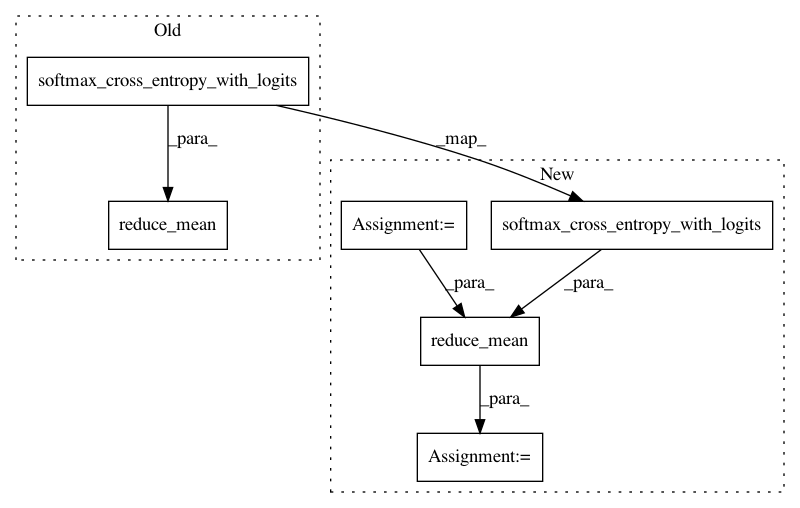

Before Change

    N, M, JX, JQ, VW, VC = \
        config.batch_size, config.max_num_sents, config.max_sent_size, \
        config.max_ques_size, config.word_vocab_size, config.char_vocab_size
    ce_loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
        self.logits, tf.cast(tf.reshape(self.y, [-1, M * JX]), "float")))
    tf.add_to_collection("losses", ce_loss)
    ce_loss2 = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
        self.logits2, tf.cast(tf.reshape(self.y2, [-1, M * JX]), "float")))
    tf.add_to_collection("losses", ce_loss2)

After Change

    N, M, JX, JQ, VW, VC = \
        config.batch_size, config.max_num_sents, config.max_sent_size, \
        config.max_ques_size, config.word_vocab_size, config.char_vocab_size
    loss_mask = tf.reduce_max(tf.cast(self.q_mask, "float"), 1)
    losses = tf.nn.softmax_cross_entropy_with_logits(
        self.logits, tf.cast(tf.reshape(self.y, [-1, M * JX]), "float"))
    ce_loss = tf.reduce_mean(loss_mask * losses)
    tf.add_to_collection("losses", ce_loss)
    ce_loss2 = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
        self.logits2, tf.cast(tf.reshape(self.y2, [-1, M * JX]), "float")))
    tf.add_to_collection("losses", ce_loss2)
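
The effect of the change on `ce_loss` can be illustrated with a minimal pure-Python sketch that does not depend on TensorFlow. It assumes that `q_mask` holds one row of booleans per example (true where the question has a real token), so that taking the maximum over each row gives 1.0 for examples with a non-empty question and 0.0 otherwise. The function names `softmax_cross_entropy` and `masked_mean_loss` are hypothetical and do not appear in the original code. As in the After Change snippet, only the first loss is masked there; this sketch covers that masked loss only.

```python
import math


def softmax_cross_entropy(logits, labels):
    """Cross-entropy between one row of logits and one label distribution."""
    # Subtract the row maximum before exponentiating for numerical stability.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return sum(-t * (x - log_z) for x, t in zip(logits, labels))


def masked_mean_loss(batch_logits, batch_labels, q_mask):
    """Hypothetical stand-in for tf.reduce_mean(loss_mask * losses)."""
    # Assumption: q_mask has one row of booleans per example.
    # The row maximum is 1.0 if any question token is real, else 0.0.
    loss_mask = [max(float(flag) for flag in row) for row in q_mask]
    losses = [
        softmax_cross_entropy(logits, labels)
        for logits, labels in zip(batch_logits, batch_labels)
    ]
    # The mean is taken over the whole batch, masked examples included,
    # so the divisor is the batch size and not the number of kept examples.
    return sum(w * l for w, l in zip(loss_mask, losses)) / len(losses)


if __name__ == "__main__":
    batch_logits = [[0.0, 0.0], [5.0, -5.0]]
    batch_labels = [[1.0, 0.0], [0.0, 1.0]]
    # The second example has an all-False question mask and contributes zero loss.
    q_mask = [[True, False], [False, False]]
    print(masked_mean_loss(batch_logits, batch_labels, q_mask))
```

Because the masked losses are averaged with a plain mean, a batch with many masked examples yields a proportionally smaller loss. Dividing by the sum of the mask instead would average over the kept examples only; that is a different design choice from the one shown in the diff.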

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: wenwei202/iss-rnns

Commit Name: 70888e622cf22e66188a74a0c29cf8d5f51ad9f9

Time: 2016-10-23

Author: seominjoon@gmail.com

File Name: basic/model.py

Class Name: Model

Method Name: _build_loss

Project Name: dhlab-epfl/dhSegment

Commit Name: 3d7a7eac0c0b3e8718bfe9c83df323a37fb2f546

Time: 2018-01-17

Author: seg.benoit@gmail.com

File Name: doc_seg/model.py

Class Name:

Method Name: model_fn

Project Name: jakeret/tf_unet

Commit Name: 31516c4984224611174203a9822e102f94def3ac

Time: 2017-01-08

Author: jakeret@phys.ethz.ch

File Name: tf_unet/unet.py

Class Name: Unet

Method Name: _get_cost