247203f29b7e841204c76d922c1ea5b2680c3663,reagent/models/seq2slate.py,DecoderPyTorch,forward,#DecoderPyTorch#Any#Any#Any#Any#,213

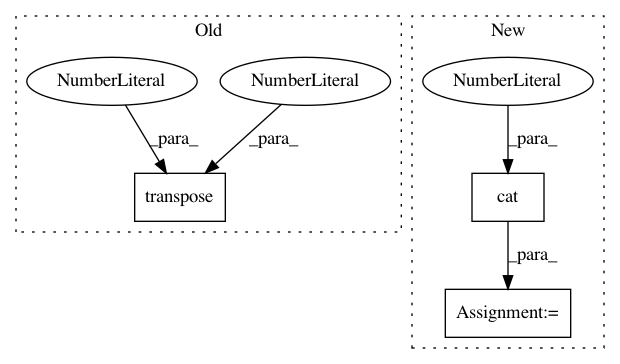

Before Change

self.num_heads = num_heads

def forward(self, tgt, memory, tgt_src_mask, tgt_tgt_mask):

tgt = tgt.transpose(0, 1)

memory = memory.transpose(0, 1)

# Pytorch assumes:

# (1) mask is bool

# (2) True -> item should be ignored in attention
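The two assumptions in the comment above can be illustrated with a small sketch. It is written in dependency-free Python rather than PyTorch, and it assumes the incoming mask uses 1 for "may attend" and 0 for "blocked", which the snippet does not state. The helper names `to_ignore_mask` and `masked_softmax` are hypothetical and are not part of reagent or PyTorch.

```python
import math


def to_ignore_mask(keep_mask):
    """Turn a 1/0 'may attend' mask into a bool mask where True means 'ignore'."""
    return [[value == 0 for value in row] for row in keep_mask]


def masked_softmax(scores, ignore_mask):
    """Row-wise softmax that assigns zero weight to positions flagged True."""
    weights = []
    for score_row, ignore_row in zip(scores, ignore_mask):
        kept = [s for s, ignore in zip(score_row, ignore_row) if not ignore]
        if not kept:
            # Choice made for this sketch only: a fully ignored row gets all zeros.
            weights.append([0.0] * len(score_row))
            continue
        peak = max(kept)  # subtract the max for numerical stability
        exps = [
            0.0 if ignore else math.exp(s - peak)
            for s, ignore in zip(score_row, ignore_row)
        ]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Assumed input convention: 1 = may attend, 0 = blocked (a causal pattern here).
keep_mask = [[1, 0, 0], [1, 1, 0], [1, 1, 1]]
ignore_mask = to_ignore_mask(keep_mask)

scores = [[0.5, 2.0, -1.0], [1.0, 1.0, 3.0], [0.0, 0.0, 0.0]]
attention = masked_softmax(scores, ignore_mask)
```

In PyTorch itself the same inversion would typically be an elementwise comparison such as `mask == 0`, which yields a bool tensor. Whether the original method does exactly that is not visible in this excerpt.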

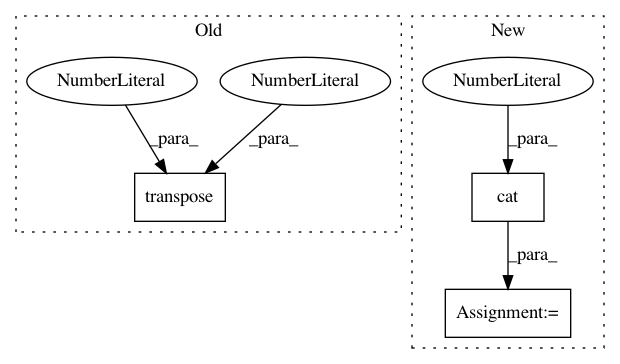

After Change

probs_for_placeholders = torch.zeros(

batch_size, tgt_seq_len, 2, device=output.device

)

probs = torch.cat((probs_for_placeholders, output), dim=2)

return probs

class MultiHeadedAttention(nn.Module):
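The shape effect of the `torch.zeros` / `torch.cat(..., dim=2)` pair can be traced with nested lists. This is only a sketch: it assumes `output` has shape `(batch_size, tgt_seq_len, num_candidates)`, which is inferred from the `torch.zeros` call rather than stated in the snippet. The function name `prepend_placeholder_probs` is hypothetical, and the snippet does not say what the two placeholder slots stand for.

```python
def prepend_placeholder_probs(output, num_placeholders=2):
    """Prepend zero probabilities along the last axis of a nested list.

    Mirrors concatenating a zero block of shape (batch, seq_len, 2) in front
    of `output` along dim=2, so candidate indices shift up by two.
    """
    padding = [0.0] * num_placeholders
    return [[padding + list(step) for step in sequence] for sequence in output]


# batch_size=1, tgt_seq_len=2, two real candidates per step (made-up values)
output = [[[0.7, 0.3], [0.4, 0.6]]]
probs = prepend_placeholder_probs(output)
```

After the call, each decoding step has four entries: two zeros for the placeholder slots followed by the original candidate probabilities, so the placeholders can never be selected by an argmax over a step.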

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 3

Instances

Project Name: facebookresearch/Horizon

Commit Name: 247203f29b7e841204c76d922c1ea5b2680c3663

Time: 2020-12-08

Author: czxttkl@fb.com

File Name: reagent/models/seq2slate.py

Class Name: DecoderPyTorch

Method Name: forward

Project Name: arraiy/torchgeometry

Commit Name: 50839f8ed95147c71f9f045495ed45380a2ce513

Time: 2019-11-19

Author: priba@cvc.uab.cat

File Name: test/color/test_hls.py

Class Name: TestRgbToHls

Method Name: test_nan_rgb_to_hls

Project Name: OpenNMT/OpenNMT-py

Commit Name: c13a558767cbc19b612968eb4d01a1f26d5df688

Time: 2017-06-10

Author: wangqian5730@gmail.com

File Name: onmt/Models.py

Class Name: NMTModel

Method Name: _fix_enc_hidden