f9b98760f445fc0219cfc9c4cada2b5f9d35ab1b,basic/model.py,Model,_build_loss,#Model#,219

Before Change

loss_mask = tf.reduce_max(tf.cast(self.q_mask, "float"), 1)
if config.wy:
    if config.na:
        na = tf.reshape(self.na, [-1, 1])
        concat_y = tf.concat(1, [na, tf.reshape(self.wy, [-1, M * JX])])
        losses = tf.nn.softmax_cross_entropy_with_logits(self.concat_logits, tf.cast(concat_y, "float"))
    else:
        losses = tf.nn.softmax_cross_entropy_with_logits(
            self.logits2, tf.cast(tf.reshape(self.wy, [-1, M * JX]), "float"))
    ce_loss = tf.reduce_mean(loss_mask * losses)
    tf.add_to_collection("losses", ce_loss)
else:

After Change

        tf.reshape(self.logits2, [-1, M, JX]), tf.cast(self.wy, "float"))  # [N, M, JX]
    num_pos = tf.reduce_sum(tf.cast(self.wy, "float"))
    num_neg = tf.reduce_sum(tf.cast(self.x_mask, "float")) - num_pos
    damp_ratio = num_pos / num_neg
    dampened_losses = losses * (
        (tf.cast(self.x_mask, "float") - tf.cast(self.wy, "float")) * damp_ratio + tf.cast(self.wy, "float"))
    new_losses = tf.reduce_sum(dampened_losses, [1, 2])
    ce_loss = tf.reduce_mean(loss_mask * new_losses)
    if config.na:
        na = tf.reshape(self.na, [-1, 1])
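
The weighting in the After Change snippet can be illustrated with a small pure-Python sketch. It is not the project's code: the helper names `damp_weights` and `dampened_loss` are hypothetical, the inputs are flattened lists rather than `[N, M, JX]` tensors, and the per-element loss is assumed to be a sigmoid cross-entropy, because the call that produces `losses` is cut off in the snippet. The sketch shows that positive positions keep weight 1, valid negative positions are scaled by `num_pos / num_neg`, and masked (padding) positions get weight 0.

```python
import math


def sigmoid_cross_entropy(logit, label):
    # Assumed per-element loss (the real call is truncated in the snippet).
    # Numerically stable form: max(x, 0) - x * z + log(1 + exp(-|x|)).
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def damp_weights(labels, mask):
    """Return (weights, damp_ratio) for flat 0/1 lists `labels` and `mask`.

    Mirrors: (x_mask - wy) * damp_ratio + wy
    """
    num_pos = float(sum(labels))
    num_neg = float(sum(mask)) - num_pos
    # Like the snippet, this does not guard against num_neg == 0.
    damp_ratio = num_pos / num_neg
    weights = [(m - z) * damp_ratio + z for z, m in zip(labels, mask)]
    return weights, damp_ratio


def dampened_loss(logits, labels, mask):
    """Sum of per-element losses after applying the dampening weights."""
    weights, _ = damp_weights(labels, mask)
    return sum(
        sigmoid_cross_entropy(x, z) * w
        for x, z, w in zip(logits, labels, weights)
    )


if __name__ == "__main__":
    labels = [1, 0, 0, 0, 0]  # one positive position
    mask = [1, 1, 1, 1, 0]    # last position is padding
    weights, ratio = damp_weights(labels, mask)
    print(ratio, weights)
    print(dampened_loss([0.0] * 5, labels, mask))
```

With this weighting the negative positions contribute the same total weight as the positive ones, which appears to be the point of `damp_ratio`: the many negative positions no longer dominate the summed loss.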

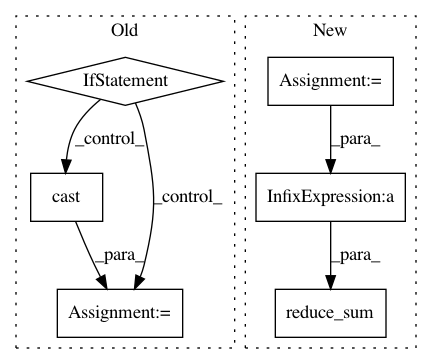

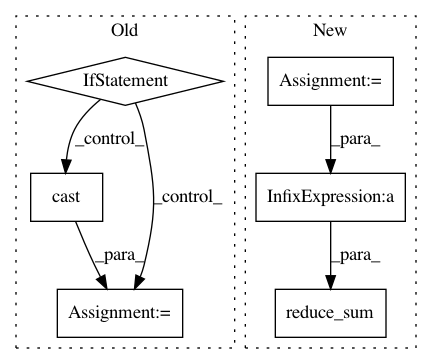

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: wenwei202/iss-rnns

Commit Name: f9b98760f445fc0219cfc9c4cada2b5f9d35ab1b

Time: 2017-01-24

Author: seominjoon@gmail.com

File Name: basic/model.py

Class Name: Model

Method Name: _build_loss

Project Name: GPflow/GPflow

Commit Name: bd1e9c04b48dd5ccca9619d5eaa2595a358bdb08

Time: 2020-01-31

Author: st--@users.noreply.github.com

File Name: gpflow/kernels/misc.py

Class Name: Coregion

Method Name: K

Project Name: NifTK/NiftyNet

Commit Name: 946c74ea83f93967e28c585d0bbcd17afbfebb13

Time: 2018-05-05

Author: wenqi.li@ucl.ac.uk

File Name: niftynet/layer/loss_segmentation.py

Class Name:

Method Name: cross_entropy