a0cf5566d88533c5caa7a490beb6eb0760eee9b4,torch/optim/sgd.py,SGD,step,#SGD#Any#,76

Before Change

```python
if p.grad is None:
    continue
d_p = p.grad
if weight_decay != 0:
    d_p = d_p.add(p, alpha=weight_decay)
if momentum != 0:
    param_state = self.state[p]
    if "momentum_buffer" not in param_state:
        buf = param_state["momentum_buffer"] = torch.clone(d_p).detach()
```

After Change

```python
nesterov)

# update momentum_buffers in state
for p, momentum_buffer in zip(params_with_grad, momentum_buffer_list):
    state = self.state[p]
    state["momentum_buffer"] = momentum_buffer

return loss
```

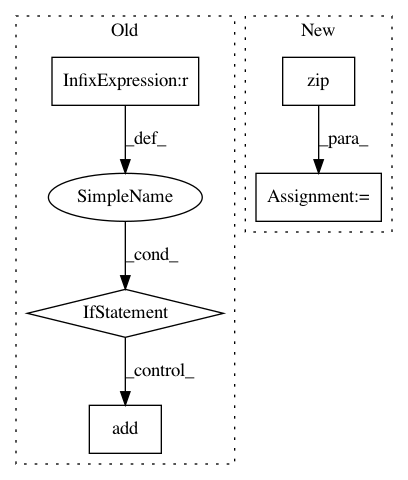
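The after-change code moves the per-parameter arithmetic out of `step`. `step` gathers the parameters that have gradients, their gradients, and their existing momentum buffers into parallel lists. It passes those lists to a functional update and then writes the buffers back into `self.state`. The sketch below reproduces that structure without PyTorch, assuming scalar parameters instead of tensors. `Param`, `sgd`, and this `SGD` class are hypothetical stand-ins, not the `torch.optim` API. The update rule mirrors the before-change logic: weight decay is added to the gradient, and the momentum buffer is initialised from the first gradient. Dampening and Nesterov are handled the way `torch.optim.SGD` applies them.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical stand-in for a tensor: a scalar value plus its gradient.
# eq=False keeps identity-based hashing so a Param can key the state dict.
@dataclass(eq=False)
class Param:
    value: float
    grad: Optional[float] = None


def sgd(params: List[Param], d_p_list: List[float],
        momentum_buffer_list: List[Optional[float]], *, weight_decay: float,
        momentum: float, lr: float, dampening: float, nesterov: bool) -> None:
    """Hypothetical functional update; mutates params and momentum_buffer_list."""
    for i, p in enumerate(params):
        d_p = d_p_list[i]
        if weight_decay != 0:
            d_p = d_p + weight_decay * p.value
        if momentum != 0:
            buf = momentum_buffer_list[i]
            if buf is None:
                buf = d_p  # first step: buffer starts as the gradient itself
            else:
                buf = momentum * buf + (1 - dampening) * d_p
            momentum_buffer_list[i] = buf
            d_p = d_p + momentum * buf if nesterov else buf
        p.value -= lr * d_p


class SGD:
    """Hypothetical optimizer whose step() only does list/state bookkeeping."""

    def __init__(self, params, lr, momentum=0.0, dampening=0.0,
                 weight_decay=0.0, nesterov=False):
        self.params = list(params)
        self.defaults = dict(lr=lr, momentum=momentum, dampening=dampening,
                             weight_decay=weight_decay, nesterov=nesterov)
        self.state: Dict[Param, dict] = {}

    def step(self) -> None:
        params_with_grad, d_p_list, momentum_buffer_list = [], [], []
        for p in self.params:
            if p.grad is None:
                continue  # parameters without gradients are skipped entirely
            params_with_grad.append(p)
            d_p_list.append(p.grad)
            momentum_buffer_list.append(
                self.state.get(p, {}).get("momentum_buffer"))

        sgd(params_with_grad, d_p_list, momentum_buffer_list, **self.defaults)

        # update momentum_buffers in state
        for p, momentum_buffer in zip(params_with_grad, momentum_buffer_list):
            self.state.setdefault(p, {})["momentum_buffer"] = momentum_buffer
```

For example, one step of `SGD([Param(1.0, grad=0.5)], lr=0.1, momentum=0.9)` stores a buffer of 0.5 and moves the value to roughly 0.95. One plausible benefit of the split is that the arithmetic in `sgd` never touches optimizer state. All reads and writes of `self.state` stay in `step`.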

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances:

Project Name: pytorch/pytorch

Commit Name: a0cf5566d88533c5caa7a490beb6eb0760eee9b4

Time: 2021-01-21

Author: wanchaol@users.noreply.github.com

File Name: torch/optim/sgd.py

Class Name: SGD

Method Name: step

Project Name: facebookresearch/ParlAI

Commit Name: 72c304fa7cac16ed19d8bc75a017f17c8073dd2f

Time: 2020-02-13

Author: roller@fb.com

File Name: parlai/core/torch_generator_agent.py

Class Name: TorchGeneratorAgent

Method Name: _compute_fairseq_bleu

Project Name: biotite-dev/biotite

Commit Name: 1675e2873db77528ef1dee6fc49aaccfca9a369b

Time: 2020-11-27

Author: tom.mueller@beachouse.de

File Name: src/biotite/structure/dotbracket.py

Class Name:

Method Name: dot_bracket