1f7f6d5b7481195b0214e835bb5d782db768d71c,parlai/agents/transformer/modules.py,TransformerEncoder,__init__,#TransformerEncoder#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,201

Before Change

embedding_size is None or embedding_size == embedding.weight.shape[1]

), "Embedding dim must match the embedding size."

if embedding is not None:

self.embeddings = embedding

else:

assert False

assert padding_idx is not None

self.embeddings = nn.Embedding(

vocabulary_size, embedding_size, padding_idx=padding_idx

)

nn.init.normal_(self.embeddings.weight, 0, embedding_size ** -0.5)

# create the positional embeddings

self.position_embeddings = nn.Embedding(n_positions, embedding_size)

if not learn_positional_embeddings:

create_position_codes(

n_positions, embedding_size, out=self.position_embeddings.weight
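The fragment above calls `create_position_codes` when `learn_positional_embeddings` is false, but this record does not show the body of that function. The following is a minimal sketch only, assuming the helper fills the table with the common fixed sinusoidal codes (sines in even columns, cosines in odd columns). The name `sinusoidal_position_codes` is hypothetical, and the sketch returns a plain Python list instead of writing into a tensor through `out=`.

```python
import math


def sinusoidal_position_codes(n_positions, embedding_size):
    """Return an n_positions x embedding_size table of fixed position codes.

    Hypothetical stand-in for illustration; not the ParlAI implementation.
    """
    if embedding_size % 2 != 0:
        raise ValueError("embedding_size must be even for paired sin/cos columns")
    table = []
    for pos in range(n_positions):
        row = [0.0] * embedding_size
        for j in range(embedding_size // 2):
            # The wavelength grows geometrically with the column pair index j.
            angle = pos / (10000 ** (2 * j / embedding_size))
            row[2 * j] = math.sin(angle)      # even columns hold sines
            row[2 * j + 1] = math.cos(angle)  # odd columns hold cosines
        table.append(row)
    return table


codes = sinusoidal_position_codes(n_positions=4, embedding_size=6)
```

Under this assumption the table is deterministic, which is consistent with it being filled in once at construction time when the positional embeddings are not learned.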

After Change

padding_idx=None,

n_positions=1024,

):

super().__init__()

self.embedding_size = embedding_size

self.ffn_size = ffn_size

self.n_layers = n_layers

self.n_heads = n_heads

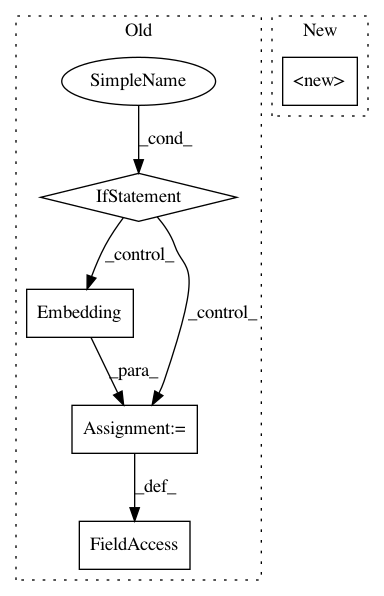

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: facebookresearch/ParlAI

Commit Name: 1f7f6d5b7481195b0214e835bb5d782db768d71c

Time: 2019-02-28

Author: roller@fb.com

File Name: parlai/agents/transformer/modules.py

Class Name: TransformerEncoder

Method Name: __init__

Project Name: stellargraph/stellargraph

Commit Name: 292ac3d96149788275b95842c272ea605e9588a4

Time: 2020-06-04

Author: Huon.Wilson@data61.csiro.au

File Name: stellargraph/layer/knowledge_graph.py

Class Name: RotatE

Method Name: __init__

Project Name: mozilla/TTS

Commit Name: 14a4d1a061872622d992ec39369bd24a78e292cc

Time: 2019-09-12

Author: egolge@mozilla.com

File Name: models/tacotrongst.py

Class Name: TacotronGST

Method Name: __init__