bafeed46fb76fa337a771ebb41d65bb95039565a,fairseq/trainer.py,Trainer,train_step,#Trainer#Any#Any#Any#,261

Before Change

// printed out if another exception happens.

// NB(jerry): added a flush to mitigate this

print(msg, file=sys.stderr)

if torch.cuda.is_available() and hasattr(torch.cuda, "memory_summary"):

    for device_idx in range(torch.cuda.device_count()):

        print(torch.cuda.memory_summary(device=device_idx),

              file=sys.stderr)

sys.stderr.flush()

if raise_oom:

    raise ValueError(msg)

After Change

    self.zero_grad()

    logging_output = None

except RuntimeError as e:

    if "out of memory" in str(e):

        self._log_oom(e)

        print("| ERROR: OOM during optimization, irrecoverable")

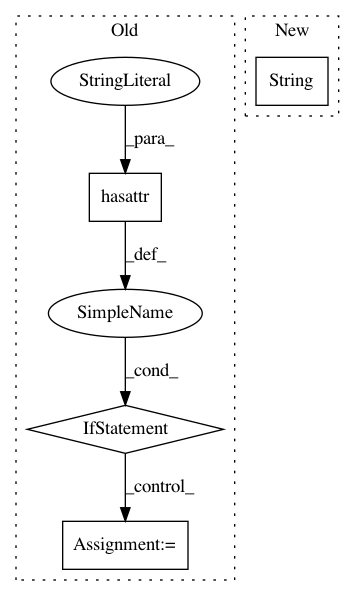

    raise e

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 4

Instances

Project Name: pytorch/fairseq

Commit Name: bafeed46fb76fa337a771ebb41d65bb95039565a

Time: 2019-11-06

Author: noreplyspamblackhole@gmail.com

File Name: fairseq/trainer.py

Class Name: Trainer

Method Name: train_step

Project Name: geekcomputers/Python

Commit Name: 8973e7b7acda4145d88621568f4e0a8751e24baa

Time: 2016-07-18

Author: myownway224533@gmail.com

File Name: osinfo.py

Class Name:

Method Name:

Project Name: ilastik/ilastik

Commit Name: 418a282dffada3ec45b6c2e9625f5708ebe8aa38

Time: 2014-05-20

Author: bergs@janelia.hhmi.org

File Name: lazyflow/operators/ioOperators/opInputDataReader.py

Class Name: OpInputDataReader

Method Name: _attemptOpenAsHdf5
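
Illustrative sketch (not part of the mined record): the After Change snippet for fairseq/trainer.py catches a RuntimeError, logs it when the message contains "out of memory", and then re-raises. The minimal, self-contained version below shows that shape without requiring torch. The names log_oom, run_step, and failing_step, and the wording of the log message, are hypothetical stand-ins and are not taken from the commit.

```python
import sys


def log_oom(exc):
    """Hypothetical stand-in for Trainer._log_oom: report the error on stderr."""
    print("| OOM: {}".format(exc), file=sys.stderr)
    # stderr may be buffered; flush so the message is not lost
    # if another exception follows.
    sys.stderr.flush()


def run_step(step_fn):
    """Run step_fn; log out-of-memory errors, then re-raise any RuntimeError."""
    try:
        return step_fn()
    except RuntimeError as e:
        if "out of memory" in str(e):
            log_oom(e)
            print("| ERROR: OOM during optimization, irrecoverable")
        # Re-raise whether or not the error was an OOM.
        raise e


def failing_step():
    # Simulated failure used only for this sketch.
    raise RuntimeError("CUDA out of memory (simulated)")
```

Calling run_step(failing_step) logs the simulated error and then propagates the same RuntimeError to the caller, while a step that succeeds simply returns its value.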