9a9f0b65c5344878582772337a8e4b94cff9c747,official/nlp/modeling/layers/rezero_transformer.py,ReZeroTransformer,build,#ReZeroTransformer#Any#,89

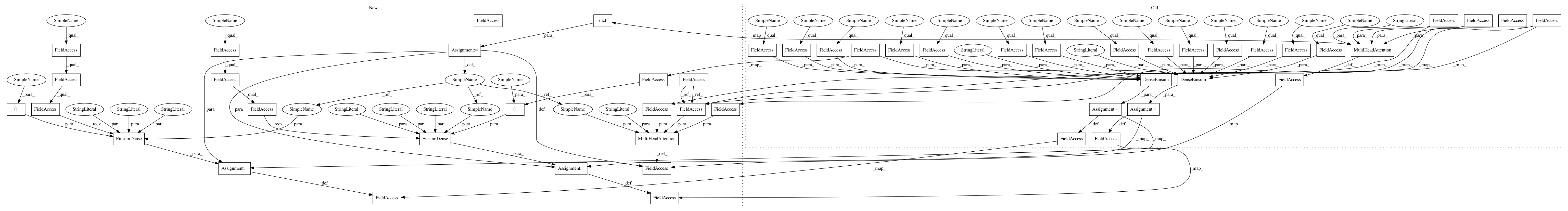

Before Change

"heads (%d)" % (hidden_size, self._num_heads))

self._attention_head_size = int(hidden_size // self._num_heads)

self._attention_layer = attention.MultiHeadAttention(

num_heads=self._num_heads,

key_size=self._attention_head_size,

dropout=self._attention_dropout_rate,

kernel_initializer=self._kernel_initializer,

bias_initializer=self._bias_initializer,

kernel_regularizer=self._kernel_regularizer,

bias_regularizer=self._bias_regularizer,

activity_regularizer=self._activity_regularizer,

kernel_constraint=self._kernel_constraint,

bias_constraint=self._bias_constraint,

name="self_attention")

self._attention_dropout = tf.keras.layers.Dropout(rate=self._dropout_rate)

if self._use_layer_norm:

# Use float32 in layernorm for numeric stability.

# It is probably safe in mixed_float16, but we haven't validated this yet.

self._attention_layer_norm = (

tf.keras.layers.LayerNormalization(

name="self_attention_layer_norm",

axis=-1,

epsilon=1e-12,

dtype=tf.float32))

self._intermediate_dense = dense_einsum.DenseEinsum(

output_shape=self._intermediate_size,

activation=None,

kernel_initializer=self._kernel_initializer,

bias_initializer=self._bias_initializer,

kernel_regularizer=self._kernel_regularizer,

bias_regularizer=self._bias_regularizer,

activity_regularizer=self._activity_regularizer,

kernel_constraint=self._kernel_constraint,

bias_constraint=self._bias_constraint,

name="intermediate")

policy = tf.keras.mixed_precision.experimental.global_policy()

if policy.name == "mixed_bfloat16":

# bfloat16 causes BERT with the LAMB optimizer to not converge

# as well, so we use float32.

# TODO(b/154538392): Investigate this.

policy = tf.float32

self._intermediate_activation_layer = tf.keras.layers.Activation(

self._intermediate_activation, dtype=policy)

self._output_dense = dense_einsum.DenseEinsum(

output_shape=hidden_size,

kernel_initializer=self._kernel_initializer,

bias_initializer=self._bias_initializer,

kernel_regularizer=self._kernel_regularizer,

bias_regularizer=self._bias_regularizer,

activity_regularizer=self._activity_regularizer,

kernel_constraint=self._kernel_constraint,

bias_constraint=self._bias_constraint,

name="output")

self._output_dropout = tf.keras.layers.Dropout(rate=self._dropout_rate)

if self._use_layer_norm:

# Use float32 in layernorm for numeric stability.

self._output_layer_norm = tf.keras.layers.LayerNormalization(

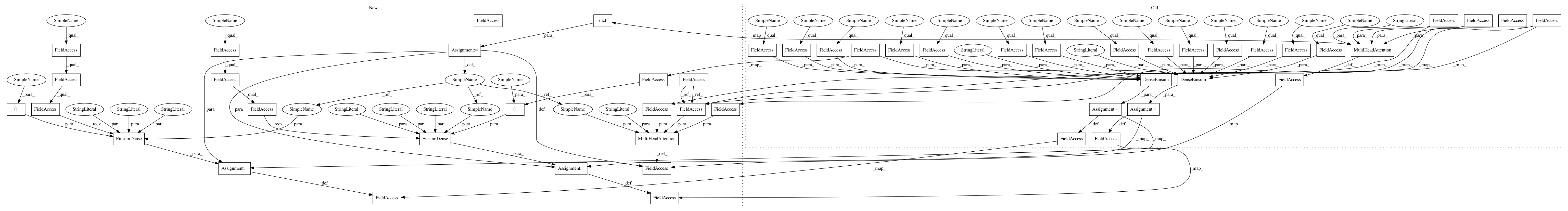

After Change

raise ValueError(

"The input size (%d) is not a multiple of the number of attention "

"heads (%d)" % (hidden_size, self._num_heads))

self._attention_head_size = int(hidden_size // self._num_heads)

common_kwargs = dict(

kernel_initializer=self._kernel_initializer,

bias_initializer=self._bias_initializer,

kernel_regularizer=self._kernel_regularizer,

bias_regularizer=self._bias_regularizer,

activity_regularizer=self._activity_regularizer,

kernel_constraint=self._kernel_constraint,

bias_constraint=self._bias_constraint)

self._attention_layer = attention.MultiHeadAttention(

num_heads=self._num_heads,

key_size=self._attention_head_size,

dropout=self._attention_dropout_rate,

name="self_attention",

**common_kwargs)

self._attention_dropout = tf.keras.layers.Dropout(rate=self._dropout_rate)

if self._use_layer_norm:

# Use float32 in layernorm for numeric stability.

# It is probably safe in mixed_float16, but we haven't validated this yet.

self._attention_layer_norm = (

tf.keras.layers.LayerNormalization(

name="self_attention_layer_norm",

axis=-1,

epsilon=1e-12,

dtype=tf.float32))

self._intermediate_dense = tf.keras.layers.experimental.EinsumDense(

"abc,cd->abd",

output_shape=(None, self._intermediate_size),

bias_axes="d",

name="intermediate",

**common_kwargs)

policy = tf.keras.mixed_precision.experimental.global_policy()

if policy.name == "mixed_bfloat16":

# bfloat16 causes BERT with the LAMB optimizer to not converge

# as well, so we use float32.

# TODO(b/154538392): Investigate this.

policy = tf.float32

self._intermediate_activation_layer = tf.keras.layers.Activation(

self._intermediate_activation, dtype=policy)

self._output_dense = tf.keras.layers.experimental.EinsumDense(

"abc,cd->abd",

output_shape=(None, hidden_size),

bias_axes="d",

name="output",

**common_kwargs)

self._output_dropout = tf.keras.layers.Dropout(rate=self._dropout_rate)

if self._use_layer_norm:

# Use float32 in layernorm for numeric stability.

self._output_layer_norm = tf.keras.layers.LayerNormalization(
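
The change does two things: it gathers the repeated initializer, regularizer, and constraint arguments into one `common_kwargs` dict, and it replaces `dense_einsum.DenseEinsum` with `EinsumDense` using the equation `"abc,cd->abd"` and `bias_axes="d"`. The sketch below is a pure-Python illustration of both ideas, not the Keras implementation. It assumes `a` is the batch axis, `b` the sequence axis, `c` the input feature axis, and `d` the output feature axis. The helper names `einsum_abc_cd_abd` and `make_layer_config`, and the placeholder initializer strings, are hypothetical.

```python
# Pure-Python sketch; all helper names and sample values are hypothetical.

def einsum_abc_cd_abd(x, kernel, bias):
    """Compute "abc,cd->abd" and add a bias along axis "d".

    x is a nested list of shape [a][b][c], kernel has shape [c][d],
    and bias has length d.
    """
    out = []
    for batch in x:                      # axis "a"
        rows = []
        for token in batch:              # axis "b"
            row = []
            for j in range(len(bias)):   # axis "d"
                # Contract over the shared axis "c".
                total = sum(token[k] * kernel[k][j] for k in range(len(token)))
                row.append(total + bias[j])
            rows.append(row)
        out.append(rows)
    return out


def make_layer_config(name, **kwargs):
    """Stand-in for a layer constructor: it only records its arguments."""
    return {"name": name, **kwargs}


# Shared arguments are defined once and unpacked into every constructor call.
common_kwargs = dict(kernel_initializer="glorot_uniform", bias_initializer="zeros")
intermediate = make_layer_config("intermediate", **common_kwargs)
output = make_layer_config("output", **common_kwargs)

x = [[[1.0, 2.0], [3.0, 4.0]]]               # shape (a=1, b=2, c=2)
kernel = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]  # shape (c=2, d=3)
bias = [0.5, 0.5, 0.5]                       # shape (d=3,)
y = einsum_abc_cd_abd(x, kernel, bias)       # shape (a=1, b=2, d=3)
```

Only the last axis changes size, which is why the new calls pass `output_shape=(None, self._intermediate_size)` and `output_shape=(None, hidden_size)`: the `None` entry leaves the sequence axis unspecified, and the batch axis is not listed.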

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 51

Instances

Project Name: tensorflow/models

Commit Name: 9a9f0b65c5344878582772337a8e4b94cff9c747

Time: 2020-07-08

Author: hongkuny@google.com

File Name: official/nlp/modeling/layers/rezero_transformer.py

Class Name: ReZeroTransformer

Method Name: build

Project Name: tensorflow/models

Commit Name: 43f5340f40697be2c27f632889f8bca919670d06

Time: 2020-07-08

Author: hongkuny@google.com

File Name: official/nlp/modeling/layers/transformer.py

Class Name: Transformer

Method Name: build

Project Name: tensorflow/models

Commit Name: 9a9f0b65c5344878582772337a8e4b94cff9c747

Time: 2020-07-08

Author: hongkuny@google.com

File Name: official/nlp/modeling/layers/transformer.py

Class Name: Transformer

Method Name: build

Project Name: tensorflow/models

Commit Name: 43f5340f40697be2c27f632889f8bca919670d06

Time: 2020-07-08

Author: hongkuny@google.com

File Name: official/nlp/modeling/layers/rezero_transformer.py

Class Name: ReZeroTransformer

Method Name: build