29d7182447c4120057c116eb22c06d1d909eb3a1,fairseq/distributed_utils.py,,all_gather_list,#Any#Any#Any#,132

Before Change

enc = pickle.dumps(data)

enc_size = len(enc)

if enc_size + 2 > max_size:

    raise ValueError("encoded data exceeds max_size: {}".format(enc_size + 2))

assert max_size < 255*256

cpu_buffer[0] = enc_size // 255  # this encoding works for max_size < 65k

After Change

enc = pickle.dumps(data)

enc_size = len(enc)

header_size = 4  # size of header that contains the length of the encoded data

size = header_size + enc_size

if size > max_size:

    raise ValueError("encoded data size ({}) exceeds max_size ({})".format(size, max_size))

header = struct.pack(">I", enc_size)

cpu_buffer[:size] = torch.ByteTensor(list(header + enc))

start = rank * max_size

buffer[start:start + size].copy_(cpu_buffer[:size])

all_reduce(buffer, group=group)

try:

    result = []

    for i in range(world_size):

        out_buffer = buffer[i * max_size:(i + 1) * max_size]

        enc_size, = struct.unpack(">I", bytes(out_buffer[:header_size].tolist()))

        if enc_size > 0:

            result.append(pickle.loads(bytes(out_buffer[header_size:header_size + enc_size].tolist())))

    return result

except pickle.UnpicklingError:

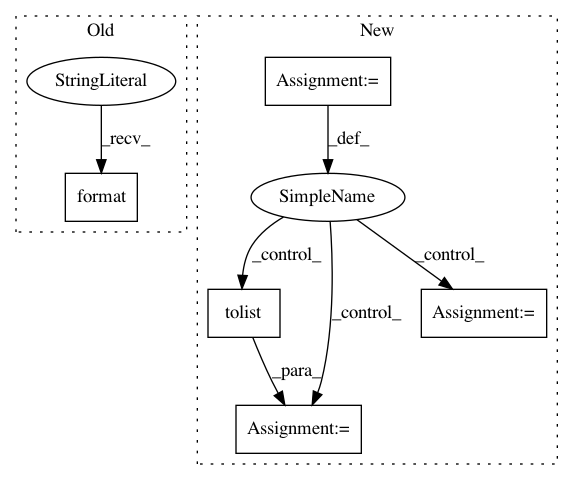

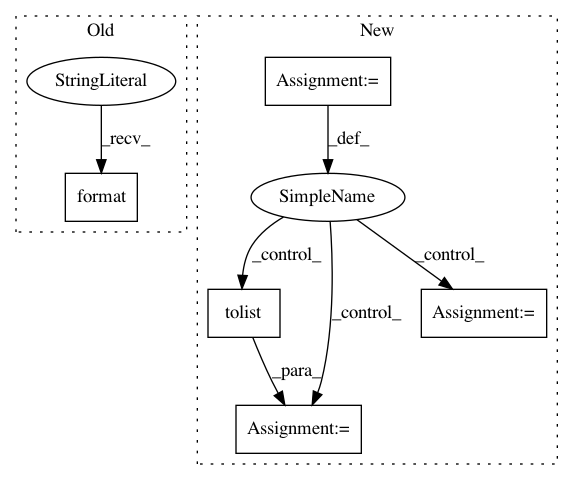
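
The After Change code frames each rank's pickled payload with a 4-byte big-endian length header (`struct.pack(">I", ...)`) and writes it into that rank's fixed-size slot of a shared buffer. The sketch below illustrates only that framing, without `torch` or any real collective: a plain `bytearray` stands in for the buffer after `all_reduce`, and the helper names `write_slot` and `read_slots` are hypothetical, not part of fairseq.

```python
import pickle
import struct

HEADER_SIZE = 4  # bytes in the big-endian unsigned length prefix (">I")


def write_slot(buffer, rank, max_size, data):
    """Pickle `data` and store it, length-prefixed, in this rank's slot.

    Hypothetical helper: mirrors the size check and header packing
    shown in the After Change snippet.
    """
    enc = pickle.dumps(data)
    size = HEADER_SIZE + len(enc)
    if size > max_size:
        raise ValueError(
            "encoded data size ({}) exceeds max_size ({})".format(size, max_size)
        )
    start = rank * max_size
    buffer[start:start + size] = struct.pack(">I", len(enc)) + enc


def read_slots(buffer, world_size, max_size):
    """Decode every non-empty slot; a zero length prefix means no data."""
    result = []
    for i in range(world_size):
        slot = bytes(buffer[i * max_size:(i + 1) * max_size])
        (enc_size,) = struct.unpack(">I", slot[:HEADER_SIZE])
        if enc_size > 0:
            result.append(pickle.loads(slot[HEADER_SIZE:HEADER_SIZE + enc_size]))
    return result


world_size, max_size = 3, 256
# Assumption: a zero-filled bytearray plays the role of the all-reduced buffer.
shared = bytearray(world_size * max_size)
write_slot(shared, 0, max_size, {"loss": 1.5})
write_slot(shared, 2, max_size, [1, 2, 3])  # rank 1 contributes nothing
gathered = read_slots(shared, world_size, max_size)
```

Because the header is a 32-bit unsigned integer, the payload length is no longer capped by the two-byte scheme asserted in the Before Change code (`max_size < 255*256`); the practical limit becomes `max_size` itself.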

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: pytorch/fairseq

Commit Name: 29d7182447c4120057c116eb22c06d1d909eb3a1

Time: 2019-12-13

Author: yunwang@fb.com

File Name: fairseq/distributed_utils.py

Class Name:

Method Name: all_gather_list

Project Name: sony/nnabla

Commit Name: edd86f6318b411a42a1f287fa6359bbfd12fa71c

Time: 2019-06-24

Author: stephen.tiedemann@sony.com

File Name: python/src/nnabla/functions.py

Class Name:

Method Name: scatter_nd