641a28fbf0daff0ad1ad0f43d2c4b545cb6f9656,examples/reinforcement_learning/tutorial_cartpole_ac.py,Critic,learn,#Critic#Any#Any#Any#,142

Before Change

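```python
def learn(self, s, r, s_):
    # Evaluate V(s') with the current critic network
    v_ = self.sess.run(self.v, {self.s: [s_]})
    # Feed V(s') back in as a fixed value, then compute the TD error and run one training step
    td_error, _ = self.sess.run([self.td_error, self.train_op], {self.s: [s], self.v_: v_, self.r: r})
    return td_error
```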
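The session-based `learn` returns whatever the graph node `self.td_error` evaluates to. That node is defined elsewhere and is not part of this excerpt. Assuming it implements the same one-step TD error as the After version, delta = r + LAMBDA * V(s') - V(s), the following plain-Python sketch computes the returned quantity and works through one value. The function name `td_error` and the value 0.9 for the discount (standing in for `LAMBDA`) are assumptions for illustration.

```python
def td_error(r: float, v_next: float, v: float, gamma: float = 0.9) -> float:
    """One-step TD error: delta = r + gamma * V(s') - V(s).

    gamma stands in for LAMBDA in the excerpt; 0.9 is an assumed value.
    """
    return r + gamma * v_next - v


# Reward 1, V(s') = 2.0, V(s) = 1.5: delta = 1 + 0.9 * 2.0 - 1.5 = 1.3 (up to float rounding)
delta = td_error(1.0, 2.0, 1.5)
print(round(delta, 6))  # → 1.3
```

A positive delta means the transition turned out better than the critic predicted.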

After Change

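```python
# Excerpt from Critic.learn(self, s, r, s_): v_ (the estimate of V(s')) must already be
# defined; its computation is not part of this excerpt.
with tf.GradientTape() as tape:
    v = self.model([s]).outputs
    # TD_error = r + LAMBDA * V(s') - V(s)
    td_error = r + LAMBDA * v_ - v
    loss = tf.square(td_error)
# Differentiate the squared TD error w.r.t. the critic's weights and take one optimizer step
grad = tape.gradient(loss, self.model.weights)
self.optimizer.apply_gradients(zip(grad, self.model.weights))

return td_error

# Module-level setup (a separate changed line in the same file)
actor = Actor(n_features=N_F, n_actions=N_A, lr=LR_A)
```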
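The GradientTape version hands differentiation of `tf.square(td_error)` to TensorFlow. To make that update concrete without TensorFlow, here is a minimal sketch under stated assumptions: a hypothetical linear critic V(s) = w . s (`LinearCritic` is not from the tutorial), plain gradient descent instead of whatever `self.optimizer` is, and gamma = 0.9 standing in for `LAMBDA`. As in the Before version, where `v_` is fed in through `feed_dict`, V(s') is treated as a constant target, so only V(s) contributes to the gradient.

```python
from typing import List


class LinearCritic:
    """Hypothetical linear value function V(s) = w . s.

    Trained like the tape-based learn(): minimize td_error ** 2 with V(s') held fixed.
    """

    def __init__(self, n_features: int, lr: float = 0.01, gamma: float = 0.9):
        self.w: List[float] = [0.0] * n_features
        self.lr = lr
        self.gamma = gamma  # stands in for LAMBDA (assumed value)

    def value(self, s: List[float]) -> float:
        return sum(wi * si for wi, si in zip(self.w, s))

    def learn(self, s: List[float], r: float, s_: List[float]) -> float:
        v_ = self.value(s_)  # target side: treated as a constant, no gradient flows through it
        v = self.value(s)
        td_error = r + self.gamma * v_ - v
        # loss = td_error ** 2, so d(loss)/dw = -2 * td_error * s
        grad = [-2.0 * td_error * si for si in s]
        # Plain gradient-descent step (an assumption; the tutorial uses self.optimizer)
        self.w = [wi - self.lr * gi for wi, gi in zip(self.w, grad)]
        return td_error


critic = LinearCritic(n_features=2, lr=0.1)
s, s_next = [1.0, 0.0], [0.0, 1.0]
for _ in range(100):
    delta = critic.learn(s, 1.0, s_next)
print(abs(delta) < 1e-3)  # → True
```

Because `s_next` only activates the second feature, which `s` never updates, V(s') stays 0 and the target stays at r = 1; each step multiplies the TD error by 1 - 2 * lr = 0.8, so it decays geometrically toward zero.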

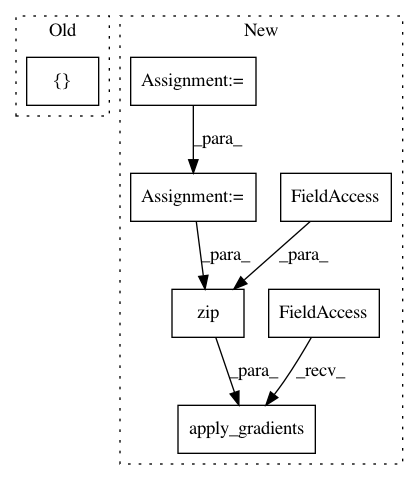

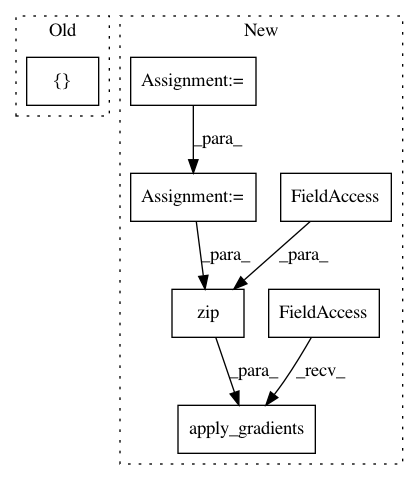

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: tensorlayer/tensorlayer

Commit Name: 641a28fbf0daff0ad1ad0f43d2c4b545cb6f9656

Time: 2019-02-16

Author: dhsig552@163.com

File Name: examples/reinforcement_learning/tutorial_cartpole_ac.py

Class Name: Critic

Method Name: learn

Project Name: arnomoonens/yarll

Commit Name: 41024c61c0737b1beaea8fff8e00a947d6b6ee9b

Time: 2017-02-09

Author: x-006@hotmail.com

File Name: knowledge_transfer.py

Class Name: KnowledgeTransferLearner

Method Name: build_networks

Project Name: tensorlayer/tensorlayer

Commit Name: 641a28fbf0daff0ad1ad0f43d2c4b545cb6f9656

Time: 2019-02-16

Author: dhsig552@163.com

File Name: examples/reinforcement_learning/tutorial_cartpole_ac.py

Class Name: Actor

Method Name: learn