1207689fa1e6ff2d321ccc182be13825b4e2575e,pytext/models/embeddings/word_embedding.py,WordEmbedding,from_config,#Any#Any#Any#Any#Any#,41

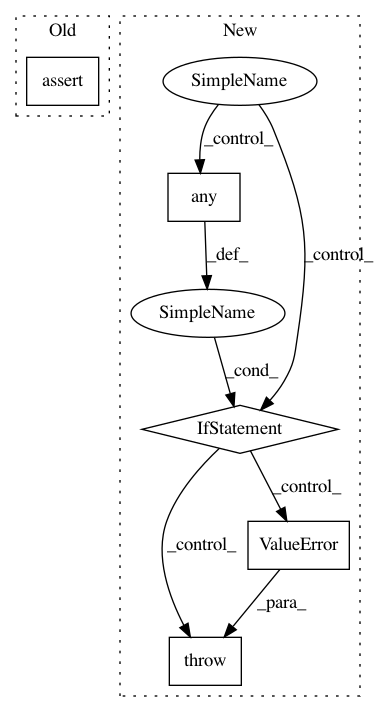

Before Change

if config.vocab_from_pretrained_embeddings:

// pretrained embeddings will get a freq count of 1

assert config.min_freq == 1, (

"If `vocab_from_pretrained_embeddings` is set, the vocab"s "

"`min_freq` must be 1"

)

if not config.vocab_from_train_data: // Reset token counter.

tensorizer.vocab_builder._counter = collections.Counter()

pretrained_vocab = pretrained_embedding.embed_vocab

if config.vocab_size:

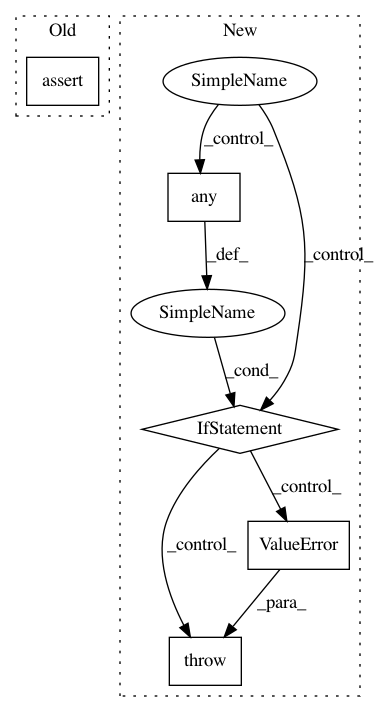

After Change

// We don"t need to load pretrained embeddings if we know the

// embedding weights are going to be loaded from a snapshot.

if config.pretrained_embeddings_path and not init_from_saved_state:

if not any(

vocab_file.filepath == config.pretrained_embeddings_path

for vocab_file in tensorizer.vocab_config.vocab_files

):

raise ValueError(

f"Tensorizer"s vocab files should include pretrained "

f"embeddings file {config.pretrained_embeddings_path}."

)

pretrained_embedding = PretrainedEmbedding(

config.pretrained_embeddings_path, // doesn"t support fbpkg

lowercase_tokens=config.lowercase_tokens,

)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: facebookresearch/pytext

Commit Name: 1207689fa1e6ff2d321ccc182be13825b4e2575e

Time: 2019-07-12

Author: mikaell@fb.com

File Name: pytext/models/embeddings/word_embedding.py

Class Name: WordEmbedding

Method Name: from_config

Project Name: scikit-image/scikit-image

Commit Name: 6c47bd49ddb90e5196333224a4e6b211fb24d089

Time: 2016-06-16

Author: thomas.walter@mines-paristech.fr

File Name: skimage/feature/texture.py

Class Name:

Method Name: greycomatrix

Project Name: bethgelab/foolbox

Commit Name: bf635f90dae66e4ddd3e1f342dca925b3c99faf7

Time: 2020-02-11

Author: git@jonasrauber.de

File Name: foolbox/attacks/binarization.py

Class Name: BinarizationRefinementAttack

Method Name: __call__