
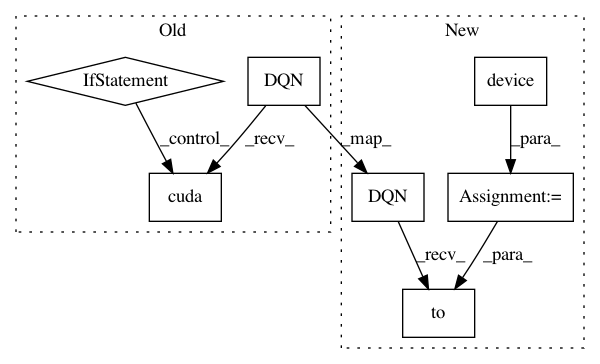

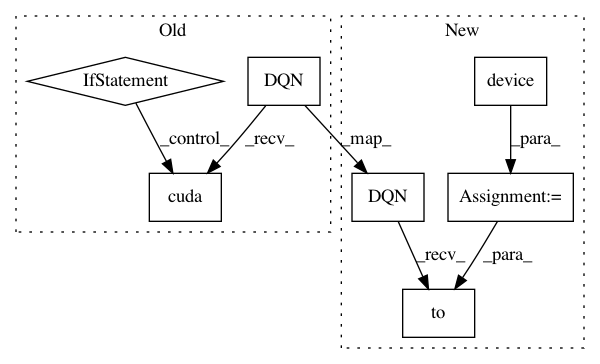

Before Change

env = ptan.common.wrappers.wrap_dqn(env)

writer = SummaryWriter(comment="-" + params["run_name"] + "-%d-step" % args.n)

net = dqn_model.DQN(env.observation_space.shape, env.action_space.n)

if args.cuda:
    net.cuda()

tgt_net = ptan.agent.TargetNet(net)

selector = ptan.actions.EpsilonGreedyActionSelector(epsilon=params["epsilon_start"])

epsilon_tracker = common.EpsilonTracker(selector, params)

agent = ptan.agent.DQNAgent(net, selector, cuda=args.cuda)

After Change

parser.add_argument("--cuda", default=False, action="store_true", help="Enable cuda")

parser.add_argument("-n", default=REWARD_STEPS_DEFAULT, type=int, help="Count of steps to unroll Bellman")

args = parser.parse_args()

device = torch.device("cuda" if args.cuda else "cpu")

env = gym.make(params["env_name"])

env = ptan.common.wrappers.wrap_dqn(env)

writer = SummaryWriter(comment="-" + params["run_name"] + "-%d-step" % args.n)

net = dqn_model.DQN(env.observation_space.shape, env.action_space.n).to(device)

tgt_net = ptan.agent.TargetNet(net)

selector = ptan.actions.EpsilonGreedyActionSelector(epsilon=params["epsilon_start"])

epsilon_tracker = common.EpsilonTracker(selector, params)
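The After Change listing replaces the `if args.cuda: net.cuda()` branch with a single device object derived from the `--cuda` flag. The sketch below isolates only the flag-to-device-name step, using the standard library so it runs without PyTorch. The helpers `build_parser` and `device_name` are hypothetical names introduced for illustration, and the value of `REWARD_STEPS_DEFAULT` is an assumed placeholder, since the excerpt references the constant without showing its definition.

```python
import argparse

# Assumed placeholder: the excerpt uses REWARD_STEPS_DEFAULT but does not show its value.
REWARD_STEPS_DEFAULT = 2


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with the two options shown in the After Change listing."""
    parser = argparse.ArgumentParser()
    # store_true: the flag is False unless --cuda is passed on the command line.
    parser.add_argument("--cuda", default=False, action="store_true",
                        help="Enable cuda")
    parser.add_argument("-n", default=REWARD_STEPS_DEFAULT, type=int,
                        help="Count of steps to unroll Bellman")
    return parser


def device_name(args: argparse.Namespace) -> str:
    """Return the string that the listing passes to torch.device(...)."""
    return "cuda" if args.cuda else "cpu"


# Parse an explicit argument list instead of sys.argv so the sketch is reproducible.
args = build_parser().parse_args(["--cuda", "-n", "3"])
print(device_name(args), args.n)
```

In the listing itself, the resulting string is wrapped in `torch.device(...)` and the network is moved with `.to(device)`, so the same line of model construction works whether or not the flag is set.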

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: db09dc1fb503ab8f7de69fa23e8d38742bda8e90

Time: 2018-04-27

Author: max.lapan@gmail.com

File Name: ch07/02_dqn_n_steps.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: db09dc1fb503ab8f7de69fa23e8d38742bda8e90

Time: 2018-04-27

Author: max.lapan@gmail.com

File Name: ch07/01_dqn_basic.py

Class Name:

Method Name:

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: db09dc1fb503ab8f7de69fa23e8d38742bda8e90

Time: 2018-04-27

Author: max.lapan@gmail.com

File Name: ch07/03_dqn_double.py

Class Name:

Method Name: