94a995defe223eed0898f25d2332ba6178a92abe,ssd/engine/trainer.py,,do_train,#Any#Any#Any#Any#Any#Any#Any#,46

Before Change

if iteration % args.log_step == 0:
    eta_seconds = int((trained_time / iteration) * (max_iter - iteration))
    logger.info(
        "Iter: {:06d}, Lr: {:.5f}, Cost: {:.2f}s, Eta: {}, ".format(iteration, optimizer.param_groups[0]["lr"],
                                                                     time.time() - tic,
                                                                     str(datetime.timedelta(seconds=eta_seconds))) +
        "Loss: {:.3f}, ".format(losses_reduced.item()) +
        "Regression Loss {:.3f}, ".format(loss_dict_reduced["regression_loss"].item()) +
        "Classification Loss: {:.3f}".format(loss_dict_reduced["classification_loss"].item()))
    if summary_writer:
        global_step = iteration

After Change

            time.time() - tic, str(datetime.timedelta(seconds=eta_seconds))),
        "total_loss: {:.3f}".format(losses_reduced.item())
    ]
    for loss_name, loss_item in loss_dict_reduced.items():
        log_str.append("{}: {:.3f}".format(loss_name, loss_item.item()))
    log_str = ", ".join(log_str)
    logger.info(log_str)
    if summary_writer:
        global_step = iteration
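
The change replaces hard-coded loss keys with a loop over whatever entries the loss dictionary contains. A minimal standalone sketch of that idea follows. The helper name `format_log_line` and its parameters are hypothetical and do not appear in the commit, and plain floats stand in for the tensors on which the original calls `.item()`.

```python
import datetime


def format_log_line(iteration, lr, cost_seconds, eta_seconds, total_loss, loss_dict):
    """Build one log line from a fixed prefix plus every entry in loss_dict.

    Hypothetical helper for illustration; values are plain floats here,
    whereas the original code reads them from tensors via .item().
    """
    parts = [
        "Iter: {:06d}, Lr: {:.5f}, Cost: {:.2f}s, Eta: {}".format(
            iteration, lr, cost_seconds,
            str(datetime.timedelta(seconds=eta_seconds))),
        "total_loss: {:.3f}".format(total_loss),
    ]
    # Iterate over the dictionary instead of naming each loss key, so a
    # newly added loss term is logged without touching this function.
    for loss_name, loss_value in loss_dict.items():
        parts.append("{}: {:.3f}".format(loss_name, loss_value))
    return ", ".join(parts)


line = format_log_line(
    iteration=120,
    lr=0.001,
    cost_seconds=1.5,
    eta_seconds=3725,
    total_loss=2.5,
    loss_dict={"regression_loss": 1.0, "classification_loss": 1.5},
)
print(line)
```

Because the loop reads keys from the dictionary, adding a third loss term only requires a new dictionary entry, not an edit to the logging call.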

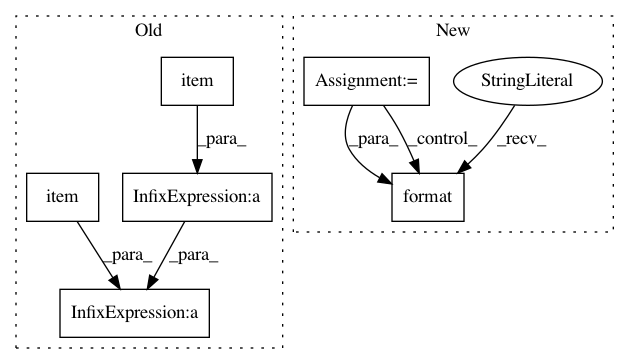

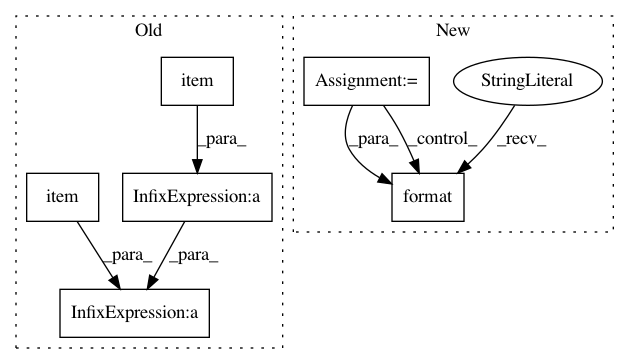

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: lufficc/SSD

Commit Name: 94a995defe223eed0898f25d2332ba6178a92abe

Time: 2018-12-19

Author: luffy.lcc@gmail.com

File Name: ssd/engine/trainer.py

Class Name:

Method Name: do_train

Project Name: pytorch/fairseq

Commit Name: 29d7182447c4120057c116eb22c06d1d909eb3a1

Time: 2019-12-13

Author: yunwang@fb.com

File Name: fairseq/distributed_utils.py

Class Name:

Method Name: all_gather_list