ccbd460791dc0229302fcfda37ab7c4f3ce9e08a,Reinforcement_learning_TUT/10_A3C/A3C_discrete_action.py,,,#,166

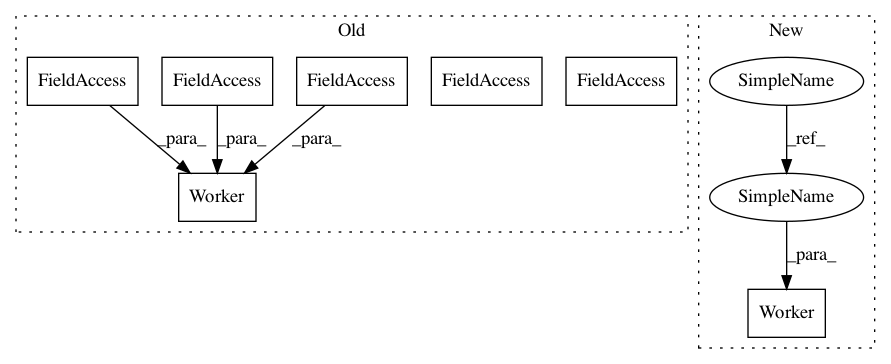

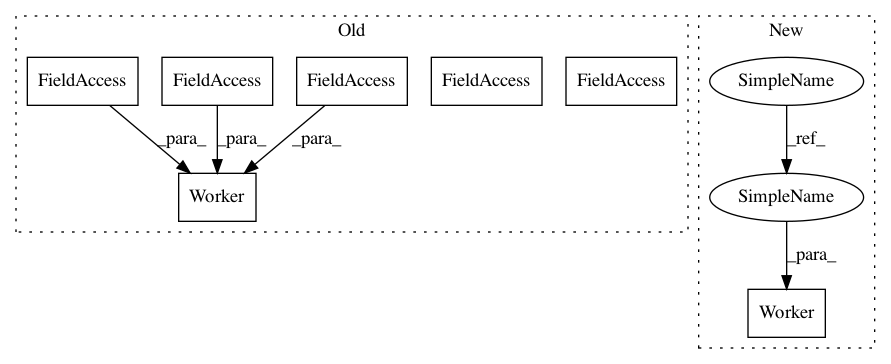

Before Change

```python
for i in range(N_WORKERS):
    i_name = "W_%i" % i  # worker name
    workers.append(
        Worker(
            gym.make(GAME).unwrapped, i_name, N_S, N_A, sess,
            OPT_A, OPT_C, globalAC.a_params, globalAC.c_params
        ))

coord = tf.train.Coordinator()
sess.run(tf.global_variables_initializer())
```
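
In the "before" version, each `Worker` receives every dependency through its constructor: the environment, its name, the state and action sizes, the session, both optimizers, and the global actor and critic parameter lists. The sketch below mirrors that explicit-injection style in plain Python so it runs without Gym or TensorFlow. `GlobalNet`, `build_workers`, and the placeholder values for the environment, session, and optimizers are hypothetical stand-ins, not the tutorial's code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GlobalNet:
    # Hypothetical stand-in for the global ACNet: only its parameter lists matter here.
    a_params: List[float] = field(default_factory=lambda: [0.0, 0.0])
    c_params: List[float] = field(default_factory=lambda: [0.0])


class Worker:
    """Explicit-injection style: every dependency is a constructor argument."""

    def __init__(self, env, name, n_s, n_a, sess, opt_a, opt_c, g_a_params, g_c_params):
        self.env = env
        self.name = name
        self.n_s, self.n_a = n_s, n_a
        self.sess = sess
        self.opt_a, self.opt_c = opt_a, opt_c
        # Every worker holds references to the *same* global lists, so updates are shared.
        self.g_a_params = g_a_params
        self.g_c_params = g_c_params


def build_workers(n_workers, n_s, n_a):
    global_net = GlobalNet()
    workers = []
    for i in range(n_workers):
        workers.append(Worker(
            env=object(),                        # placeholder for gym.make(GAME).unwrapped
            name="W_%i" % i,
            n_s=n_s, n_a=n_a,
            sess=None,                           # placeholder for a tf.Session
            opt_a="RMSPropA", opt_c="RMSPropC",  # placeholders for the two optimizers
            g_a_params=global_net.a_params,
            g_c_params=global_net.c_params,
        ))
    return global_net, workers
```

Calling `build_workers(4, 3, 2)` yields four workers named `W_0` to `W_3` that all point at the same global parameter lists. The cost of this style is the nine-argument constructor that every call site has to repeat.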

After Change

```python
COUNT_GLOBAL_EP = tf.assign(GLOBAL_EP, tf.add(GLOBAL_EP, tf.constant(1), name="step_ep"))
OPT_A = tf.train.RMSPropOptimizer(LR_A, name="RMSPropA")
OPT_C = tf.train.RMSPropOptimizer(LR_C, name="RMSPropC")
GLOBAL_AC = ACNet(GLOBAL_NET_SCOPE)  # we only need its params
workers = []
# Create the workers
for i in range(N_WORKERS):
    i_name = "W_%i" % i  # worker name
    workers.append(Worker(i_name, GLOBAL_AC))

COORD = tf.train.Coordinator()
SESS.run(tf.global_variables_initializer())
```

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: MorvanZhou/tutorials

Commit Name: ccbd460791dc0229302fcfda37ab7c4f3ce9e08a

Time: 2017-04-01

Author: morvanzhou@hotmail.com

File Name: Reinforcement_learning_TUT/10_A3C/A3C_discrete_action.py

Class Name:

Method Name:

Project Name: MorvanZhou/tutorials

Commit Name: 3ddf6764aace135b84b70f8e2c0afdcfdada2bca

Time: 2017-03-31

Author: morvanzhou@gmail.com

File Name: Reinforcement_learning_TUT/experiments/Solve_BipedalWalker/A3C.py

Class Name:

Method Name:

Project Name: MorvanZhou/tutorials

Commit Name: 4ee797b39a3e74400fb4abe4f5403bf7778bed20

Time: 2017-03-30

Author: morvanzhou@gmail.com

File Name: Reinforcement_learning_TUT/10_A3C/A3C_continuous_action.py

Class Name:

Method Name: