2d1945f4a3c39b72f99e9af87b5eb0e3cf015d2e,workers/data_refinery_workers/processors/create_compendia.py,,_create_result_objects,#Any#,387

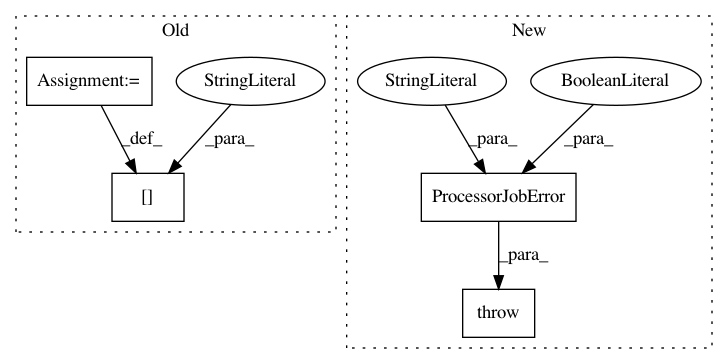

Before Change

archive_computed_file.sync_to_s3(S3_BUCKET_NAME, key)

job_context["result"] = result

job_context["computed_files"] = [archive_computed_file]

job_context["success"] = True

log_state("end create result object", job_context["job"].id, result_start)

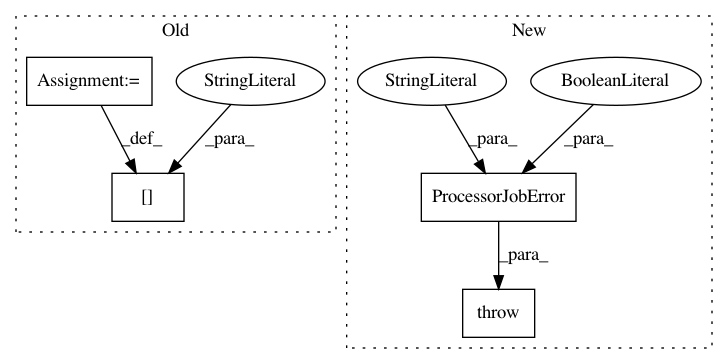

After Change

if uploaded_to_s3:

    archive_computed_file.delete_local_file()

else:

    raise utils.ProcessorJobError(

        "Failed to upload compendia to S3",

        success=False,

        computed_file_id=archive_computed_file.id,

    )

job_context["result"] = result

job_context["success"] = True
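
The change above replaces an unchecked upload call with a check on the upload result. The snippet does not show where `uploaded_to_s3` is assigned; the sketch below assumes it is the boolean return value of `sync_to_s3`. `ProcessorJobError` and `FakeComputedFile` here are hypothetical stand-ins written for illustration, not the project's real `utils.ProcessorJobError` or computed-file model, and `finish_upload` and `make_temp_file` are helper names invented for this example.

```python
import os
import tempfile


class ProcessorJobError(Exception):
    """Hypothetical stand-in for utils.ProcessorJobError."""

    def __init__(self, message, success=False, **context):
        super().__init__(message)
        self.success = success
        self.context = context


class FakeComputedFile:
    """Hypothetical stand-in for the computed-file object in the snippet."""

    def __init__(self, path, upload_ok, file_id=1):
        self.path = path
        self.upload_ok = upload_ok
        self.id = file_id

    def sync_to_s3(self, bucket, key):
        # Assumption: the real method reports success as a boolean.
        return self.upload_ok

    def delete_local_file(self):
        if os.path.exists(self.path):
            os.remove(self.path)


def finish_upload(computed_file, bucket, key):
    """Delete the local copy only after a confirmed upload; otherwise fail loudly."""
    uploaded_to_s3 = computed_file.sync_to_s3(bucket, key)
    if uploaded_to_s3:
        computed_file.delete_local_file()
    else:
        raise ProcessorJobError(
            "Failed to upload compendia to S3",
            success=False,
            computed_file_id=computed_file.id,
        )
    return True


def make_temp_file():
    """Create an empty temporary file and return its path."""
    fd, path = tempfile.mkstemp()
    os.close(fd)
    return path
```

In this sketch a failed upload leaves the local file in place and raises before any success flag could be set, which mirrors the ordering in the After Change snippet.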

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances

Project Name: AlexsLemonade/refinebio

Commit Name: 2d1945f4a3c39b72f99e9af87b5eb0e3cf015d2e

Time: 2020-01-16

Author: arielsvn@gmail.com

File Name: workers/data_refinery_workers/processors/create_compendia.py

Class Name:

Method Name: _create_result_objects

Project Name: AlexsLemonade/refinebio

Commit Name: 6a1a1b23b269107121f3d1a97ec78a344fe35bb3

Time: 2019-12-23

Author: arielsvn@gmail.com

File Name: workers/data_refinery_workers/processors/smasher.py

Class Name:

Method Name: _notify

Project Name: AlexsLemonade/refinebio

Commit Name: c0833c03181a2a9a2ce50bf43281ba698f61887c

Time: 2019-12-24

Author: arielsvn@gmail.com

File Name: workers/data_refinery_workers/processors/smasher.py

Class Name:

Method Name: _smash_all