f5f63fb9c06cd626ff64a31b976e148c92ff99d1,OpenNMT/onmt/Optim.py,Optim,step,#Optim#,30

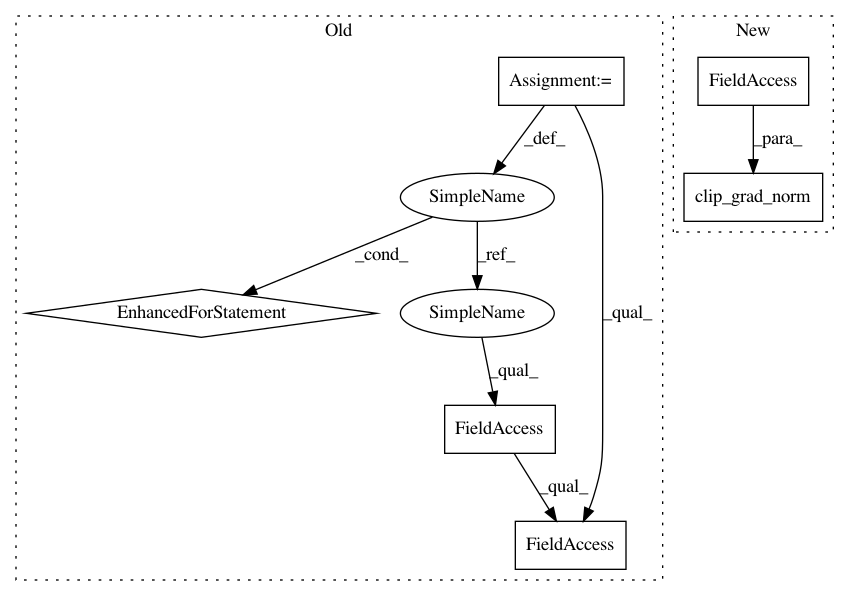

Before Change

        grad_norm = math.sqrt(grad_norm)
        shrinkage = self.max_grad_norm / grad_norm
        for param in self.params:
            if shrinkage < 1:
                param.grad.data.mul_(shrinkage)
        self.optimizer.step()
        return grad_norm

    # decay learning rate if val perf does not improve or we hit the start_decay_at limit

After Change

    def step(self):
        # Compute gradients norm.
        if self.max_grad_norm:
            clip_grad_norm(self.params, self.max_grad_norm)
        self.optimizer.step()

    # decay learning rate if val perf does not improve or we hit the start_decay_at limit
    def updateLearningRate(self, ppl, epoch):

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6
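
The change swaps hand-written norm scaling for a single call to `clip_grad_norm`. To illustrate what a call of that kind does, here is a minimal pure-Python sketch of global-norm clipping. The name `clip_global_norm` and the list-of-lists gradient layout are hypothetical, chosen only for this example. It is not the library implementation, and it assumes the L2 norm suggested by the `math.sqrt` call in the Before Change code.

```python
import math


def clip_global_norm(grads, max_norm):
    """Scale `grads` in place so their global L2 norm is at most `max_norm`.

    `grads` is a list of lists of floats, a stand-in for per-parameter
    gradient tensors (an assumption made for this sketch).
    Returns the norm measured before any scaling.
    """
    # Global norm: square root of the sum of squares over every gradient entry.
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    if total_norm > 0:
        shrinkage = max_norm / total_norm
        # Only shrink; gradients already within the limit are left untouched.
        if shrinkage < 1:
            for grad in grads:
                for i in range(len(grad)):
                    grad[i] *= shrinkage
    return total_norm


# Two "parameters" whose combined gradient norm is sqrt(3^2 + 4^2).
grads = [[3.0, 0.0], [0.0, 4.0]]
norm_before = clip_global_norm(grads, max_norm=1.0)
norm_after = math.sqrt(sum(g * g for grad in grads for g in grad))
```

The Before Change code tested `shrinkage < 1` once per parameter inside the loop. The sketch tests it once before scaling, which gives the same result because `shrinkage` does not change between iterations. Note also that the After Change version of `step` no longer returns the gradient norm.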

Instances

Project Name: pytorch/examples

Commit Name: f5f63fb9c06cd626ff64a31b976e148c92ff99d1

Time: 2017-03-14

Author: bryan.mccann.is@gmail.com

File Name: OpenNMT/onmt/Optim.py

Class Name: Optim

Method Name: step

Project Name: OpenNMT/OpenNMT-py

Commit Name: 57dac10ec6b131842667bf58746168d9e99de9b3

Time: 2017-03-14

Author: bryan.mccann.is@gmail.com

File Name: onmt/Optim.py

Class Name: Optim

Method Name: step

Project Name: kengz/SLM-Lab

Commit Name: ff5da2b2bfd03234519927b966923e8baecf6616

Time: 2018-01-02

Author: lgraesser@users.noreply.github.com

File Name: slm_lab/agent/net/feedforward.py

Class Name: MLPNet

Method Name: training_step