3f9700868da4d09988b7dfbd7d5b3a9d5ea7d401,fairseq/multiprocessing_trainer.py,MultiprocessingTrainer,train_step,#MultiprocessingTrainer#Any#,127

Before Change

```python
grad_denom = self.criterion.grad_denom(samples)

# forward pass, backward pass and gradient step
losses = [
    self.call_async(rank, "_async_train_step", grad_denom=grad_denom)
    for rank in range(self.num_replicas)
]

# aggregate losses and gradient norms
loss_dicts = Future.gen_list(losses)
loss_dict = self.criterion.aggregate(loss_dicts)
loss_dict["gnorm"] = loss_dicts[0]["gnorm"]
return loss_dict
```
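The pre-change flow can be approximated with a minimal single-process sketch. It assumes that threads from `concurrent.futures` stand in for fairseq's per-GPU worker processes and that `call_async` plus `Future.gen_list` behave like "submit one job per rank, then collect results in rank order". `FakeReplica`, `train_step_before`, the sum-of-sizes `grad_denom` rule, and the sum-of-losses aggregation are all hypothetical. The point it illustrates is that the gradient denominator is computed once from the whole batch before any replica runs, and each replica then performs forward, backward, and the optimizer step inside a single call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


class FakeReplica:
    """Hypothetical stand-in for one worker; not fairseq's API."""

    def __init__(self, rank: int) -> None:
        self.rank = rank

    def train_step(self, sample: List[int], grad_denom: float) -> Dict[str, float]:
        # Pretend the loss is the sum of the sample's values, normalized by grad_denom.
        loss = sum(sample) / grad_denom
        # Fixed placeholder gradient norm.
        return {"loss": loss, "gnorm": 1.0}


def grad_denom(samples: List[List[int]]) -> float:
    # Assumed rule: total number of items across all replicas' samples.
    return float(sum(len(s) for s in samples))


def train_step_before(replicas: List[FakeReplica], samples: List[List[int]]) -> Dict[str, float]:
    # Denominator is computed up front, before any replica does work.
    denom = grad_denom(samples)
    with ThreadPoolExecutor(max_workers=len(replicas)) as pool:
        # One combined forward/backward/step job per replica.
        futures = [pool.submit(r.train_step, samples[r.rank], denom) for r in replicas]
        # Collect results in rank order (analogous to Future.gen_list).
        loss_dicts = [f.result() for f in futures]
    # Assumed aggregation: sum the losses; take the gradient norm from rank 0.
    loss_dict = {"loss": sum(d["loss"] for d in loss_dicts)}
    loss_dict["gnorm"] = loss_dicts[0]["gnorm"]
    return loss_dict
```

With two replicas holding `[1, 2, 3]` and `[4, 5]`, the denominator is 5 and the aggregated loss is 6/5 + 9/5 = 3.0.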

After Change

```python
self._scatter_samples(samples, replace_empty_samples=replace_empty_samples)

# forward pass
sample_sizes, logging_outputs = Future.gen_tuple_list([
    self.call_async(rank, "_async_forward")
    for rank in range(self.num_replicas)
])

# backward pass, all-reduce gradients and take an optimization step
grad_denom = self.criterion.__class__.grad_denom(sample_sizes)
grad_norms = Future.gen_list([
```

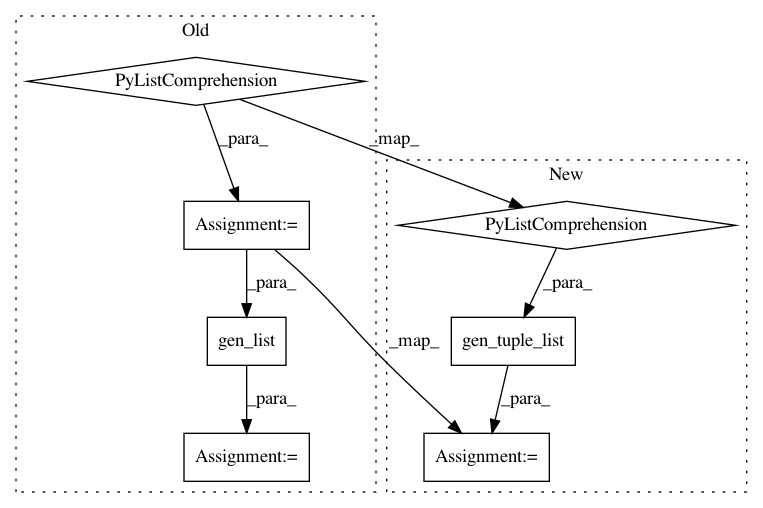

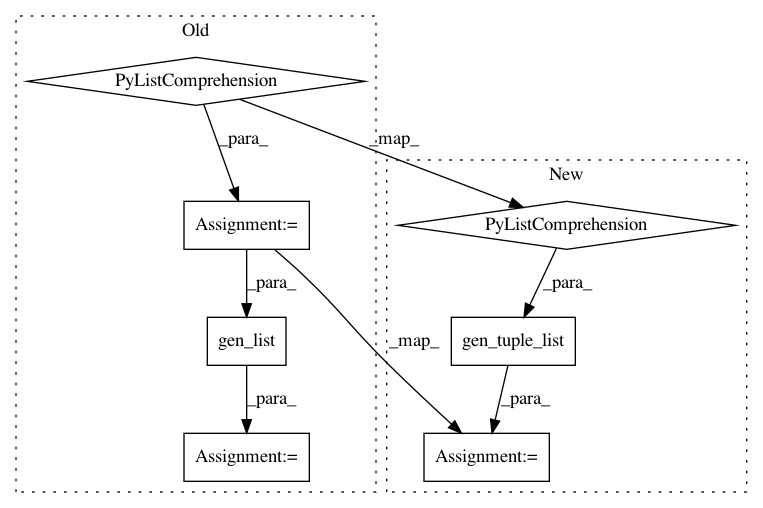
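The two-phase structure of the post-change `train_step` can be sketched in a single process as well. As before, threads stand in for worker processes. `FakeReplica`, `gather_tuples` (a hypothetical analogue of `Future.gen_tuple_list` that transposes per-rank tuples into one list per field), `Criterion`, and the sum-of-sizes `grad_denom` rule are assumptions, and the sketch does not model the gradient all-reduce. It shows the ordering the diff introduces: samples are scattered first, each replica runs only the forward pass and reports its sample size, and the denominator is computed from those sizes through a static method looked up on the criterion's class before the backward phase begins.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, List, Tuple


def gather_tuples(futures: List[Future]) -> Tuple[list, ...]:
    """Hypothetical analogue of Future.gen_tuple_list: wait for futures that
    each return a tuple, then transpose into one list per tuple field."""
    results = [f.result() for f in futures]
    return tuple(list(col) for col in zip(*results))


class FakeReplica:
    """Hypothetical worker that keeps its scattered sample between phases."""

    def __init__(self, rank: int) -> None:
        self.rank = rank
        self.sample: List[int] = []
        self.loss = 0.0

    def forward(self) -> Tuple[int, Dict[str, float]]:
        # Phase 1: forward only; report this replica's sample size and a log entry.
        self.loss = float(sum(self.sample))
        return len(self.sample), {"loss": self.loss}

    def backward_and_step(self, grad_denom: float) -> float:
        # Phase 2: scale by the global denominator. The returned value is a
        # placeholder "gradient norm" (here just the normalized loss).
        return self.loss / grad_denom


class Criterion:
    @staticmethod
    def grad_denom(sample_sizes: List[int]) -> float:
        # Assumed rule: total sample size across replicas.
        return float(sum(sample_sizes))


def train_step_after(replicas: List[FakeReplica], samples: List[List[int]], criterion: Criterion):
    # Scatter: each replica receives its own sample before any computation.
    for r in replicas:
        r.sample = samples[r.rank]
    with ThreadPoolExecutor(max_workers=len(replicas)) as pool:
        sample_sizes, logging_outputs = gather_tuples(
            [pool.submit(r.forward) for r in replicas]
        )
        # The denominator now depends on sizes reported by the forward pass.
        denom = criterion.__class__.grad_denom(sample_sizes)
        futures = [pool.submit(r.backward_and_step, denom) for r in replicas]
        grad_norms = [f.result() for f in futures]
    return sample_sizes, logging_outputs, denom, grad_norms
```

One plausible reading of the change is that computing the denominator from sizes reported by each replica (after `_scatter_samples` has handled any empty samples) keeps it consistent with what the replicas actually processed, rather than deriving it from the raw batch up front.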

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 7

Instances

Project Name: pytorch/fairseq

Commit Name: 3f9700868da4d09988b7dfbd7d5b3a9d5ea7d401

Time: 2017-10-19

Author: myleott@fb.com

File Name: fairseq/multiprocessing_trainer.py

Class Name: MultiprocessingTrainer

Method Name: train_step

Project Name: elbayadm/attn2d

Commit Name: 3f9700868da4d09988b7dfbd7d5b3a9d5ea7d401

Time: 2017-10-19

Author: myleott@fb.com

File Name: fairseq/multiprocessing_trainer.py

Class Name: MultiprocessingTrainer

Method Name: valid_step