0a68639f4c33323274e7829b9349d0170dc6c8ea,train.py,,classifier,#,67

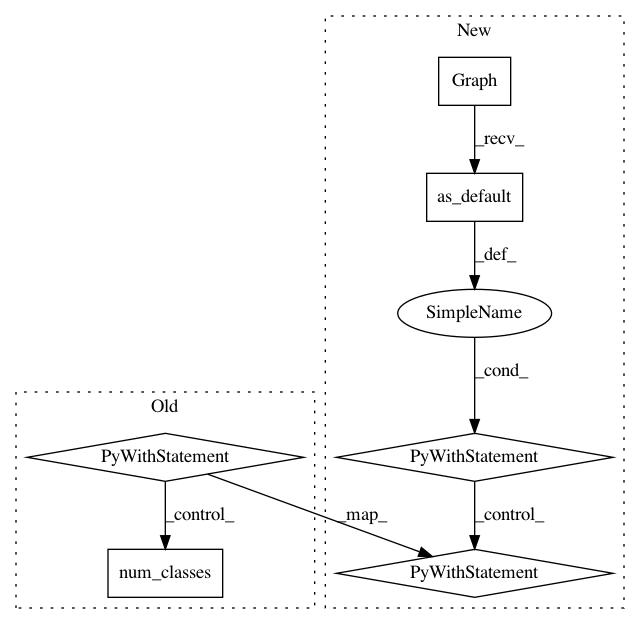

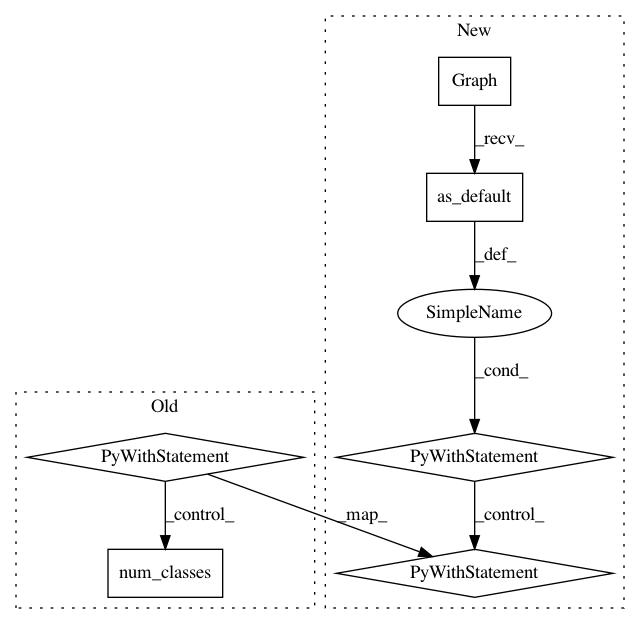

Before Change

```python
best_va = 0.0

with tf.device(ARGS.train_device):
    global_step = tf.Variable(0, trainable=False, name="global_step")

    # Get images and labels
    with tf.device("/cpu:0"):
        images, labels = DATASET.distorted_inputs(ARGS.batch_size)
    log_io(images)

    # Build a Graph that computes the logits predictions from the
    # inference model.
    is_training_, logits = MODEL.get(
        images,
        DATASET.num_classes(),
        train_phase=True,
        l2_penalty=ARGS.l2_penalty)

    # Calculate loss.
    loss = MODEL.loss(logits, labels)
    tf_log(tf.summary.scalar("loss", loss))

    # Create optimizer and log learning rate
    optimizer = build_optimizer(global_step)
    train_op = optimizer.minimize(
        loss,
        global_step=global_step,
        var_list=variables_to_train(ARGS.trainable_scopes))

    train_accuracy = utils.accuracy_op(logits, labels)

    # General validation summary
    accuracy_value_ = tf.placeholder(tf.float32, shape=())
    accuracy_summary = tf.summary.scalar("accuracy", accuracy_value_)

    # read collection after that every op added its own
    # summaries in the train_summaries collection
    train_summaries = tf.summary.merge(
        tf.get_collection_ref(MODEL_SUMMARIES))

    # Build an initialization operation to run below.
    init = [
        tf.variables_initializer(tf.global_variables() +
                                 tf.local_variables()),
        tf.tables_initializer()
    ]

    # Start running operations on the Graph.
    with tf.Session(config=tf.ConfigProto(
            allow_soft_placement=True)) as sess:
        sess.run(init)

        # Start the queue runners with a coordinator
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)

        # Create the savers.
        train_saver, best_saver = build_train_savers([global_step])
        restore_or_restart(sess, global_step)
        train_log, validation_log = build_loggers(sess.graph)

        # Extract previous global step value
        old_gs = sess.run(global_step)

        # Restart from where we were
        for step in range(old_gs, MAX_STEPS):
            start_time = time.time()
            _, loss_value = sess.run(
                [train_op, loss], feed_dict={is_training_: True})
            duration = time.time() - start_time

            if np.isnan(loss_value):
                print("Model diverged with loss = NaN")
                break

            # update logs every 10 iterations
            if step % 10 == 0:
                examples_per_sec = ARGS.batch_size / duration
                sec_per_batch = float(duration)

                format_str = ("{}: step {}, loss = {:.4f} "
                              "({:.1f} examples/sec; {:.3f} sec/batch)")
                print(
                    format_str.format(datetime.now(), step, loss_value,
                                      examples_per_sec, sec_per_batch))

                # log train values
                summary_lines = sess.run(
                    train_summaries, feed_dict={is_training_: True})
                train_log.add_summary(summary_lines, global_step=step)

            # Save the model checkpoint at the end of every epoch
            # evaluate train and validation performance
            if (step > 0 and
                    step % STEPS_PER_EPOCH == 0) or (step + 1) == MAX_STEPS:
                checkpoint_path = os.path.join(LOG_DIR, "model.ckpt")
                train_saver.save(sess, checkpoint_path, global_step=step)

                # validation accuracy
                va_value = eval_model(LOG_DIR, InputType.validation)
                summary_line = sess.run(
                    accuracy_summary, feed_dict={accuracy_value_: va_value})
                validation_log.add_summary(summary_line, global_step=step)

                # train accuracy
                ta_value = sess.run(
                    train_accuracy, feed_dict={is_training_: False})
                summary_line = sess.run(
                    accuracy_summary, feed_dict={accuracy_value_: ta_value})
                train_log.add_summary(summary_line, global_step=step)

                print(
                    "{} ({}): train accuracy = {:.3f} validation accuracy = {:.3f}".
                    format(datetime.now(),
                           int(step / STEPS_PER_EPOCH), ta_value, va_value))

                # save best model
                if va_value > best_va:
                    best_va = va_value
                    best_saver.save(
                        sess,
                        os.path.join(BEST_MODEL_DIR, "model.ckpt"),
                        global_step=step)
        # end of for

        validation_log.close()
        train_log.close()

        # When done, ask the threads to stop.
        coord.request_stop()

        # Wait for threads to finish.
        coord.join(threads)
```
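The checkpoint-and-evaluate condition in the loop is easy to misread, so here is a minimal pure-Python sketch of it. `eval_steps` and `best_model_steps` are hypothetical helpers that only replicate the two conditions in the code (`(step > 0 and step % STEPS_PER_EPOCH == 0) or (step + 1) == MAX_STEPS` and `va_value > best_va`); the step counts and accuracies are made-up inputs, not results from the project.

```python
def eval_steps(start_step, max_steps, steps_per_epoch):
    """Return the steps at which the loop would checkpoint and evaluate.

    Mirrors: (step > 0 and step % STEPS_PER_EPOCH == 0) or (step + 1) == MAX_STEPS
    """
    return [
        step
        for step in range(start_step, max_steps)
        if (step > 0 and step % steps_per_epoch == 0) or (step + 1) == max_steps
    ]


def best_model_steps(eval_results, best_va=0.0):
    """Return the evaluation steps at which a new best model would be saved.

    eval_results is a list of (step, validation_accuracy) pairs. A save
    happens only on a strict improvement, like `if va_value > best_va`.
    """
    saved = []
    for step, va_value in eval_results:
        if va_value > best_va:
            best_va = va_value
            saved.append(step)
    return saved


print(eval_steps(0, 12, 5))  # [5, 10, 11]
print(eval_steps(7, 12, 5))  # [10, 11]  (resuming from a restored global step)
print(best_model_steps([(5, 0.61), (10, 0.58), (11, 0.64)]))  # [5, 11]
```

As written, `best_va` starts at `0.0` on every call and is a plain Python float, so it is not restored with the checkpoint; the first evaluation after a resume therefore always triggers a best-model save.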

```python
def autoencoder():
    """Train the autoencoder and saves the best model:
    that's the model with the lower validation error.
    """
```

After Change

```python
best_va = 0.0

with tf.Graph().as_default(), tf.device(ARGS.train_device):
    global_step = tf.Variable(0, trainable=False, name="global_step")

    # Get images and labels
    with tf.device("/cpu:0"):
        images, labels = DATASET.distorted_inputs(ARGS.batch_size)
    log_io(images)

    # Build a Graph that computes the logits predictions from the
    # inference model.
    is_training_, logits = MODEL.get(
        images,
        DATASET.num_classes,
        train_phase=True,
        l2_penalty=ARGS.l2_penalty)

    # Calculate loss.
    loss = MODEL.loss(logits, labels)
    tf_log(tf.summary.scalar("loss", loss))

    # Create optimizer and log learning rate
    optimizer = build_optimizer(global_step)
    train_op = optimizer.minimize(
        loss,
        global_step=global_step,
        var_list=variables_to_train(ARGS.trainable_scopes))

    train_accuracy = utils.accuracy_op(logits, labels)

    # General validation summary
    accuracy_value_ = tf.placeholder(tf.float32, shape=())
    accuracy_summary = tf.summary.scalar("accuracy", accuracy_value_)

    # read collection after that every op added its own
    # summaries in the train_summaries collection
    train_summaries = tf.summary.merge(
        tf.get_collection_ref(MODEL_SUMMARIES))

    # Build an initialization operation to run below.
    init = [
        tf.variables_initializer(tf.global_variables() +
                                 tf.local_variables()),
        tf.tables_initializer()
    ]

    # Start running operations on the Graph.
    with tf.Session(config=tf.ConfigProto(
            allow_soft_placement=True)) as sess:
        sess.run(init)

        # Start the queue runners with a coordinator
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)

        # Create the savers.
        train_saver, best_saver = build_train_savers([global_step])
        restore_or_restart(sess, global_step)
        train_log, validation_log = build_loggers(sess.graph)

        # Extract previous global step value
        old_gs = sess.run(global_step)

        # Restart from where we were
        for step in range(old_gs, MAX_STEPS):
            start_time = time.time()
            _, loss_value = sess.run(
                [train_op, loss], feed_dict={is_training_: True})
            duration = time.time() - start_time

            if np.isnan(loss_value):
                print("Model diverged with loss = NaN")
                break

            # update logs every 10 iterations
            if step % 10 == 0:
                examples_per_sec = ARGS.batch_size / duration
                sec_per_batch = float(duration)

                format_str = ("{}: step {}, loss = {:.4f} "
                              "({:.1f} examples/sec; {:.3f} sec/batch)")
                print(
                    format_str.format(datetime.now(), step, loss_value,
                                      examples_per_sec, sec_per_batch))

                # log train values
                summary_lines = sess.run(
                    train_summaries, feed_dict={is_training_: True})
                train_log.add_summary(summary_lines, global_step=step)

            # Save the model checkpoint at the end of every epoch
            # evaluate train and validation performance
            if (step > 0 and
                    step % STEPS_PER_EPOCH == 0) or (step + 1) == MAX_STEPS:
                checkpoint_path = os.path.join(LOG_DIR, "model.ckpt")
                train_saver.save(sess, checkpoint_path, global_step=step)

                # validation accuracy
                va_value = eval_model(LOG_DIR, InputType.validation)
                summary_line = sess.run(
                    accuracy_summary, feed_dict={accuracy_value_: va_value})
                validation_log.add_summary(summary_line, global_step=step)

                # train accuracy
                ta_value = sess.run(
                    train_accuracy, feed_dict={is_training_: False})
                summary_line = sess.run(
                    accuracy_summary, feed_dict={accuracy_value_: ta_value})
                train_log.add_summary(summary_line, global_step=step)

                print(
                    "{} ({}): train accuracy = {:.3f} validation accuracy = {:.3f}".
                    format(datetime.now(),
                           int(step / STEPS_PER_EPOCH), ta_value, va_value))

                # save best model
                if va_value > best_va:
                    best_va = va_value
                    best_saver.save(
                        sess,
                        os.path.join(BEST_MODEL_DIR, "model.ckpt"),
                        global_step=step)
        # end of for

        validation_log.close()
        train_log.close()

        # When done, ask the threads to stop.
        coord.request_stop()

        # Wait for threads to finish.
        coord.join(threads)
```

```python
def autoencoder():
    """Train the autoencoder and saves the best model:
    that's the model with the lower validation error.
    """
```
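The second difference is at the `MODEL.get` call site: `DATASET.num_classes()` became `DATASET.num_classes`. The record shows only the call site, so this sketch assumes the dataset class now exposes `num_classes` as a read-only property; `Dataset` is a hypothetical class, not the project's code. It also illustrates why every caller has to change together, which would fit the pattern's frequency of 3 (`classifier`, `detector`, `regressor`): once `num_classes` evaluates to a plain `int`, the old call style raises `TypeError`.

```python
class Dataset:
    """Hypothetical dataset class; not the project's actual implementation."""

    def __init__(self, num_classes):
        self._num_classes = num_classes

    @property
    def num_classes(self):
        # Attribute-style access: dataset.num_classes (no parentheses).
        return self._num_classes


dataset = Dataset(10)
print(dataset.num_classes)  # 10

try:
    dataset.num_classes()  # Before Change call style
except TypeError as err:
    print("TypeError:", err)  # TypeError: 'int' object is not callable
```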

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| galeone/dynamic-training-bench | 0a68639f4c33323274e7829b9349d0170dc6c8ea | 2017-02-08 | nessuno@nerdz.eu | train.py | | classifier |
| galeone/dynamic-training-bench | 0a68639f4c33323274e7829b9349d0170dc6c8ea | 2017-02-08 | nessuno@nerdz.eu | train.py | | detector |
| galeone/dynamic-training-bench | 0a68639f4c33323274e7829b9349d0170dc6c8ea | 2017-02-08 | nessuno@nerdz.eu | train.py | | regressor |