7fa458e2c4c5df5a9d2cc4e66b2472cd9f3377a8,python/baseline/model.py,Tagger,predict_text,#Tagger#Any#,207

Before Change

#lengths = zero_alloc(1, dtype=int)
#lengths[0] = min(len(tokens), mxlen)
data = featurizer.run(tokens)
lengths = data["lengths"]
indices = self.predict(data)[0]
output = []
for j in range(lengths[0]):
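The commented-out lines record how the loop bound used to be computed: the token count clipped to `mxlen`, so that only positions the model actually saw are decoded. Below is a minimal sketch of that bound, not mead-baseline code. It assumes plain string tokens, one predicted label id per padded position, and an index-to-label `dict`; the helper name `decode_clipped` is hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def decode_clipped(
    tokens: Sequence[str],
    indices: Sequence[int],
    mxlen: int,
    idx_to_label: Dict[int, str],
) -> List[Tuple[str, str]]:
    """Pair each token with its predicted label, stopping at mxlen.

    Hypothetical helper: `indices` is assumed to hold one label id per
    padded position, so anything past min(len(tokens), mxlen) is either
    padding or a token that was truncated before reaching the model.
    """
    # Same bound as the commented-out `min(len(tokens), mxlen)` line.
    length = min(len(tokens), mxlen)
    return [(tokens[j], idx_to_label[indices[j]]) for j in range(length)]


if __name__ == "__main__":
    labels = {0: "<PAD>", 1: "O", 2: "B-PER"}
    # Three tokens but mxlen=2, so the third token is never decoded.
    print(decode_clipped(["John", "runs", "fast"], [2, 1, 0, 0], mxlen=2, idx_to_label=labels))
    # [('John', 'B-PER'), ('runs', 'O')]
```

Clipping the bound this way avoids reading label ids for tokens the model never received.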

After Change

mxlen = kwargs.get("mxlen", self.mxlen if hasattr(self, "mxlen") else len(tokens))
maxw = kwargs.get("maxw", self.maxw if hasattr(self, "maxw") else max([len(token) for token in tokens]))
word_tokenizer = Dict1DVectorizer(mxlen=mxlen, fields="text")
char_tokenizer = Dict2DVectorizer(mxlen=mxlen, mxwlen=maxw, fields="text")
vectorizers = {"word": word_tokenizer, "char": char_tokenizer}
# This might be inefficient if the label space is large
label_vocab = revlut(self.get_labels())
batch_dict = dict()
for k, vectorizer in vectorizers.items():
    value, length = vectorizer.run(tokens, self.embeddings[k].vocab)
    batch_dict[k] = value
    if length is not None:
        batch_dict["{}_lengths".format(k)] = length
indices = self.predict(batch_dict)[0]
output = []
for j in range(len(tokens)):
    output.append((tokens[j], label_vocab[indices[j].item()]))
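The new version builds a `batch_dict` keyed by feature name, adds a `{name}_lengths` entry only when a vectorizer reports a length, and decodes predictions through an inverted label table. The sketch below mirrors that flow with hypothetical stand-ins. `word_vectorizer` and `char_vectorizer` are simplified assumptions, not the real `Dict1DVectorizer`/`Dict2DVectorizer` API. Tokens are plain strings rather than dicts with a `"text"` field. `vocabs` stands in for `self.embeddings[k].vocab`, and `revlut` is reimplemented here as a plain dictionary inversion. The character vectorizer is assumed to return no length so that the `if length is not None` branch is exercised.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical vectorizer shape: (tokens, vocab) -> (padded ids, length or None).
Vectorizer = Callable[[Sequence[str], Dict[str, int]], Tuple[list, Optional[int]]]


def word_vectorizer(mxlen: int) -> Vectorizer:
    """Hypothetical 1D vectorizer: one id per token, padded/truncated to mxlen."""
    def run(tokens: Sequence[str], vocab: Dict[str, int]) -> Tuple[list, Optional[int]]:
        ids = [vocab.get(t, vocab["<UNK>"]) for t in tokens[:mxlen]]
        length = len(ids)
        ids += [vocab["<PAD>"]] * (mxlen - length)
        return ids, length
    return run


def char_vectorizer(mxlen: int, maxw: int) -> Vectorizer:
    """Hypothetical 2D vectorizer: an mxlen x maxw grid of character ids."""
    def run(tokens: Sequence[str], vocab: Dict[str, int]) -> Tuple[list, Optional[int]]:
        rows = []
        for t in tokens[:mxlen]:
            row = [vocab.get(c, vocab["<UNK>"]) for c in t[:maxw]]
            rows.append(row + [vocab["<PAD>"]] * (maxw - len(row)))
        rows += [[vocab["<PAD>"]] * maxw for _ in range(mxlen - len(rows))]
        # Assumed to report no length, so no "char_lengths" key is created.
        return rows, None
    return run


def revlut(lut: Dict[str, int]) -> Dict[int, str]:
    """Invert a label -> index table into index -> label."""
    return {index: label for label, index in lut.items()}


def build_batch(
    tokens: Sequence[str],
    vectorizers: Dict[str, Vectorizer],
    vocabs: Dict[str, Dict[str, int]],
) -> Dict[str, object]:
    """Same loop shape as predict_text: one entry per feature, plus optional lengths."""
    batch: Dict[str, object] = {}
    for k, vectorizer in vectorizers.items():
        value, length = vectorizer(tokens, vocabs[k])
        batch[k] = value
        if length is not None:
            batch["{}_lengths".format(k)] = length
    return batch


def decode(tokens: Sequence[str], indices: Sequence[int], label_vocab: Dict[int, str]) -> List[Tuple[str, str]]:
    """Pair every token with its label, iterating with range(len(tokens))."""
    return [(tokens[j], label_vocab[indices[j]]) for j in range(len(tokens))]


if __name__ == "__main__":
    tokens = ["hi", "bob"]
    vocabs = {
        "word": {"<PAD>": 0, "<UNK>": 1, "hi": 2, "bob": 3},
        "char": {"<PAD>": 0, "<UNK>": 1, "h": 2, "i": 3, "b": 4, "o": 5},
    }
    vectorizers = {"word": word_vectorizer(mxlen=3), "char": char_vectorizer(mxlen=3, maxw=3)}
    print(build_batch(tokens, vectorizers, vocabs))
    # {'word': [2, 3, 0], 'word_lengths': 2, 'char': [[2, 3, 0], [4, 5, 4], [0, 0, 0]]}
    print(decode(tokens, [1, 0, 0], revlut({"O": 0, "B-PER": 1})))
    # [('hi', 'B-PER'), ('bob', 'O')]
```

Keeping each feature under its own key lets the model pick up whichever inputs it was trained with, and the `_lengths` suffix convention keeps sequence lengths next to the feature they describe. In the real method, predictions are tensors, which is why the original calls `.item()` on each index; this sketch uses plain ints instead.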

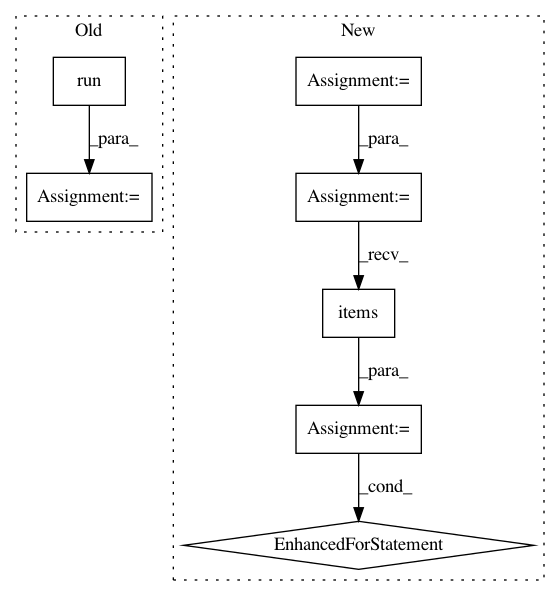

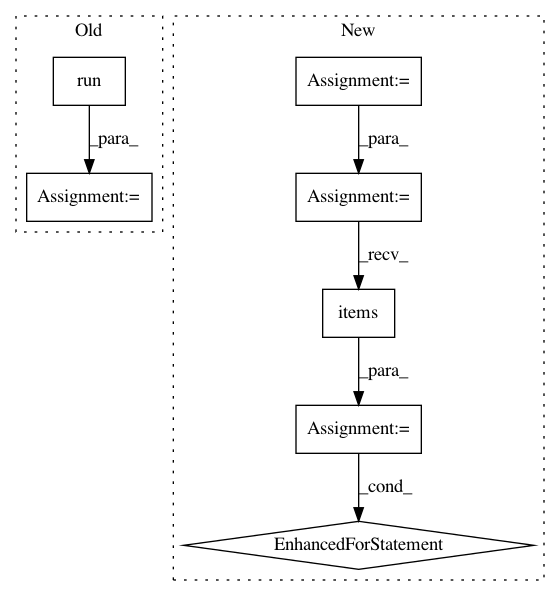

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: dpressel/mead-baseline

Commit Name: 7fa458e2c4c5df5a9d2cc4e66b2472cd9f3377a8

Time: 2018-09-17

Author: dpressel@gmail.com

File Name: python/baseline/model.py

Class Name: Tagger

Method Name: predict_text

Project Name: deepfakes/faceswap

Commit Name: acc6553f80d17469bedfdcdab2ea676478a49d9d

Time: 2019-07-04

Author: 36920800+torzdf@users.noreply.github.com

File Name: tools/effmpeg.py

Class Name: Effmpeg

Method Name: get_info

Project Name: uber/ludwig

Commit Name: 360f6e8aee7989b7e649c21883026612964b9cf7

Time: 2020-03-06

Author: jimthompson5802@aol.com

File Name: ludwig/models/model.py

Class Name: Model

Method Name: batch_evaluation