6d3ec6c28f32560121d93b8fcab2cf7924f59dc5,thinc/layers/pytorchwrapper.py,,forward,#Any#Any#Any#,27

Before Change

```python
else:
    pytorch_model.eval()
    x_args, x_kwargs = model.prepare_input(x_data, is_update=False)
    with torch.no_grad():
        y_var = pytorch_model(*x_args, **x_kwargs)
    self._model.train()
    return model.prepare_output(y_var)
y = model.prepare_output(y_var)
def backward_pytorch(dy_data):
    d_args, d_kwargs = model.prepare_backward_input(dy_data, y_var)
    torch.autograd.backward(*d_args, **d_kwargs, retain_graph=True)
```
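The `else` branch above follows a common PyTorch inference idiom: switch the module to eval mode, run the forward pass under `torch.no_grad()`, then switch back to training mode. The pure-Python sketch below models only the mode-switching part. It assumes a hypothetical `ToyModule` that mimics the `training` flag and the `train()`/`eval()` methods of `torch.nn.Module`, and it does not model gradient tracking. The hypothetical `evaluating` context manager restores whatever mode the module was in beforehand, whereas the excerpt calls `train()` unconditionally.

```python
from contextlib import contextmanager


class ToyModule:
    """Hypothetical stand-in for the train/eval flag of torch.nn.Module."""

    def __init__(self):
        self.training = True  # torch modules also start in training mode

    def train(self, mode=True):
        self.training = mode
        return self

    def eval(self):
        return self.train(False)

    def __call__(self, x):
        # Report which mode the forward pass ran in, alongside a toy output.
        return ("train" if self.training else "eval"), x * 2


@contextmanager
def evaluating(module):
    """Put `module` in eval mode for the block, then restore its previous mode."""
    was_training = module.training
    module.eval()
    try:
        yield module
    finally:
        module.train(was_training)


model = ToyModule()
with evaluating(model):
    mode, y = model(3)
print(mode, y, model.training)  # eval 6 True
```

Restoring the saved flag in a `finally` clause means a module that was already in eval mode stays there, and an exception inside the block does not leave the module stuck in eval mode.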

After Change

```python
pytorch_model = model.shims[0]
X_torch = xp2torch(X, requires_grad=is_train)
Y_torch, torch_backprop = pytorch_model((X_torch,), {}, is_train)
Y = torch2xp(Y_torch)

def backprop(dY):
```
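In the new version, the shim call returns both the output and a backprop callback, and the wrapper converts data on the way in (`xp2torch`) and on the way out (`torch2xp`). The excerpt stops at `def backprop(dY):`, so the callback's body is not shown. The following is a rough pure-Python sketch of the same shape, not the thinc implementation. It assumes that tuples stand in for torch tensors, that lists stand in for `xp` arrays, and that a hypothetical `inner_forward` plays the role of the shim. The hypothetical `wrapper_forward` converts the gradient in, calls the inner callback, and converts the result back out.

```python
from typing import Callable, List, Tuple

Backprop = Callable[[List[float]], List[float]]


def inner_forward(X: Tuple[float, ...], is_train: bool):
    """Hypothetical wrapped model: elementwise Y = 3 * X, in its own tuple format."""
    # is_train is unused here; a real model might use it to skip gradient bookkeeping.
    Y = tuple(3.0 * x for x in X)

    def inner_backprop(dY: Tuple[float, ...]) -> Tuple[float, ...]:
        # dL/dX = 3 * dL/dY for an elementwise scale by 3
        return tuple(3.0 * dy for dy in dY)

    return Y, inner_backprop


def wrapper_forward(X: List[float], is_train: bool) -> Tuple[List[float], Backprop]:
    X_inner = tuple(X)  # plays the role of xp2torch
    Y_inner, inner_backprop = inner_forward(X_inner, is_train)
    Y = list(Y_inner)  # plays the role of torch2xp

    def backprop(dY: List[float]) -> List[float]:
        dX_inner = inner_backprop(tuple(dY))  # convert gradient in, call inner callback
        return list(dX_inner)  # convert gradient back to the outer format

    return Y, backprop


Y, backprop = wrapper_forward([1.0, 2.0], is_train=True)
print(Y, backprop([1.0, 1.0]))  # [3.0, 6.0] [3.0, 3.0]
```

Because the callback closes over the inner callback, the wrapper needs no separate train/eval branch. Whether gradients are tracked is decided once, by passing `is_train` down to the wrapped model.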

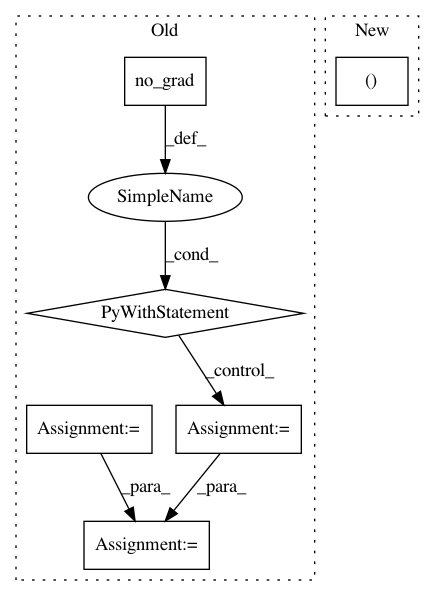

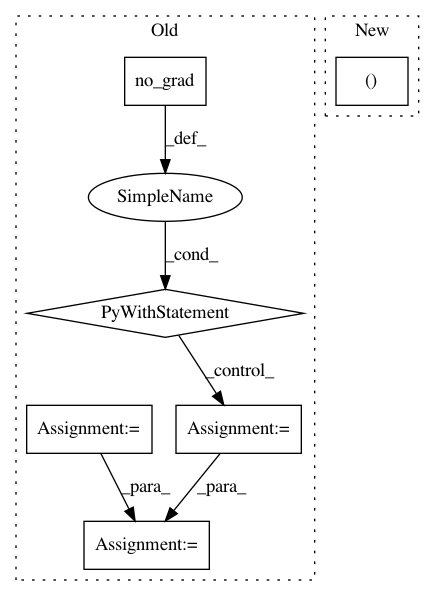

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: explosion/thinc

Commit Name: 6d3ec6c28f32560121d93b8fcab2cf7924f59dc5

Time: 2020-01-02

Author: honnibal+gh@gmail.com

File Name: thinc/layers/pytorchwrapper.py

Class Name:

Method Name: forward

Project Name: elbayadm/attn2d

Commit Name: ef17941545c6d742de717d9769b2a412d9924e4e

Time: 2018-06-15

Author: myleott@fb.com

File Name: fairseq/sequence_generator.py

Class Name: SequenceGenerator

Method Name: _decode